Last edited by pig2 on 2014-11-21 14:15

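Guiding questions

1. How many steps do you think it takes to install OpenStack Icehouse on RHEL 6.5?

2. How is the software repository configured, and what is it for?

3. Which firewall rules are needed?

4. Which OpenStack components get installed, and what do you know about each of them?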

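Environment

Three physical servers or virtual machines make up the test environment, serving as the controller node, the compute node, and the network node. The overall architecture is shown below:

The controller node runs MySQL, RabbitMQ, Keystone, Glance, Swift, Cinder, memcached, and the Dashboard. Recommended: 2 CPUs, 2 GB of RAM, 1 NIC, and 20 GB of disk.

The compute node runs Nova and hosts the virtual machines. It must be able to run VMs, so use a physical server or a VM that can itself start VMs; for example, a VM on VMware Workstation with VT passed through can start VMs of its own. Recommended: 4 CPUs, 4 GB of RAM, 1 NIC, and 10 GB of disk.

The network node runs Neutron and provides networking for the whole platform. Recommended: 2 CPUs, 1 GB of RAM, 2 NICs, and 10 GB of disk.

Hostnames and IP addresses of the three servers:

Hostname controller, eth0 192.168.1.233/255.255.255.0

Hostname compute, eth0 192.168.1.232/255.255.255.0

Hostname network, eth0 192.168.1.231/255.255.255.0

On all three servers eth0 is the internal network, used for communication between the OpenStack components; eth1 is the external network.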

Operating system preparation

Operating system installation

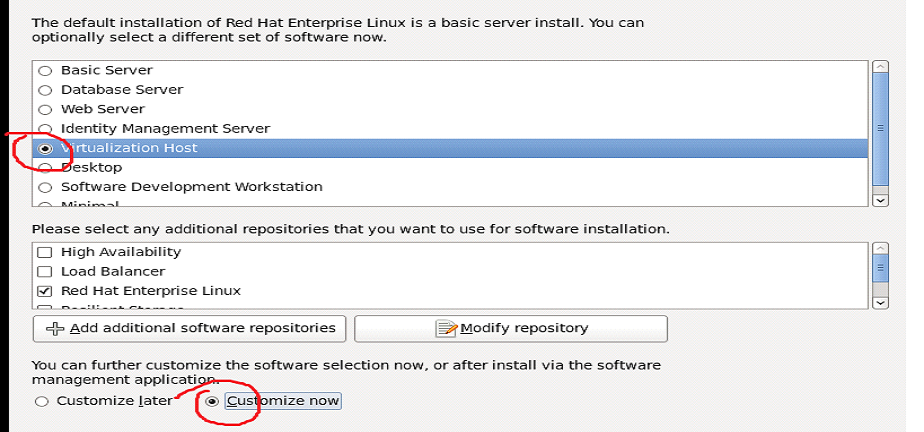

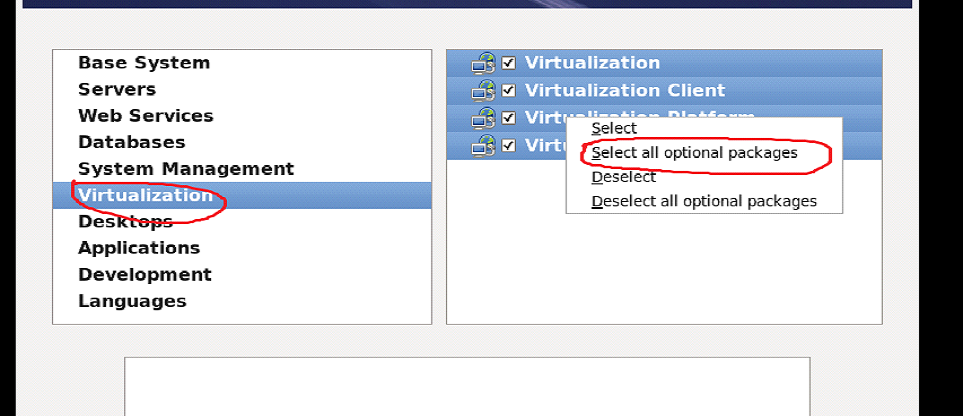

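Install Red Hat Enterprise Linux 6.5 x86_64 on all three machines. Set the time zone to Shanghai and clear the UTC option. At package selection, choose Virtualization Host and install all of the virtualization packages, as shown in the figure.

Set the hostname and check the NIC configuration file

Set the hostname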

[root@controller ~]# cat /etc/sysconfig/network

NETWORKING=yes

HOSTNAME=controller

Check the NIC configuration, then restart networking with service network restart

[root@controller ~]# cat /etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=no

BOOTPROTO=static

IPADDR=192.168.1.233

NETMASK=255.255.255.0

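Edit /etc/sysctl.conf and set net.ipv4.ip_forward to 1, together with the bridge settings below (this enables IP forwarding and makes iptables apply to bridged traffic)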

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-arptables = 1
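A quick way to confirm that all four keys ended up in the file is to scan it. The sketch below is only an illustration; the function name missing_sysctl_keys is made up for this post and is not part of any tool.

```bash
#!/bin/bash
# Print every required key that is NOT set to 1 in a sysctl-style file.
# Usage: missing_sysctl_keys /etc/sysctl.conf
missing_sysctl_keys() {
    local conf="$1" key
    for key in net.ipv4.ip_forward \
               net.bridge.bridge-nf-call-ip6tables \
               net.bridge.bridge-nf-call-iptables \
               net.bridge.bridge-nf-call-arptables; do
        # Split each line on "=", strip whitespace, and look for "key = 1".
        if ! awk -F= -v k="$key" '
                { gsub(/[[:space:]]/, "", $1); gsub(/[[:space:]]/, "", $2) }
                $1 == k && $2 == "1" { found = 1 }
                END { exit !found }' "$conf"; then
            echo "$key"
        fi
    done
}
```

If missing_sysctl_keys /etc/sysctl.conf prints nothing, the file is complete and sysctl -p can load it without waiting for the reboot.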

Edit /etc/security/limits.conf and add the following (raises the maximum number of open files):

* soft nproc 65535

* hard nproc 65535

* soft nofile 65535

* hard nofile 65535

* soft core unlimited

* hard core unlimited

Edit /etc/selinux/config and disable SELinux

SELINUX=disabled

Reboot the server

Remove the bridge that ships with libvirt, in preparation for using Open vSwitch

mv /etc/libvirt/qemu/networks/default.xml /etc/libvirt/qemu/networks/default.xml.bak

modprobe -r bridge

Delete /etc/udev/rules.d/70-persistent-net.rules

Shut down the server

Clone it twice; the clones become compute and network

On compute and network, change the hostname and network settings in:

/etc/sysconfig/network

/etc/sysconfig/network-scripts/ifcfg-eth0

Reboot the servers
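The hostname and address changes on the two clones could also be scripted rather than made by hand. A minimal sketch, assuming the files already contain HOSTNAME= and IPADDR= lines as shown earlier; set_host_identity is a hypothetical helper name.

```bash
#!/bin/bash
# Rewrite HOSTNAME and IPADDR in the two files edited by hand above.
# Arguments: network file, ifcfg file, new hostname, new IP address.
set_host_identity() {
    local netfile="$1" ifcfg="$2" name="$3" ip="$4"
    # Replace the whole line so any previous value is discarded.
    sed -i "s/^HOSTNAME=.*/HOSTNAME=${name}/" "$netfile"
    sed -i "s/^IPADDR=.*/IPADDR=${ip}/" "$ifcfg"
}
```

On the compute clone this would be called as set_host_identity /etc/sysconfig/network /etc/sysconfig/network-scripts/ifcfg-eth0 compute 192.168.1.232, and on the network clone with network and 192.168.1.231.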

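Software repository configuration

The controller node serves as the repository server; the other nodes access it over HTTP.

Mount the RHEL 6.5 DVD on the server, then create the repo file, for example:

mount /dev/cdrom /mnt

vi /etc/yum.repos.d/rhel65.repo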

[rhel65_Server]

name=RHEL65_Server

baseurl=file:///mnt/Server

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

yum install httpd

Copy the repositories provided for this lab (rabbitmq, redhat-openstack-icehouse) into /var/www/html to build the yum sources

cp redhat-openstack-icehouse.tar /var/www/html/

cp epel-depends.tar /var/www/html

cd /var/www/html

tar xvf redhat-openstack-icehouse.tar

tar xvf epel-depends.tar

Create an rhel65 directory and copy the contents of the RHEL 6.5 DVD into it

mkdir rhel65

cp -r /mnt/* /var/www/html/rhel65/

Copy icehouse.repo, rabbitmq.repo, rhel65.repo and epel-depends.repo into /etc/yum.repos.d/

Start the httpd service

service httpd start

chkconfig httpd on

curl http://192.168.1.233/rabbitmq/

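Check that the repository is reachable.

Preparation for the Cinder block storage (EBS-style) service and the Swift object storage service

On the controller node, add a new 10 GB disk and put it in its own VG named vgstorage; this VG will be shared by Cinder and Swift.

Assume the new disk is sdb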

pvcreate /dev/sdb

vgcreate vgstorage /dev/sdb

Controller node installation (192.168.1.233)

Firewall configuration

Edit /etc/sysconfig/iptables and add the following rules to the filter table.

-I INPUT -p tcp --dport 80 -j ACCEPT

-I INPUT -p tcp --dport 3306 -j ACCEPT

-I INPUT -p tcp --dport 5000 -j ACCEPT

-I INPUT -p tcp --dport 5672 -j ACCEPT

-I INPUT -p tcp --dport 8080 -j ACCEPT

-I INPUT -p tcp --dport 8773 -j ACCEPT

-I INPUT -p tcp --dport 8774 -j ACCEPT

-I INPUT -p tcp --dport 8775 -j ACCEPT

-I INPUT -p tcp --dport 8776 -j ACCEPT

-I INPUT -p tcp --dport 8777 -j ACCEPT

-I INPUT -p tcp --dport 9292 -j ACCEPT

-I INPUT -p tcp --dport 9696 -j ACCEPT

-I INPUT -p tcp --dport 15672 -j ACCEPT

-I INPUT -p tcp --dport 55672 -j ACCEPT

-I INPUT -p tcp --dport 35357 -j ACCEPT

-I INPUT -p tcp --dport 11211 -j ACCEPT
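Since every rule has the same shape, the list can be produced from a set of port numbers instead of being typed line by line. A small sketch; gen_accept_rules is a name invented here, and the ports passed in the last line are just a sample.

```bash
#!/bin/bash
# Print one ACCEPT rule per TCP port given as an argument, in the same
# form used in /etc/sysconfig/iptables above.
gen_accept_rules() {
    local port
    for port in "$@"; do
        echo "-I INPUT -p tcp --dport ${port} -j ACCEPT"
    done
}

# Sample call with the first four ports from the list.
gen_accept_rules 80 3306 5000 5672
```

The output still has to be pasted into the filter section of the file; the function only prints text and does not touch the running firewall.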

Restart the firewall: /etc/init.d/iptables restart

RabbitMQ message queue installation

Install the RabbitMQ package

Import the key:

cd /var/www/html/rabbitmq

rpm --import rabbitmq-signing-key-public.asc

yum install rabbitmq-server

Edit /etc/hosts and add the controller hostname after 127.0.0.1

For example, cat /etc/hosts

127.0.0.1 controller localhost.localdomain localhost4 localhost4.localdomain4

Create /etc/rabbitmq/enabled_plugins containing the single line below. Take care not to omit the trailing period.

[rabbitmq_management].

Enable the rabbitmq service at boot

chkconfig rabbitmq-server on

Start the rabbitmq service

service rabbitmq-server start

Afterwards, open http://192.168.1.233:15672 and log in as guest/guest to monitor its status

MySQL database installation

Install the MySQL packages

yum install mysql mysql-server

Set the character encoding to UTF-8

vi /etc/my.cnf

Add the following under [mysqld]

default-character-set=utf8

default-storage-engine=InnoDB

Start the MySQL server

/etc/init.d/mysqld start

Enable the MySQL server at boot

chkconfig mysqld on

Set the MySQL root password to openstack

/usr/bin/mysqladmin -u root password 'openstack'

Keystone installation

Install the Keystone packages

Import the key:

cd /var/www/html/rdo-icehouse-b3

rpm --import RPM-GPG-KEY-RDO-Icehouse

yum install -y openstack-keystone openstack-utils

Create the Keystone service token

export SERVICE_TOKEN=$(openssl rand -hex 10)

echo $SERVICE_TOKEN >/root/ks_admin_token
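The token file holds an administrative secret, so it may be worth creating it with owner-only permissions. The following is a sketch under that assumption, not part of the original procedure; make_token_file is a hypothetical name, and the od fallback is a substitution added here for machines without openssl.

```bash
#!/bin/bash
# Generate a 20-character hex token, store it in a file that only the
# owner can read, and print it so the caller can export it.
make_token_file() {
    local file="$1" token
    if command -v openssl >/dev/null 2>&1; then
        token=$(openssl rand -hex 10)
    else
        # Fallback: read 10 random bytes and print them as hex.
        token=$(od -An -N10 -tx1 /dev/urandom | tr -d ' \n')
    fi
    # The subshell keeps the restrictive umask from leaking into the caller.
    ( umask 077; printf '%s\n' "$token" > "$file" )
    printf '%s\n' "$token"
}
```

It could replace the two commands above with export SERVICE_TOKEN=$(make_token_file /root/ks_admin_token). Note that the umask only affects a newly created file; an existing file keeps its current mode.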

Configure keystone.conf to use UUID tokens

openstack-config --set /etc/keystone/keystone.conf DEFAULT admin_token $SERVICE_TOKEN

openstack-config --set /etc/keystone/keystone.conf token provider keystone.token.providers.uuid.Provider;

openstack-config --set /etc/keystone/keystone.conf sql connection mysql://keystone:keystone@192.168.1.233/keystone;

Initialize and synchronize the Keystone database

openstack-db --init --service keystone --password keystone --rootpw openstack;

Change the ownership of /etc/keystone and start the keystone service

chown -R keystone:keystone /etc/keystone

/etc/init.d/openstack-keystone start

Enable the openstack-keystone service at boot

chkconfig openstack-keystone on

Create the keystone service

export SERVICE_TOKEN=`cat /root/ks_admin_token`

export SERVICE_ENDPOINT=http://192.168.1.233:35357/v2.0

keystone service-create --name=keystone --type=identity --description="Keystone Identity Service"

Output:

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| description | Keystone Identity Service |

| id | 78286cee575e42e4aa1b35d8ebf95444 |

| name | keystone |

| type | identity |

+-------------+----------------------------------+

Create the Keystone endpoint

keystone endpoint-create --service keystone --publicurl 'http://192.168.1.233:5000/v2.0' --adminurl 'http://192.168.1.233:35357/v2.0' --internalurl 'http://192.168.1.233:5000/v2.0' --region beijing

Output:

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| adminurl | http://192.168.1.233:35357/v2.0 |

| id | 90d6a35d55c14d598993aff16765c9ad |

| internalurl | http://192.168.1.233:5000/v2.0 |

| publicurl | http://192.168.1.233:5000/v2.0 |

| region | beijing |

| service_id | 78286cee575e42e4aa1b35d8ebf95444 |

+-------------+----------------------------------+

Create the Keystone tenant admin, user admin, and role admin

keystone user-create --name admin --pass openstack

Output:

+----------+----------------------------------+

| Property | Value |

+----------+----------------------------------+

| email | |

| enabled | True |

| id | c2df4ce7c2db4bc38abbd19c7bb462ff |

| name | admin |

| tenantId | |

+----------+----------------------------------+

keystone role-create --name admin

Output:

+----------+----------------------------------+

| Property | Value |

+----------+----------------------------------+

| id | 267b8d33846e4b66bd45c821ff02ada2 |

| name | admin |

+----------+----------------------------------+

keystone tenant-create --name admin

Output:

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| description | |

| enabled | True |

| id | a85bdecd1f164f91a4cc0470ec7fc28e |

| name | admin |

+-------------+----------------------------------+

Associate the admin tenant, user, and role.

keystone user-role-add --user admin --role admin --tenant admin

Create an environment file for Keystone operations

vi /root/keystone_admin

export OS_USERNAME=admin

export OS_TENANT_NAME=admin

export OS_PASSWORD=openstack

export OS_AUTH_URL=http://192.168.1.233:35357/v2.0/

export PS1='[\u@\h \W(keystone_admin)]\$ '
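If more than one account needs such a file, it can be generated rather than typed. A rough sketch, assuming simple passwords without quotes or shell metacharacters; write_rc is a made-up helper, and the auth URL is the one used above.

```bash
#!/bin/bash
# Write a credentials file in the same shape as /root/keystone_admin.
# Arguments: user name, tenant name, password, output file.
write_rc() {
    local user="$1" tenant="$2" pass="$3" out="$4"
    # In an unquoted here-document "\\" becomes a single backslash, so the
    # PS1 line ends in \$ exactly like the hand-written file.
    cat > "$out" <<EOF
export OS_USERNAME=${user}
export OS_TENANT_NAME=${tenant}
export OS_PASSWORD=${pass}
export OS_AUTH_URL=http://192.168.1.233:35357/v2.0/
export PS1='[\u@\h \W(keystone_${user})]\\$ '
EOF
}
```

Calling write_rc admin admin openstack /root/keystone_admin should reproduce the file shown above; the prompt suffix follows the user name, which is a choice made for this sketch.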

Create a Member role

Log in to the controller node again over ssh

source /root/keystone_admin

keystone role-create --name Member

Output:

+----------+----------------------------------+

| Property | Value |

+----------+----------------------------------+

| id | b0d8baf21db84d60ba666fc7c88b4e8c |

| name | Member |

+----------+----------------------------------+

Create tenanta, usera, tenantb, and userb; both users get the Member role

keystone user-create --name usera --pass openstack

keystone tenant-create --name tenanta

keystone user-role-add --user usera --role Member --tenant tenanta

keystone user-create --name userb --pass openstack

keystone tenant-create --name tenantb

keystone user-role-add --user userb --role Member --tenant tenantb
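The three commands repeat for every user and tenant pair, so they fit naturally into a function. This is only a sketch; create_member and the KEYSTONE variable are names introduced here. Setting KEYSTONE=echo prints the commands instead of running them.

```bash
#!/bin/bash
# Create a user and a tenant, then attach the Member role, using the same
# three keystone commands as above.
# Set KEYSTONE=echo for a dry run that only prints the arguments.
KEYSTONE="${KEYSTONE:-keystone}"

create_member() {
    local user="$1" tenant="$2" pass="$3"
    "$KEYSTONE" user-create --name "$user" --pass "$pass"
    "$KEYSTONE" tenant-create --name "$tenant"
    "$KEYSTONE" user-role-add --user "$user" --role Member --tenant "$tenant"
}
```

With /root/keystone_admin sourced, create_member usera tenanta openstack followed by create_member userb tenantb openstack would issue the same six commands.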

Check that the users were created

keystone user-list

Output:

+----------------------------------+-------+---------+-------+

| id | name | enabled | email |

+----------------------------------+-------+---------+-------+

| c2df4ce7c2db4bc38abbd19c7bb462ff | admin | True | |

| 38ef2a5c52ac4083a775442d1efba632 | usera | True | |

| f13e1e829b3e4151ad2465f32218871d | userb | True | |

+----------------------------------+-------+---------+-------+

Glance installation

Install the Glance packages

yum install -y openstack-glance openstack-utils python-kombu python-anyjson

Create the glance service and endpoint in Keystone

keystone service-create --name glance --type image --description "Glance Image Service"

Output:

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| description | Glance Image Service |

| id | 47677b41fd0d475790e345b30e9cc1b9 |

| name | glance |

| type | image |

+-------------+----------------------------------+

keystone endpoint-create --service glance --publicurl "http://192.168.1.233:9292" --adminurl "http://192.168.1.233:9292" --internalurl "http://192.168.1.233:9292" --region beijing

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| adminurl | http://192.168.1.233:9292 |

| id | bfeb1ee1e8cf475c9da0c4519b038cb5 |

| internalurl | http://192.168.1.233:9292 |

| publicurl | http://192.168.1.233:9292 |

| region | beijing |

| service_id | 47677b41fd0d475790e345b30e9cc1b9 |

+-------------+----------------------------------+

Set up the Glance configuration files

openstack-config --set /etc/glance/glance-api.conf DEFAULT sql_connection mysql://glance:glance@192.168.1.233/glance

openstack-config --set /etc/glance/glance-registry.conf DEFAULT sql_connection mysql://glance:glance@192.168.1.233/glance

openstack-config --set /etc/glance/glance-api.conf paste_deploy flavor keystone

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_host 192.168.1.233

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_port 35357

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken auth_protocol http

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken admin_tenant_name admin

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken admin_user admin

openstack-config --set /etc/glance/glance-api.conf keystone_authtoken admin_password openstack

openstack-config --set /etc/glance/glance-registry.conf paste_deploy flavor keystone

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_host 192.168.1.233

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_port 35357

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken auth_protocol http

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken admin_tenant_name admin

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken admin_user admin

openstack-config --set /etc/glance/glance-registry.conf keystone_authtoken admin_password openstack

openstack-config --set /etc/glance/glance-api.conf DEFAULT notifier_strategy noop;
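The keystone_authtoken settings are identical for glance-api.conf and glance-registry.conf, so they can be applied with one helper. A sketch only; set_authtoken and the OPENSTACK_CONFIG variable are names invented for this example, and the values are the ones used above.

```bash
#!/bin/bash
# Apply the same six keystone_authtoken settings to a config file.
# Set OPENSTACK_CONFIG=echo to preview the commands without running them.
OPENSTACK_CONFIG="${OPENSTACK_CONFIG:-openstack-config}"

set_authtoken() {
    local conf="$1" pair
    for pair in "auth_host 192.168.1.233" "auth_port 35357" "auth_protocol http" \
                "admin_tenant_name admin" "admin_user admin" "admin_password openstack"; do
        # Each pair is "key value": text before the first space is the key,
        # text after it is the value.
        "$OPENSTACK_CONFIG" --set "$conf" keystone_authtoken "${pair%% *}" "${pair#* }"
    done
}
```

Running set_authtoken /etc/glance/glance-api.conf and then set_authtoken /etc/glance/glance-registry.conf covers the twelve authtoken lines; the paste_deploy, sql_connection and notifier_strategy settings still need their own commands.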

Initialize the database

openstack-db --init --service glance --password glance --rootpw openstack;

Set the ownership of the Glance configuration, data, and log directories

chown -R glance:glance /etc/glance

chown -R glance:glance /var/lib/glance

chown -R glance:glance /var/log/glance

Enable the Glance services at boot

chkconfig openstack-glance-api on

chkconfig openstack-glance-registry on

Start the Glance services

service openstack-glance-api start

service openstack-glance-registry start

Check that Glance is working

source /root/keystone_admin

glance image-list

Register a Linux test image

First upload the cirros-0.3.1-x86_64-disk.img test image

glance image-create --name "cirros-0.3.1-x86_64" --disk-format qcow2 --container-format bare --is-public true --file cirros-0.3.1-x86_64-disk.img

Result:

+------------------+--------------------------------------+

| Property | Value |

+------------------+--------------------------------------+

| checksum | 50bdc35edb03a38d91b1b071afb20a3c |

| container_format | bare |

| created_at | 2013-09-06T12:58:20 |

| deleted | False |

| deleted_at | None |

| disk_format | qcow2 |

| id | c9bab82e-a3b4-4bd3-852a-ddb8338fc5bb |

| is_public | True |

| min_disk | 0 |

| min_ram | 0 |

| name | cirros-0.3.0-x86_64 |

| owner | 2b60fd75ce3947218208250a97e2d3f6 |

| protected | False |

| size | 9761280 |

| status | active |

| updated_at | 2013-09-06T12:58:20 |

+------------------+--------------------------------------+

Check that the image was registered

glance image-list

Result:

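+--------------------------------------+---------------------+-------------+------------------+-----------+

| ID                                   | Name                | Disk Format | Container Format | Size      |

+--------------------------------------+---------------------+-------------+------------------+-----------+

| 138c0f68-b40a-4778-a076-3eb2d1e025c9 | cirros-0.3.1-x86_64 | qcow2       | bare             | 237371392 |

+--------------------------------------+---------------------+-------------+------------------+-----------+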

Image files end up under /var/lib/glance/images

Cinder installation

Install the Cinder packages:

yum install openstack-cinder openstack-utils python-kombu python-amqplib

Create the cinder service and endpoint in Keystone

source /root/keystone_admin

keystone service-create --name cinder --type volume --description "Cinder Volume Service"

Result:

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| description | Cinder Volume Service |

| id | b221db9181164fe29bcceaccf234289b |

| name | cinder |

| type | volume |

+-------------+----------------------------------+

keystone endpoint-create --service-id cinder --publicurl "http://192.168.1.233:8776/v1/\$(tenant_id)s" --adminurl "http://192.168.1.233:8776/v1/\$(tenant_id)s" --internalurl "http://192.168.1.233:8776/v1/\$(tenant_id)s" --region beijing

Result:

+-------------+--------------------------------------------+

| Property | Value |

+-------------+--------------------------------------------+

| adminurl | http://192.168.1.233:8776/v1/$(tenant_id)s |

| id | a10f9512b9aa46ec9e7fce1702f156f0 |

| internalurl | http://192.168.1.233:8776/v1/$(tenant_id)s |

| publicurl | http://192.168.1.233:8776/v1/$(tenant_id)s |

| region | beijing |

| service_id | b221db9181164fe29bcceaccf234289b |

+-------------+--------------------------------------------+

Edit the Cinder configuration file. These settings point Cinder at Keystone, name the volume group it uses (vgstorage), and set the Cinder server's IP address

openstack-config --set /etc/cinder/cinder.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/cinder/cinder.conf DEFAULT sql_connection mysql://cinder:cinder@192.168.1.233/cinder

openstack-config --set /etc/cinder/cinder.conf DEFAULT volume_group vgstorage

openstack-config --set /etc/cinder/cinder.conf DEFAULT volume_driver cinder.volume.drivers.lvm.LVMISCSIDriver

openstack-config --set /etc/cinder/cinder.conf DEFAULT rpc_backend cinder.openstack.common.rpc.impl_kombu

openstack-config --set /etc/cinder/cinder.conf DEFAULT my_ip 192.168.1.233

openstack-config --set /etc/cinder/cinder.conf DEFAULT rabbit_host 192.168.1.233

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken auth_host 192.168.1.233

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken admin_tenant_name admin

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken admin_user admin

openstack-config --set /etc/cinder/cinder.conf keystone_authtoken admin_password openstack

Initialize the database

openstack-db --init --service cinder --password cinder --rootpw openstack;

Set the ownership of the configuration, data, and log directories

chown -R cinder:cinder /etc/cinder

chown -R cinder:cinder /var/lib/cinder

chown -R cinder:cinder /var/log/cinder

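Configure the iSCSI service to work with Cinder, so that logical volumes created by Cinder can be attached to virtual machines over iSCSI

vi /etc/tgt/targets.conf and add one line: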

include /etc/cinder/volumes/*

Enable the tgtd service at boot and start it

chkconfig tgtd on

service tgtd start

Enable the Cinder services at boot and start them

chkconfig openstack-cinder-api on

chkconfig openstack-cinder-scheduler on

chkconfig openstack-cinder-volume on

service openstack-cinder-api start

service openstack-cinder-scheduler start

service openstack-cinder-volume start

Verify with the cinder command

cinder list   # list the current block storage volumes

cinder create --display-name=vol-1G 1   # create a 1 GB volume

lvdisplay

Once the volume has been attached to a VM, you can inspect the target with:

tgtadm --lld iscsi --op show --mode target

Swift installation

Install the packages

yum install xfsprogs openstack-swift-proxy openstack-swift-object openstack-swift-container openstack-swift-account openstack-utils memcached

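Preparation

Note: in a real production environment, this VG should be different from the one Cinder uses above; create a separate VG.

Prepare a dedicated logical volume or disk; here the logical volume is assumed to be /dev/vgstorage/lvswift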

lvcreate --size 5G --name lvswift vgstorage

mkfs.xfs -f -i size=1024 /dev/vgstorage/lvswift

mkdir /mnt/sdb1

mount /dev/vgstorage/lvswift /mnt/sdb1

vi /etc/fstab

Add the following line:

/dev/vgstorage/lvswift /mnt/sdb1 xfs defaults 0 0
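When this step is scripted, it helps to append the entry only if it is not already present, so that a second run does not duplicate the mount. A minimal sketch; add_fstab_entry is a hypothetical helper name.

```bash
#!/bin/bash
# Append a line to an fstab-style file only if that exact line is not
# already there.
add_fstab_entry() {
    local file="$1" entry="$2"
    # -x matches whole lines, -F treats the entry as a literal string.
    grep -qxF -- "$entry" "$file" 2>/dev/null || printf '%s\n' "$entry" >> "$file"
}
```

For this guide the call would be add_fstab_entry /etc/fstab "/dev/vgstorage/lvswift /mnt/sdb1 xfs defaults 0 0". The comparison is exact, so an entry for the same device written with different spacing would not be detected.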

mkdir /mnt/sdb1/1 /mnt/sdb1/2 /mnt/sdb1/3 /mnt/sdb1/4

for x in {1..4}; do ln -s /mnt/sdb1/$x /srv/$x; done

mkdir -p /etc/swift/object-server /etc/swift/container-server /etc/swift/account-server /srv/1/node/sdb1 /srv/2/node/sdb2 /srv/3/node/sdb3 /srv/4/node/sdb4 /var/run/swift

chown -R swift:swift /etc/swift/ /srv/ /var/run/swift/ /mnt/sdb1

Configure the service and endpoint address in Keystone

keystone service-create --name swift --type object-store --description "Swift Storage Service"

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| description | Swift Storage Service |

| id | 0974b03758e74c188ab6503df4e1ac80 |

| name | swift |

| type | object-store |

+-------------+----------------------------------+

keystone endpoint-create --service swift --publicurl "http://192.168.1.233:8080/v1/AUTH_%(tenant_id)s" --adminurl "http://192.168.1.233:8080/v1/AUTH_%(tenant_id)s" --internalurl "http://192.168.1.233:8080/v1/AUTH_%(tenant_id)s" --region beijing

+-------------+-------------------------------------------------+

| Property | Value |

+-------------+-------------------------------------------------+

| adminurl | http://192.168.1.233:8080/v1/AUTH_%(tenant_id)s |

| id | 08308f2c5a4f409b95a550b09cca686a |

| internalurl | http://192.168.1.233:8080/v1/AUTH_%(tenant_id)s |

| publicurl | http://192.168.1.233:8080/v1/AUTH_%(tenant_id)s |

| region | beijing |

| service_id | 0974b03758e74c188ab6503df4e1ac80 |

+-------------+-------------------------------------------------+

Edit /etc/xinetd.d/rsync and set

disable = no

Create /etc/rsyncd.conf with the following contents

uid = swift

gid = swift

log file = /var/log/rsyncd.log

pid file = /var/run/rsyncd.pid

address = 127.0.0.1

[account6012]

max connections = 25

path = /srv/1/node/

read only = false

lock file = /var/lock/account6012.lock

[account6022]

max connections = 25

path = /srv/2/node/

read only = false

lock file = /var/lock/account6022.lock

[account6032]

max connections = 25

path = /srv/3/node/

read only = false

lock file = /var/lock/account6032.lock

[account6042]

max connections = 25

path = /srv/4/node/

read only = false

lock file = /var/lock/account6042.lock

[container6011]

max connections = 25

path = /srv/1/node/

read only = false

lock file = /var/lock/container6011.lock

[container6021]

max connections = 25

path = /srv/2/node/

read only = false

lock file = /var/lock/container6021.lock

[container6031]

max connections = 25

path = /srv/3/node/

read only = false

lock file = /var/lock/container6031.lock

[container6041]

max connections = 25

path = /srv/4/node/

read only = false

lock file = /var/lock/container6041.lock

[object6010]

max connections = 25

path = /srv/1/node/

read only = false

lock file = /var/lock/object6010.lock

[object6020]

max connections = 25

path = /srv/2/node/

read only = false

lock file = /var/lock/object6020.lock

[object6030]

max connections = 25

path = /srv/3/node/

read only = false

lock file = /var/lock/object6030.lock

[object6040]

max connections = 25

path = /srv/4/node/

read only = false

lock file = /var/lock/object6040.lock
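The twelve module sections above follow a fixed pattern, so they can be generated instead of typed. The sketch below prints only the module sections (the global uid, gid, log file, pid file and address lines are not included); gen_rsync_modules is a name made up for this example.

```bash
#!/bin/bash
# Print the twelve rsync module sections shown above.
# Port pattern: 60<N>2 for account, 60<N>1 for container, 60<N>0 for object,
# where N (1 to 4) also selects the /srv/<N>/node directory.
gen_rsync_modules() {
    local kind name digit n
    for kind in account:2 container:1 object:0; do
        name="${kind%%:*}"    # text before the colon
        digit="${kind#*:}"    # text after the colon
        for n in 1 2 3 4; do
            cat <<EOF
[${name}60${n}${digit}]
max connections = 25
path = /srv/${n}/node/
read only = false
lock file = /var/lock/${name}60${n}${digit}.lock

EOF
        done
    done
}
```

Redirecting the output with >> /etc/rsyncd.conf after writing the global lines by hand would give the same file as above.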

Create /etc/rsyslog.d/10-swift.conf with the following contents

# Uncomment the following to have a log containing all logs together

#local1,local2,local3,local4,local5.* /var/log/swift/all.log

# Uncomment the following to have hourly proxy logs for stats processing

#$template HourlyProxyLog,"/var/log/swift/hourly/%$YEAR%%$MONTH%%$DAY%%$HOUR%"

#local1.*;local1.!notice ?HourlyProxyLog

local1.*;local1.!notice /var/log/swift/proxy.log

local1.notice /var/log/swift/proxy.error

local1.* ~

local2.*;local2.!notice /var/log/swift/storage1.log

local2.notice /var/log/swift/storage1.error

local2.* ~

local3.*;local3.!notice /var/log/swift/storage2.log

local3.notice /var/log/swift/storage2.error

local3.* ~

local4.*;local4.!notice /var/log/swift/storage3.log

local4.notice /var/log/swift/storage3.error

local4.* ~

local5.*;local5.!notice /var/log/swift/storage4.log

local5.notice /var/log/swift/storage4.error

local5.* ~

Edit /etc/rsyslog.conf and add one line

$PrivDropToGroup adm

Restart the rsyslog service

mkdir -p /var/log/swift/hourly

chmod -R g+w /var/log/swift

service rsyslog restart

Back up some configuration files

mkdir /etc/swift/bak

mv /etc/swift/account-server.conf /etc/swift/bak

mv /etc/swift/container-server.conf /etc/swift/bak

mv /etc/swift/object-expirer.conf /etc/swift/bak

mv /etc/swift/object-server.conf /etc/swift/bak

Edit the /etc/swift/proxy-server.conf configuration file

openstack-config --set /etc/swift/proxy-server.conf filter:authtoken admin_tenant_name admin

openstack-config --set /etc/swift/proxy-server.conf filter:authtoken admin_user admin

openstack-config --set /etc/swift/proxy-server.conf filter:authtoken admin_password openstack

openstack-config --set /etc/swift/proxy-server.conf filter:authtoken auth_host 192.168.1.233

openstack-config --set /etc/swift/proxy-server.conf filter:keystone operator_roles admin,SwiftOperator,Member

Set a random value for the Swift hash path suffix

openstack-config --set /etc/swift/swift.conf swift-hash swift_hash_path_suffix $(openssl rand -hex 10)

In the /etc/swift/account-server directory, create four configuration files, 1.conf, 2.conf, 3.conf and 4.conf, with the following contents:

======Contents of 1.conf in the account-server directory======

[DEFAULT]

devices = /srv/1/node

mount_check = false

disable_fallocate = true

bind_port = 6012

user = swift

log_facility = LOG_LOCAL2

recon_cache_path = /var/cache/swift

eventlet_debug = true

[pipeline:main]

pipeline = recon account-server

[app:account-server]

use = egg:swift#account

[filter:recon]

use = egg:swift#recon

[account-replicator]

vm_test_mode = yes

[account-auditor]

[account-reaper]

======Contents of 2.conf in the account-server directory======

[DEFAULT]

devices = /srv/2/node

mount_check = false

disable_fallocate = true

bind_port = 6022

user = swift

log_facility = LOG_LOCAL3

recon_cache_path = /var/cache/swift2

eventlet_debug = true

[pipeline:main]

pipeline = recon account-server

[app:account-server]

use = egg:swift#account

[filter:recon]

use = egg:swift#recon

[account-replicator]

vm_test_mode = yes

[account-auditor]

[account-reaper]

======Contents of 3.conf in the account-server directory======

[DEFAULT]

devices = /srv/3/node

mount_check = false

disable_fallocate = true

bind_port = 6032

user = swift

log_facility = LOG_LOCAL4

recon_cache_path = /var/cache/swift3

eventlet_debug = true

[pipeline:main]

pipeline = recon account-server

[app:account-server]

use = egg:swift#account

[filter:recon]

use = egg:swift#recon

[account-replicator]

vm_test_mode = yes

[account-auditor]

[account-reaper]

======Contents of 4.conf in the account-server directory======

[DEFAULT]

devices = /srv/4/node

mount_check = false

disable_fallocate = true

bind_port = 6042

user = swift

log_facility = LOG_LOCAL5

recon_cache_path = /var/cache/swift4

eventlet_debug = true

[pipeline:main]

pipeline = recon account-server

[app:account-server]

use = egg:swift#account

[filter:recon]

use = egg:swift#recon

[account-replicator]

vm_test_mode = yes

[account-auditor]

[account-reaper]
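The four account-server files differ only in the node number, port, log facility and cache path, so a loop can write them. This is a sketch that mirrors the listings above; gen_account_confs is a hypothetical name.

```bash
#!/bin/bash
# Write 1.conf to 4.conf for the account server into the given directory,
# following the four listings above.
gen_account_confs() {
    local outdir="$1" n cache
    for n in 1 2 3 4; do
        # The first file uses /var/cache/swift; the others append the number.
        if [ "$n" -eq 1 ]; then cache=/var/cache/swift; else cache="/var/cache/swift${n}"; fi
        cat > "${outdir}/${n}.conf" <<EOF
[DEFAULT]
devices = /srv/${n}/node
mount_check = false
disable_fallocate = true
bind_port = 60${n}2
user = swift
log_facility = LOG_LOCAL$((n + 1))
recon_cache_path = ${cache}
eventlet_debug = true

[pipeline:main]
pipeline = recon account-server

[app:account-server]
use = egg:swift#account

[filter:recon]
use = egg:swift#recon

[account-replicator]
vm_test_mode = yes

[account-auditor]

[account-reaper]
EOF
    done
}
```

Usage would be gen_account_confs /etc/swift/account-server. The container and object files follow the same idea with a last port digit of 1 and 0 respectively and their own section names, so they need separate templates.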

In the container-server directory, create four files, 1.conf, 2.conf, 3.conf and 4.conf, with the following contents.

cd /etc/swift/container-server

======Contents of 1.conf in the container-server directory======

[DEFAULT]

devices = /srv/1/node

mount_check = false

disable_fallocate = true

bind_port = 6011

user = swift

log_facility = LOG_LOCAL2

recon_cache_path = /var/cache/swift

eventlet_debug = true

[pipeline:main]

pipeline = recon container-server

[app:container-server]

use = egg:swift#container

[filter:recon]

use = egg:swift#recon

[container-replicator]

vm_test_mode = yes

[container-updater]

[container-auditor]

[container-sync]

======Contents of 2.conf in the container-server directory======

[DEFAULT]

devices = /srv/2/node

mount_check = false

disable_fallocate = true

bind_port = 6021

user = swift

log_facility = LOG_LOCAL3

recon_cache_path = /var/cache/swift2

eventlet_debug = true

[pipeline:main]

pipeline = recon container-server

[app:container-server]

use = egg:swift#container

[filter:recon]

use = egg:swift#recon

[container-replicator]

vm_test_mode = yes

[container-updater]

[container-auditor]

[container-sync]

======Contents of 3.conf in the container-server directory======

[DEFAULT]

devices = /srv/3/node

mount_check = false

disable_fallocate = true

bind_port = 6031

user = swift

log_facility = LOG_LOCAL4

recon_cache_path = /var/cache/swift3

eventlet_debug = true

[pipeline:main]

pipeline = recon container-server

[app:container-server]

use = egg:swift#container

[filter:recon]

use = egg:swift#recon

[container-replicator]

vm_test_mode = yes

[container-updater]

[container-auditor]

[container-sync]

======Contents of 4.conf in the container-server directory======

[DEFAULT]

devices = /srv/4/node

mount_check = false

disable_fallocate = true

bind_port = 6041

user = swift

log_facility = LOG_LOCAL5

recon_cache_path = /var/cache/swift4

eventlet_debug = true

[pipeline:main]

pipeline = recon container-server

[app:container-server]

use = egg:swift#container

[filter:recon]

use = egg:swift#recon

[container-replicator]

vm_test_mode = yes

[container-updater]

[container-auditor]

[container-sync]

In the object-server directory, create four files, 1.conf, 2.conf, 3.conf and 4.conf.

cd /etc/swift/object-server

The contents of the four files are as follows.

======Contents of 1.conf in the object-server directory======

[DEFAULT]

devices = /srv/1/node

mount_check = false

disable_fallocate = true

bind_port = 6010

user = swift

log_facility = LOG_LOCAL2

recon_cache_path = /var/cache/swift

eventlet_debug = true

[pipeline:main]

pipeline = recon object-server

[app:object-server]

use = egg:swift#object

[filter:recon]

use = egg:swift#recon

[object-replicator]

vm_test_mode = yes

[object-updater]

[object-auditor]

======Contents of 2.conf in the object-server directory======

[DEFAULT]

devices = /srv/2/node

mount_check = false

disable_fallocate = true

bind_port = 6020

user = swift

log_facility = LOG_LOCAL3

recon_cache_path = /var/cache/swift2

eventlet_debug = true

[pipeline:main]

pipeline = recon object-server

[app:object-server]

use = egg:swift#object

[filter:recon]

use = egg:swift#recon

[object-replicator]

vm_test_mode = yes

[object-updater]

[object-auditor]

======Contents of 3.conf in the object-server directory======

[DEFAULT]

devices = /srv/3/node

mount_check = false

disable_fallocate = true

bind_port = 6030

user = swift

log_facility = LOG_LOCAL4

recon_cache_path = /var/cache/swift3

eventlet_debug = true

[pipeline:main]

pipeline = recon object-server

[app:object-server]

use = egg:swift#object

[filter:recon]

use = egg:swift#recon

[object-replicator]

vm_test_mode = yes

[object-updater]

[object-auditor]

======Contents of 4.conf in the object-server directory======

[DEFAULT]

devices = /srv/4/node

mount_check = false

disable_fallocate = true

bind_port = 6040

user = swift

log_facility = LOG_LOCAL5

recon_cache_path = /var/cache/swift4

eventlet_debug = true

[pipeline:main]

pipeline = recon object-server

[app:object-server]

use = egg:swift#object

[filter:recon]

use = egg:swift#recon

[object-replicator]

vm_test_mode = yes

[object-updater]

[object-auditor]

Create /root/remakering.sh with the following contents:

#!/bin/bash

cd /etc/swift

rm -f *.builder *.ring.gz backups/*.builder backups/*.ring.gz

swift-ring-builder object.builder create 10 3 1

swift-ring-builder object.builder add r1z1-192.168.1.233:6010/sdb1 1

swift-ring-builder object.builder add r1z2-192.168.1.233:6020/sdb2 1

swift-ring-builder object.builder add r1z3-192.168.1.233:6030/sdb3 1

swift-ring-builder object.builder add r1z4-192.168.1.233:6040/sdb4 1

swift-ring-builder object.builder rebalance

swift-ring-builder container.builder create 10 3 1

swift-ring-builder container.builder add r1z1-192.168.1.233:6011/sdb1 1

swift-ring-builder container.builder add r1z2-192.168.1.233:6021/sdb2 1

swift-ring-builder container.builder add r1z3-192.168.1.233:6031/sdb3 1

swift-ring-builder container.builder add r1z4-192.168.1.233:6041/sdb4 1

swift-ring-builder container.builder rebalance

swift-ring-builder account.builder create 10 3 1

swift-ring-builder account.builder add r1z1-192.168.1.233:6012/sdb1 1

swift-ring-builder account.builder add r1z2-192.168.1.233:6022/sdb2 1

swift-ring-builder account.builder add r1z3-192.168.1.233:6032/sdb3 1

swift-ring-builder account.builder add r1z4-192.168.1.233:6042/sdb4 1

swift-ring-builder account.builder rebalance

chmod 755 /root/remakering.sh

/root/remakering.sh
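The three rings in remakering.sh are built the same way and differ only in the last port digit. In create 10 3 1 the arguments are the partition power, the replica count and the minimum hours between partition moves. A sketch of the shared pattern follows; build_ring and SWIFT_RING_BUILDER are names introduced for illustration.

```bash
#!/bin/bash
# Build one ring with four devices on a single host.
# Arguments: ring name, last port digit (0 object, 1 container, 2 account),
# host IP address.
# Set SWIFT_RING_BUILDER=echo to preview the commands without running them.
SWIFT_RING_BUILDER="${SWIFT_RING_BUILDER:-swift-ring-builder}"

build_ring() {
    local ring="$1" digit="$2" ip="$3" n
    "$SWIFT_RING_BUILDER" "${ring}.builder" create 10 3 1
    for n in 1 2 3 4; do
        # Zone n, port 60<n><digit>, device sdb<n>, weight 1.
        "$SWIFT_RING_BUILDER" "${ring}.builder" add "r1z${n}-${ip}:60${n}${digit}/sdb${n}" 1
    done
    "$SWIFT_RING_BUILDER" "${ring}.builder" rebalance
}
```

Run from /etc/swift, the calls build_ring object 0 192.168.1.233, build_ring container 1 192.168.1.233 and build_ring account 2 192.168.1.233 correspond to the three groups of commands in the script; the cd and rm lines at the top of the script are not covered.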

Set the ownership of the related directories

chown -R swift:swift /srv/

chown -R swift:swift /etc/swift

chown -R swift:swift /mnt/sdb1

Start memcached

/etc/init.d/memcached start

chkconfig memcached on

Start Swift (Swift is not configured to start at boot)

swift-init all start

Enable Swift debug logging (optional)

Add the following two lines to the [DEFAULT] section of proxy-server.conf

log_facility = LOG_LOCAL1

log_level = DEBUG

Testing

Create a container

swift post testcontainer

Upload a file

swift upload testcontainer test.txt

List the top level (the containers)

swift list

List the files in testcontainer

swift list testcontainer

Horizon Dashboard installation

Import the key

cd /var/www/html/epel-depends

rpm --import RPM-GPG-KEY-EPEL-6

Install the dashboard packages

yum install -y mod_wsgi httpd mod_ssl memcached python-memcached openstack-dashboard

Edit the dashboard configuration file /etc/openstack-dashboard/local_settings

Comment out the following lines

#CACHES = {

# 'default': {

# 'BACKEND' : 'django.core.cache.backends.locmem.LocMemCache'

# }

#}

Uncomment the following lines

CACHES = {

'default': {

'BACKEND' : 'django.core.cache.backends.memcached.MemcachedCache',

'LOCATION' : '127.0.0.1:11211',

}

}

Change the following lines

ALLOWED_HOSTS = ['*']

OPENSTACK_HOST = "192.168.1.233"

Set the directory ownership.

chown -R apache:apache /etc/openstack-dashboard/ /var/lib/openstack-dashboard/;

Enable the httpd and memcached services at boot and start them

chkconfig httpd on

chkconfig memcached on

service httpd start

service memcached start

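Network node installation (192.168.1.231)

Firewall configuration

Edit /etc/sysconfig/iptables and delete all of the firewall rules in the filter table.

Restart the firewall: /etc/init.d/iptables restart

Configure the yum repositories

Copy epel-depends.repo, icehouse.repo, rabbitmq.repo and rhel65.repo into /etc/yum.repos.d/

Import the keys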

rpm --import http://192.168.1.233/epel-depends/RPM-GPG-KEY-EPEL-6

rpm --import http://192.168.1.233/rhel65/RPM-GPG-KEY-redhat-release

rpm --import http://192.168.1.233/rabbitmq/rabbitmq-signing-key-public.asc

rpm --import http://192.168.1.233/rdo-icehouse-b3/RPM-GPG-KEY-RDO-Icehouse

Add the eth1 NIC with the following configuration

cat /etc/sysconfig/network-scripts/ifcfg-eth1

DEVICE=eth1

TYPE=Ethernet

ONBOOT=yes

NM_CONTROLLED=no

BOOTPROTO=static

Configure Open vSwitch

chkconfig openvswitch on

service openvswitch start

ovs-vsctl add-br br-int   # create the default bridge device

Upgrade the iproute and dnsmasq packages

yum install -y iproute dnsmasq dnsmasq-utils

Create the database and the Keystone account and service

Switch to the controller node and create the Neutron database, named neutron

mysql -u root -popenstack

CREATE DATABASE neutron;

GRANT ALL ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'neutron';

GRANT ALL ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'neutron';

FLUSH PRIVILEGES;
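The same four statements apply to any service database, so they can be produced from a name and a password. A sketch only, assuming the database name and the MySQL account name are identical as they are for neutron here; service_db_sql is a made-up helper and does no escaping of its arguments.

```bash
#!/bin/bash
# Print the SQL that creates a service database and grants access to a
# MySQL account of the same name, both remotely and from localhost.
# Arguments: database/user name, password.
service_db_sql() {
    local name="$1" pass="$2"
    cat <<EOF
CREATE DATABASE ${name};
GRANT ALL ON ${name}.* TO '${name}'@'%' IDENTIFIED BY '${pass}';
GRANT ALL ON ${name}.* TO '${name}'@'localhost' IDENTIFIED BY '${pass}';
FLUSH PRIVILEGES;
EOF
}
```

The output can be piped straight into the client, for example service_db_sql neutron neutron | mysql -u root -popenstack.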

On the controller node, add the neutron service and endpoint URL in Keystone

keystone service-create --name neutron --type network --description "Neutron Networking Service"

The output is as follows:

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| description | Neutron Networking Service |

| id | 58125867309c4c12a62802aa7785ab6f |

| name | neutron |

| type | network |

+-------------+----------------------------------+

keystone endpoint-create --service neutron --publicurl "http://192.168.1.231:9696" --adminurl "http://192.168.1.231:9696" --internalurl "http://192.168.1.231:9696" --region beijing

The output is as follows:

+-------------+----------------------------------+

| Property | Value |

+-------------+----------------------------------+

| adminurl | http://192.168.1.231:9696 |

| id | fd30746eeb9e4f99becea8a0d74e366c |

| internalurl | http://192.168.1.231:9696 |

| publicurl | http://192.168.1.231:9696 |

| region | beijing |

| service_id | 58125867309c4c12a62802aa7785ab6f |

+-------------+----------------------------------+
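
The service ID printed by service-create reappears as service_id in the endpoint output, so it can be handy to capture such values in a script rather than copy them by hand. The following is a minimal sketch, assuming the client prints the two-column "| Property | Value |" table shown above; the helper name table_field is hypothetical and not part of any OpenStack tool.

```shell
# Print the Value column for one property of a "| Property | Value |" table
# read from stdin. Border lines (no "|") are skipped because NF < 3.
table_field() {
  awk -F'|' -v key="$1" '
    NF >= 3 {
      k = $2; v = $3
      gsub(/^ +| +$/, "", k)   # trim padding around the property name
      gsub(/^ +| +$/, "", v)   # trim padding around the value
      if (k == key) print v
    }'
}

# Hypothetical usage on the controller node:
# SERVICE_ID=$(keystone service-create --name neutron --type network \
#   --description "Neutron Networking Service" | table_field id)
```

The parsing depends only on the table layout, so the same helper should also work for the neutron and nova tables later in this post that use a two-column layout.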

Install the Neutron packages

On the network node, install the Neutron packages

yum install openstack-neutron python-kombu python-amqplib python-pyudev python-stevedore openstack-utils openstack-neutron-openvswitch openvswitch -y

Configure neutron server

Edit the neutron server configuration file

openstack-config --set /etc/neutron/neutron.conf DEFAULT auth_strategy keystone

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken auth_host 192.168.1.233

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken admin_tenant_name admin

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken admin_user admin

openstack-config --set /etc/neutron/neutron.conf keystone_authtoken admin_password openstack

openstack-config --set /etc/neutron/neutron.conf DEFAULT rpc_backend neutron.openstack.common.rpc.impl_kombu

openstack-config --set /etc/neutron/neutron.conf DEFAULT rabbit_host 192.168.1.233

openstack-config --set /etc/neutron/neutron.conf DEFAULT core_plugin neutron.plugins.openvswitch.ovs_neutron_plugin.OVSNeutronPluginV2

openstack-config --set /etc/neutron/neutron.conf DEFAULT control_exchange neutron

openstack-config --set /etc/neutron/neutron.conf database connection mysql://neutron:neutron@192.168.1.233/neutron

openstack-config --set /etc/neutron/neutron.conf DEFAULT allow_overlapping_ips True

Enable the neutron-server service at boot

chkconfig neutron-server on

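Configure the neutron openvswitch agent

This experiment uses Open vSwitch as the plugin that provides network services, so plugin.ini must be linked to the plugin's configuration file and then edited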

ln -s /etc/neutron/plugins/openvswitch/ovs_neutron_plugin.ini /etc/neutron/plugin.ini -f

Edit the plugin.ini file

openstack-config --set /etc/neutron/plugin.ini OVS tenant_network_type gre

openstack-config --set /etc/neutron/plugin.ini OVS tunnel_id_ranges 1:1000

openstack-config --set /etc/neutron/plugin.ini OVS enable_tunneling True

openstack-config --set /etc/neutron/plugin.ini OVS local_ip 192.168.1.231

openstack-config --set /etc/neutron/plugin.ini OVS integration_bridge br-int

openstack-config --set /etc/neutron/plugin.ini OVS tunnel_bridge br-tun

openstack-config --set /etc/neutron/plugin.ini SECURITYGROUP firewall_driver neutron.agent.linux.iptables_firewall.OVSHybridIptablesFirewallDriver

Enable neutron-openvswitch-agent at boot

chkconfig neutron-openvswitch-agent on

Configure the neutron DHCP agent

Edit the DHCP agent configuration file /etc/neutron/dhcp_agent.ini

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT interface_driver neutron.agent.linux.interface.OVSInterfaceDriver

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT dhcp_driver neutron.agent.linux.dhcp.Dnsmasq

openstack-config --set /etc/neutron/dhcp_agent.ini DEFAULT use_namespaces True

Enable the DHCP agent at boot

chkconfig neutron-dhcp-agent on

Configure the neutron L3 agent

First add the bridge br-ex, which connects to the external network, and assign it an IP address

ovs-vsctl add-br br-ex

ovs-vsctl add-port br-ex eth1  # eth0 is the internal network, eth1 is the external network

ip addr add 192.168.100.231/24 dev br-ex

ip link set br-ex up

echo "ip addr add 192.168.100.231/24 dev br-ex" >> /etc/rc.local

Update the /etc/neutron/l3_agent.ini file

openstack-config --set /etc/neutron/l3_agent.ini DEFAULT interface_driver neutron.agent.linux.interface.OVSInterfaceDriver

openstack-config --set /etc/neutron/l3_agent.ini DEFAULT use_namespaces True

openstack-config --set /etc/neutron/l3_agent.ini DEFAULT external_network_bridge br-ex

openstack-config --set /etc/neutron/l3_agent.ini DEFAULT enable_metadata_proxy True;
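
A misspelled option name is written to the file without any complaint and the agent then ignores it, so reading the values back can catch mistakes early. The following is a minimal sketch of a read-back helper in plain awk; the function name ini_get is hypothetical, and it assumes simple "key = value" lines under "[SECTION]" headers with no continuation lines.

```shell
# ini_get FILE SECTION KEY: print the value of KEY inside [SECTION].
ini_get() {
  awk -v section="[$2]" -v key="$3" '
    /^\[/ { in_section = ($0 == section); next }   # track the current section
    in_section {
      eq = index($0, "=")
      if (eq == 0) next                            # not a key = value line
      k = substr($0, 1, eq - 1)
      v = substr($0, eq + 1)
      gsub(/^[ \t]+|[ \t]+$/, "", k)
      gsub(/^[ \t]+|[ \t]+$/, "", v)
      if (k == key) print v
    }' "$1"
}

# Hypothetical usage on the network node:
# ini_get /etc/neutron/l3_agent.ini DEFAULT external_network_bridge
```

An empty result means the key is absent from that section or is commented out, which is a hint to recheck the spelling of the option.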

Enable the L3 agent service at boot

chkconfig neutron-l3-agent on

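Configure the neutron metadata service

The neutron-metadata-agent service must run on the same server as the L3 agent; it lets VMs interact with the nova metadata service.

Edit the /etc/neutron/metadata_agent.ini configuration file.

First comment out the auth_region line

Then set the remaining options with the following commands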

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT auth_region beijing

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT auth_url http://192.168.1.233:35357/v2.0

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT admin_tenant_name admin

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT admin_user admin

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT admin_password openstack

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT nova_metadata_ip 192.168.1.232

openstack-config --set /etc/neutron/metadata_agent.ini DEFAULT metadata_proxy_shared_secret awcloud

Enable the neutron-metadata service at boot, and start it

chkconfig neutron-metadata-agent on

service neutron-metadata-agent start

Initialize the database

neutron-db-manage --config-file /usr/share/neutron/neutron-dist.conf --config-file /etc/neutron/neutron.conf --config-file /etc/neutron/plugin.ini upgrade head;

Fix the ownership

chown -R neutron:neutron /etc/neutron

Check the Neutron services

The network node runs five main services: neutron-server, neutron-openvswitch-agent, neutron-dhcp-agent, neutron-l3-agent and neutron-metadata-agent.

chkconfig --list|grep neutron|grep 3:on

neutron-dhcp-agent 0:off 1:off 2:on 3:on 4:on 5:on 6:off

neutron-l3-agent 0:off 1:off 2:on 3:on 4:on 5:on 6:off

neutron-metadata-agent 0:off 1:off 2:on 3:on 4:on 5:on 6:off

neutron-openvswitch-agent 0:off 1:off 2:on 3:on 4:on 5:on 6:off

neutron-server 0:off 1:off 2:on 3:on 4:on 5:on 6:off
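
Comparing this listing by eye against the five expected services is error-prone. The following is a minimal sketch that reports which expected services are not enabled at runlevel 3, assuming the `chkconfig --list` column layout shown above; the function name missing_services is hypothetical.

```shell
# Read `chkconfig --list` output on stdin and print every service named in
# the arguments that is NOT marked "3:on".
missing_services() {
  enabled=$(awk '$5 == "3:on" { print $1 }')
  for svc in "$@"; do
    printf '%s\n' "$enabled" | grep -qxF -- "$svc" || echo "$svc"
  done
}

# Hypothetical usage on the network node:
# chkconfig --list | missing_services neutron-server neutron-openvswitch-agent \
#   neutron-dhcp-agent neutron-l3-agent neutron-metadata-agent
```

No output means all listed services are enabled at boot. It says nothing about whether they are currently running, which `service NAME status` reports.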

Log files are stored under /var/log/neutron/; if a service fails to start, the logs provide more debugging information.

Create the environment file for KeyStone operations

vi /root/keystone_admin

export OS_USERNAME=admin

export OS_TENANT_NAME=admin

export OS_PASSWORD=openstack

export OS_AUTH_URL=http://192.168.1.233:35357/v2.0/

export PS1='[\u@\h \W(keystone_admin)]\$ '

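Load the variables with source /root/keystone_admin, then run neutron net-list; if it returns without an error, the setup is working

Restart the neutron services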

service neutron-openvswitch-agent restart

service neutron-dhcp-agent restart

service neutron-l3-agent restart

service neutron-metadata-agent restart

service neutron-server restart

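Installing the Compute node (192.168.1.232)

Firewall configuration

Edit /etc/sysconfig/iptables and delete all the firewall rules that were added to the filter table.

Restart the firewall: /etc/init.d/iptables restart

Configure the yum repositories

Copy the epel-depends.repo, icehouse.repo, rabbitmq.repo and rhel65.repo files to the /etc/yum.repos.d/ directory

Import the keys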

rpm --import http://192.168.1.233/epel-depends/RPM-GPG-KEY-EPEL-6

rpm --import http://192.168.1.233/rhel65/RPM-GPG-KEY-redhat-release

rpm --import http://192.168.1.233/rabbitmq/rabbitmq-signing-key-public.asc

rpm --import http://192.168.1.233/rdo-icehouse-b3/RPM-GPG-KEY-RDO-Icehouse

Configure Open vSwitch

chkconfig openvswitch on

service openvswitch start

ovs-vsctl add-br br-int  # create the default bridge device

Upgrade the iproute and dnsmasq packages

yum update -y iproute dnsmasq dnsmasq-utils

Install Nova

On the compute node, install the Nova packages

yum install -y openstack-nova openstack-utils python-kombu python-amqplib openstack-neutron-openvswitch dnsmasq-utils python-stevedore

Switch to the controller node and create the Nova database

mysql -u root -popenstack

CREATE DATABASE nova;

GRANT ALL ON nova.* TO 'nova'@'%' IDENTIFIED BY 'nova';

GRANT ALL ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'nova';

FLUSH PRIVILEGES;

On the controller node, restart the database service

service mysqld restart

On the controller node, create the compute service and endpoint in Keystone

keystone service-create --name compute --type compute --description "OpenStack Compute Service"

keystone endpoint-create --service compute --publicurl "http://192.168.1.232:8774/v2/%(tenant_id)s" --adminurl "http://192.168.1.232:8774/v2/%(tenant_id)s" --internalurl "http://192.168.1.232:8774/v2/%(tenant_id)s" --region beijing

On the compute node, edit the Nova configuration file

openstack-config --set /etc/nova/nova.conf database connection mysql://nova:nova@192.168.1.233/nova;

openstack-config --set /etc/nova/nova.conf DEFAULT rabbit_host 192.168.1.233;

openstack-config --set /etc/nova/nova.conf DEFAULT my_ip 192.168.1.232;

openstack-config --set /etc/nova/nova.conf DEFAULT vncserver_listen 0.0.0.0;

openstack-config --set /etc/nova/nova.conf DEFAULT vnc_enabled True

openstack-config --set /etc/nova/nova.conf DEFAULT vncserver_proxyclient_address 192.168.1.232;

openstack-config --set /etc/nova/nova.conf DEFAULT novncproxy_base_url http://192.168.1.232:6080/vnc_auto.html;

openstack-config --set /etc/nova/nova.conf DEFAULT auth_strategy keystone;

openstack-config --set /etc/nova/nova.conf DEFAULT rpc_backend nova.openstack.common.rpc.impl_kombu;

openstack-config --set /etc/nova/nova.conf DEFAULT glance_host 192.168.1.233;

openstack-config --set /etc/nova/nova.conf DEFAULT api_paste_config /etc/nova/api-paste.ini;

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_host 192.168.1.233;

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_port 5000;

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_protocol http;

openstack-config --set /etc/nova/nova.conf keystone_authtoken auth_version v2.0;

openstack-config --set /etc/nova/nova.conf keystone_authtoken admin_user admin;

openstack-config --set /etc/nova/nova.conf keystone_authtoken admin_tenant_name admin;

openstack-config --set /etc/nova/nova.conf keystone_authtoken admin_password openstack;

openstack-config --set /etc/nova/nova.conf DEFAULT enabled_apis ec2,osapi_compute,metadata;

openstack-config --set /etc/nova/nova.conf DEFAULT firewall_driver nova.virt.firewall.NoopFirewallDriver;

openstack-config --set /etc/nova/nova.conf DEFAULT network_manager nova.network.neutron.manager.NeutronManager;

openstack-config --set /etc/nova/nova.conf DEFAULT service_neutron_metadata_proxy True;

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_metadata_proxy_shared_secret awcloud;

openstack-config --set /etc/nova/nova.conf DEFAULT network_api_class nova.network.neutronv2.api.API;

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_use_dhcp True;

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_url http://192.168.1.231:9696;

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_admin_username admin;

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_admin_password openstack;

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_admin_tenant_name admin;

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_region_name beijing;

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_admin_auth_url http://192.168.1.233:5000/v2.0;

openstack-config --set /etc/nova/nova.conf DEFAULT neutron_auth_strategy keystone;

openstack-config --set /etc/nova/nova.conf DEFAULT security_group_api neutron;

openstack-config --set /etc/nova/nova.conf DEFAULT linuxnet_interface_driver nova.network.linux_net.LinuxOVSInterfaceDriver;

openstack-config --set /etc/nova/nova.conf libvirt vif_driver nova.virt.libvirt.vif.LibvirtGenericVIFDriver;

If the machine's CPU does not support hardware virtualization, edit /etc/nova/nova.conf:

Change virt_type=kvm to virt_type=qemu
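
Whether the CPU exposes hardware virtualization can be read from the flags in /proc/cpuinfo: vmx for Intel VT-x and svm for AMD-V. The following is a minimal sketch that chooses between kvm and qemu on that basis; the function name pick_virt_type is hypothetical, and placing virt_type in the [libvirt] section of nova.conf is an assumption based on the vif_driver command above.

```shell
# Print "kvm" if the given cpuinfo file lists the vmx or svm flag,
# otherwise "qemu". Defaults to /proc/cpuinfo when no file is given.
pick_virt_type() {
  if grep -Eqw 'vmx|svm' "${1:-/proc/cpuinfo}"; then
    echo kvm
  else
    echo qemu
  fi
}

# Hypothetical usage on the compute node:
# openstack-config --set /etc/nova/nova.conf libvirt virt_type "$(pick_virt_type)"
```

Inside a virtual machine the flag only appears when the hypervisor passes VT through to the guest, so a compute node that is itself a VM will often end up with qemu.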

On the compute node, synchronize the nova database

nova-manage db sync

On the compute node, enable the nova services at boot. Note that the nova-conductor service must be started before the other nova services.

chkconfig openstack-nova-consoleauth on

chkconfig openstack-nova-api on

chkconfig openstack-nova-scheduler on

chkconfig openstack-nova-conductor on

chkconfig openstack-nova-compute on

chkconfig openstack-nova-novncproxy on

Configure neutron

Copy the /etc/neutron directory from the network node to the compute node

scp -r 192.168.1.231:/etc/neutron /etc

Edit /etc/neutron/plugins/openvswitch/ovs_neutron_plugin.ini and set local_ip to the compute node's address:

local_ip = 192.168.1.232

ln -s /etc/neutron/plugins/openvswitch/ovs_neutron_plugin.ini /etc/neutron/plugin.ini -f

chown -R neutron:neutron /etc/neutron/*

chkconfig neutron-openvswitch-agent on

service neutron-openvswitch-agent start

Restart the nova services

service neutron-openvswitch-agent restart

service openstack-nova-conductor restart

service openstack-nova-api restart

service openstack-nova-scheduler restart

service openstack-nova-compute restart

service openstack-nova-consoleauth restart

service openstack-nova-novncproxy restart

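Experiment

Network architecture diagram

Experiment environment

There are two tenants, TenantA and TenantB. Each tenant has one user: usera and userb respectively.

TenantA (usera) owns one network TenantA-Net, one subnet 10.0.0.0/24, and one router TenantA-R1.

TenantB (userb) owns two networks TenantB-Net1 and TenantB-Net2, two subnets 10.0.0.0/24 and 10.0.1.0/24, and one router TenantB-R1.

TenantA (usera) creates two VMs, TenantA_VM1 and TenantA_VM2, whose private addresses are in the 10.0.0.0/24 range;

TenantB (userb) creates two VMs, TenantB_VM1 and TenantB_VM2, whose private addresses are in the 10.0.0.0/24 range;

Although TenantA and TenantB both use the 10.0.0.0/24 network, they do not conflict, because each tenant network is isolated in its own GRE segment and allow_overlapping_ips is enabled.

Experiment steps

Make sure the clocks on all three servers are in sync.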
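
One rough way to check clock agreement is to collect the epoch time from each node and look at the spread. The following is a minimal sketch, assuming input lines of the form "host epoch"; the function name max_clock_skew and the SSH loop in the comment are hypothetical, and the loop additionally assumes passwordless root SSH between the nodes.

```shell
# Read "host epoch_seconds" lines on stdin and print the largest difference
# in seconds between any two hosts.
max_clock_skew() {
  awk 'NR == 1      { min = $2 + 0; max = $2 + 0 }
       $2 + 0 < min { min = $2 + 0 }
       $2 + 0 > max { max = $2 + 0 }
       END          { print max - min }'
}

# Hypothetical usage from the controller node:
# for h in 192.168.1.233 192.168.1.232 192.168.1.231; do
#   echo "$h $(ssh "root@$h" date +%s)"
# done | max_clock_skew
```

Because the SSH calls run one after another, a result of a second or two may just be measurement delay. Larger values suggest the clocks really differ.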

Create the external network Ext-Net

On the network node, create a file named keystone_admin in the /root directory with the following content:

more /root/keystone_admin

export OS_USERNAME=admin

export OS_TENANT_NAME=admin

export OS_PASSWORD=openstack

export OS_AUTH_URL=http://192.168.1.233:35357/v2.0/

export PS1='[\u@\h \W(keystone_admin)]\$ '

neutron net-create Ext-Net --provider:network_type local --router:external true

The result is as follows:

+---------------------------+--------------------------------------+

| Field | Value |

+---------------------------+--------------------------------------+

| admin_state_up | True |

| id | 9648359c-6bae-4dad-a25d-9e6805f0d5f3 |

| name | Ext-Net |

| provider:network_type | local |

| provider:physical_network | |

| provider:segmentation_id | |

| router:external | True |

| shared | False |

| status | ACTIVE |

| subnets | |

| tenant_id | a85bdecd1f164f91a4cc0470ec7fc28e |

+---------------------------+--------------------------------------+

Create the subnet 192.168.100.0/24 for the external network

neutron subnet-create Ext-Net 192.168.100.0/24

The result is as follows:

+------------------+------------------------------------------------------+

| Field | Value |

+------------------+------------------------------------------------------+

| allocation_pools | {"start": "192.168.100.2", "end": "192.168.100.254"} |

| cidr | 192.168.100.0/24 |

| dns_nameservers | |

| enable_dhcp | True |

| gateway_ip | 192.168.100.1 |

| host_routes | |

| id | 7d6b3fc8-ba36-4ef0-bd64-725738b45a57 |

| ip_version | 4 |

| name | |

| network_id | 9648359c-6bae-4dad-a25d-9e6805f0d5f3 |

| tenant_id | a85bdecd1f164f91a4cc0470ec7fc28e |

+------------------+------------------------------------------------------+

TenantA creates a network, TenantA-Net

neutron --os-tenant-name tenanta --os-username usera --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 net-create TenantA-Net

The output is as follows:

+-----------------+--------------------------------------+

| Field | Value |

+-----------------+--------------------------------------+

| admin_state_up | True |

| id | d67af83f-69c6-404c-ad07-15c490152c9c |

| name | TenantA-Net |

| router:external | False |

| shared | False |

| status | ACTIVE |

| subnets | |

| tenant_id | 6e5cbe8692564c138725b5574bd52e1f |

+-----------------+--------------------------------------+

neutron net-show TenantA-Net  # show the details of TenantA-Net

+---------------------------+--------------------------------------+

| Field | Value |

+---------------------------+--------------------------------------+

| admin_state_up | True |

| id | d67af83f-69c6-404c-ad07-15c490152c9c |

| name | TenantA-Net |

| provider:network_type | gre |

| provider:physical_network | |

| provider:segmentation_id | 1 |

| router:external | False |

| shared | False |

| status | ACTIVE |

| subnets | |

| tenant_id | 6e5cbe8692564c138725b5574bd52e1f |

+---------------------------+--------------------------------------+

TenantA creates a subnet, 10.0.0.0/24

neutron --os-tenant-name tenanta --os-username usera --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 subnet-create TenantA-Net 10.0.0.0/24

The output is as follows:

+------------------+--------------------------------------------+

| Field | Value |

+------------------+--------------------------------------------+

| allocation_pools | {"start": "10.0.0.2", "end": "10.0.0.254"} |

| cidr | 10.0.0.0/24 |

| dns_nameservers | |

| enable_dhcp | True |

| gateway_ip | 10.0.0.1 |

| host_routes | |

| id | f972a035-0954-493b-adb3-c3d279bbcc3f |

| ip_version | 4 |

| name | |

| network_id | d67af83f-69c6-404c-ad07-15c490152c9c |

| tenant_id | 6e5cbe8692564c138725b5574bd52e1f |

+------------------+--------------------------------------------+

TenantA creates a VM, TenantA_VM1

The nova commands that create VMs must be run on the compute node.

In the /root directory, create a file named keystone_admin with the following content:

more /root/keystone_admin

export OS_USERNAME=admin

export OS_TENANT_NAME=admin

export OS_PASSWORD=openstack

export OS_AUTH_URL=http://192.168.1.233:35357/v2.0/

export PS1='[\u@\h \W(keystone_admin)]\$ '

Remember to replace the image ID and the net-id of the NIC with the values from your own environment

source /root/keystone_admin

nova --os-tenant-name tenanta --os-username usera --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 boot --image 138c0f68-b40a-4778-a076-3eb2d1e025c9 --flavor 1 --nic net-id=d67af83f-69c6-404c-ad07-15c490152c9c TenantA_VM1

The output is as follows:

+-----------------------------+--------------------------------------+

| Property | Value |

+-----------------------------+--------------------------------------+

| status | BUILD |

| updated | 2013-07-17T14:32:52Z |

| OS-EXT-STS:task_state | scheduling |

| key_name | None |

| image | cirros-0.3.0-x86_64 |

| hostId | |

| OS-EXT-STS:vm_state | building |

| flavor | m1.tiny |

| id | 0fa0f84f-2099-411d-acb5-1108c1cee565 |

| security_groups | [{u'name': u'default'}] |

| user_id | 38ef2a5c52ac4083a775442d1efba632 |

| name | TenantA_VM1 |

| adminPass | 8SZVWsUeqmhy |

| tenant_id | 6e5cbe8692564c138725b5574bd52e1f |

| created | 2013-07-17T14:32:52Z |

| OS-DCF:diskConfig | MANUAL |

| metadata | {} |

| accessIPv4 | |

| accessIPv6 | |

| progress | 0 |

| OS-EXT-STS:power_state | 0 |

| OS-EXT-AZ:availability_zone | nova |

| config_drive | |

+-----------------------------+--------------------------------------+

Check whether the VM was created successfully

nova list --all-tenants

or

nova --os-tenant-name tenanta --os-username usera --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 list

+--------------------------------------+-------------+--------+----------------------+

| ID | Name | Status | Networks |

+--------------------------------------+-------------+--------+----------------------+

| 0fa0f84f-2099-411d-acb5-1108c1cee565 | TenantA_VM1 | ACTIVE | TenantA-Net=10.0.0.2 |

+--------------------------------------+-------------+--------+----------------------+

TenantA creates a router, TenantA-R1

neutron --os-tenant-name tenanta --os-username usera --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 router-create TenantA-R1

The output is as follows:

Created a new router:

+-----------------------+--------------------------------------+

| Field | Value |

+-----------------------+--------------------------------------+

| admin_state_up | True |

| external_gateway_info | |

| id | f1a1dad9-671a-4fef-88d5-5faec11e9081 |

| name | TenantA-R1 |

| status | ACTIVE |

| tenant_id | 6e5cbe8692564c138725b5574bd52e1f |

+-----------------------+--------------------------------------+

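TenantA attaches the router to subnet 10.0.0.0/24

Attach TenantA's subnet 10.0.0.0/24 (on TenantA-Net, subnet ID f972a035-0954-493b-adb3-c3d279bbcc3f) to the router.

Look up the subnet ID:

neutron subnet-list

In the command below, replace the ID with the ID of the TenantA-Net subnet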

neutron --os-tenant-name tenanta --os-username usera --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 router-interface-add TenantA-R1 f972a035-0954-493b-adb3-c3d279bbcc3f

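TenantA connects the router to the external network

Set the gateway to link TenantA-R1 with the external network Ext-Net.

neutron router-gateway-set TenantA-R1 Ext-Net  # run as admin, because the external network was created by and belongs to the admin user

The output is as follows: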

Set gateway for router TenantA-R1

TenantA binds a floating IP to TenantA_VM1

TenantA requests a floating IP

neutron --os-tenant-name tenanta --os-username usera --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 floatingip-create Ext-Net

Created a new floatingip:

+---------------------+--------------------------------------+

| Field | Value |

+---------------------+--------------------------------------+

| fixed_ip_address | |

| floating_ip_address | 192.168.100.3 |

| floating_network_id | 9648359c-6bae-4dad-a25d-9e6805f0d5f3 |

| id | 8740bc59-343d-4f95-88f7-dfd0029fbed5 |

| port_id | |

| router_id | |

| tenant_id | 6e5cbe8692564c138725b5574bd52e1f |

+---------------------+--------------------------------------+

Bind the floating IP to TenantA_VM1

Use nova list to find the VM's ID, then use that ID to find the corresponding port ID.

nova --os-tenant-name tenanta --os-username usera --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 list

+--------------------------------------+-------------+--------+----------------------+

| ID | Name | Status | Networks |

+--------------------------------------+-------------+--------+----------------------+

| 0fa0f84f-2099-411d-acb5-1108c1cee565 | TenantA_VM1 | ACTIVE | TenantA-Net=10.0.0.2 |

+--------------------------------------+-------------+--------+----------------------+

neutron --os-tenant-name tenanta --os-username usera --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 port-list --device_id 0fa0f84f-2099-411d-acb5-1108c1cee565

+--------------------------------------+------+-------------------+---------------------------------------------------------------------------------+

| id | name | mac_address | fixed_ips |

+--------------------------------------+------+-------------------+---------------------------------------------------------------------------------+

| 9d1e6ff3-1e52-45c9-9baf-e9b789747f7a | | fa:16:3e:7b:79:fd | {"subnet_id": "f972a035-0954-493b-adb3-c3d279bbcc3f", "ip_address": "10.0.0.2"} |

+--------------------------------------+------+-------------------+---------------------------------------------------------------------------------+

Associate floating IP 192.168.100.3 with the VM at 10.0.0.2

neutron --os-tenant-name tenanta --os-username usera --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 floatingip-associate 8740bc59-343d-4f95-88f7-dfd0029fbed5 9d1e6ff3-1e52-45c9-9baf-e9b789747f7a

Associated floatingip 8740bc59-343d-4f95-88f7-dfd0029fbed5

TenantA creates a second VM, TenantA_VM2

Run the nova command on the compute node to create the new VM.

nova --os-tenant-name tenanta --os-username usera --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 boot --image 138c0f68-b40a-4778-a076-3eb2d1e025c9 --flavor 1 --nic net-id=d67af83f-69c6-404c-ad07-15c490152c9c TenantA_VM2

+-----------------------------+--------------------------------------+

| Property | Value |

+-----------------------------+--------------------------------------+

| status | BUILD |

| updated | 2013-07-18T12:58:13Z |

| OS-EXT-STS:task_state | scheduling |

| key_name | None |

| image | cirros-0.3.0-x86_64 |

| hostId | |

| OS-EXT-STS:vm_state | building |

| flavor | m1.tiny |

| id | 5086b6b2-6ac9-491a-872e-02e2957475f6 |

| security_groups | [{u'name': u'default'}] |

| user_id | 38ef2a5c52ac4083a775442d1efba632 |

| name | TenantA_VM2 |

| adminPass | 7kT4pfUtieuR |

| tenant_id | 6e5cbe8692564c138725b5574bd52e1f |

| created | 2013-07-18T12:58:13Z |

| OS-DCF:diskConfig | MANUAL |

| metadata | {} |

| accessIPv4 | |

| accessIPv6 | |

| progress | 0 |

| OS-EXT-STS:power_state | 0 |

| OS-EXT-AZ:availability_zone | nova |

| config_drive | |

+-----------------------------+--------------------------------------+

TenantA requests a second floating IP

neutron --os-tenant-name tenanta --os-username usera --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 floatingip-create Ext-Net

Created a new floatingip:

+---------------------+--------------------------------------+

| Field | Value |

+---------------------+--------------------------------------+

| fixed_ip_address | |

| floating_ip_address | 192.168.100.5 |

| floating_network_id | 9648359c-6bae-4dad-a25d-9e6805f0d5f3 |

| id | 96376ff9-8cb0-464d-9ebe-f084d8c5be8f |

| port_id | |

| router_id | |

| tenant_id | 6e5cbe8692564c138725b5574bd52e1f |

+---------------------+--------------------------------------+

TenantA binds a floating IP to TenantA_VM2

Bind floating IP 192.168.100.5 to the second VM, TenantA_VM2.

First find the port ID that corresponds to IP 10.0.0.4:

neutron --os-tenant-name tenanta --os-username usera --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 port-list

+--------------------------------------+------+-------------------+---------------------------------------------------------------------------------+

| id | name | mac_address | fixed_ips |

+--------------------------------------+------+-------------------+---------------------------------------------------------------------------------+

| 7d839d6b-6da9-459f-9cb3-de64b56f91e0 | | fa:16:3e:1e:37:5e | {"subnet_id": "f972a035-0954-493b-adb3-c3d279bbcc3f", "ip_address": "10.0.0.4"} |

| 9d1e6ff3-1e52-45c9-9baf-e9b789747f7a | | fa:16:3e:7b:79:fd | {"subnet_id": "f972a035-0954-493b-adb3-c3d279bbcc3f", "ip_address": "10.0.0.2"} |

| a8e7c0db-59a2-4e38-82db-1ae01bb0c89f | | fa:16:3e:c9:d6:18 | {"subnet_id": "f972a035-0954-493b-adb3-c3d279bbcc3f", "ip_address": "10.0.0.1"} |

| c6047f08-63c5-42e8-8b08-480ea0a6ab78 | | fa:16:3e:0b:90:bd | {"subnet_id": "f972a035-0954-493b-adb3-c3d279bbcc3f", "ip_address": "10.0.0.3"} |

+--------------------------------------+------+-------------------+---------------------------------------------------------------------------------+
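
Picking the right row out of a longer port list by eye is easy to get wrong. The following is a minimal sketch that selects the port ID for one fixed IP, assuming the port-list table layout shown above; the function name port_id_for_ip is hypothetical.

```shell
# Read `neutron port-list` output on stdin and print the id of the port
# whose fixed_ips column contains exactly the given IP address.
port_id_for_ip() {
  awk -F'|' -v ip="$1" '
    index($0, "\"ip_address\": \"" ip "\"") {
      id = $2
      gsub(/ /, "", id)   # strip the padding around the id column
      print id
    }'
}

# Hypothetical usage, with the same credentials as the command above:
# PORT_ID=$(neutron --os-tenant-name tenanta --os-username usera \
#   --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 \
#   port-list | port_id_for_ip 10.0.0.4)
```

The closing quote is part of the match, so 10.0.0.4 does not also select 10.0.0.40.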

Bind 192.168.100.5 to 10.0.0.4:

neutron --os-tenant-name tenanta --os-username usera --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 floatingip-associate 96376ff9-8cb0-464d-9ebe-f084d8c5be8f 7d839d6b-6da9-459f-9cb3-de64b56f91e0

Associated floatingip 96376ff9-8cb0-464d-9ebe-f084d8c5be8f

TenantB creates its first subnet

TenantB creates its first network, TenantB-Net1

neutron --os-tenant-name tenantb --os-username userb --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 net-create TenantB-Net1

Created a new network:

+-----------------+--------------------------------------+

| Field | Value |

+-----------------+--------------------------------------+

| admin_state_up | True |

| id | 877c6f61-3761-4f36-8d35-35c44715d51f |

| name | TenantB-Net1 |

| router:external | False |

| shared | False |

| status | ACTIVE |

| subnets | |

| tenant_id | 0740fb46cd614359ab384ee9763df1f7 |

+-----------------+--------------------------------------+

TenantB creates its first subnet, 10.0.0.0/24

neutron --os-tenant-name tenantb --os-username userb --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 subnet-create TenantB-Net1 10.0.0.0/24 --name TenantB-Subnet1

Created a new subnet:

+------------------+--------------------------------------------+

| Field | Value |

+------------------+--------------------------------------------+

| allocation_pools | {"start": "10.0.0.2", "end": "10.0.0.254"} |

| cidr | 10.0.0.0/24 |

| dns_nameservers | |

| enable_dhcp | True |

| gateway_ip | 10.0.0.1 |

| host_routes | |

| id | a7b580e5-e6da-49d8-894d-f96f69e7c3d1 |

| ip_version | 4 |

| name | TenantB-Subnet1 |

| network_id | 877c6f61-3761-4f36-8d35-35c44715d51f |

| tenant_id | 0740fb46cd614359ab384ee9763df1f7 |

+------------------+--------------------------------------------+

TenantB creates a second network, TenantB-Net2

neutron --os-tenant-name tenantb --os-username userb --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 net-create TenantB-Net2

Created a new network:

+-----------------+--------------------------------------+

| Field | Value |

+-----------------+--------------------------------------+

| admin_state_up | True |

| id | 3bb71f46-9c75-4653-aa87-9a9c189ea37a |

| name | TenantB-Net2 |

| router:external | False |

| shared | False |

| status | ACTIVE |

| subnets | |

| tenant_id | 0740fb46cd614359ab384ee9763df1f7 |

+-----------------+--------------------------------------+

TenantB creates a second subnet, 10.0.1.0/24

neutron --os-tenant-name tenantb --os-username userb --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 subnet-create TenantB-Net2 10.0.1.0/24 --name TenantB-Subnet2

Created a new subnet:

+------------------+--------------------------------------------+

| Field | Value |

+------------------+--------------------------------------------+

| allocation_pools | {"start": "10.0.1.2", "end": "10.0.1.254"} |

| cidr | 10.0.1.0/24 |

| dns_nameservers | |

| enable_dhcp | True |

| gateway_ip | 10.0.1.1 |

| host_routes | |

| id | 3f66a482-42ef-475a-92ac-b7224d4d725b |

| ip_version | 4 |

| name | TenantB-Subnet2 |

| network_id | 3bb71f46-9c75-4653-aa87-9a9c189ea37a |

| tenant_id | 0740fb46cd614359ab384ee9763df1f7 |

+------------------+--------------------------------------------+

TenantB creates the VM TenantB_VM1

Note that all nova commands are run on the compute node.

First look up the network ID of TenantB-Net1:

neutron net-show TenantB-Net1

+---------------------------+--------------------------------------+

| Field | Value |

+---------------------------+--------------------------------------+

| admin_state_up | True |

| id | 877c6f61-3761-4f36-8d35-35c44715d51f |

| name | TenantB-Net1 |

| provider:network_type | gre |

| provider:physical_network | |

| provider:segmentation_id | 2 |

| router:external | False |

| shared | False |

| status | ACTIVE |

| subnets | a7b580e5-e6da-49d8-894d-f96f69e7c3d1 |

| tenant_id | 0740fb46cd614359ab384ee9763df1f7 |

+---------------------------+--------------------------------------+

Then boot a VM on the compute node:

nova --os-tenant-name tenantb --os-username userb --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 boot --image 138c0f68-b40a-4778-a076-3eb2d1e025c9 --flavor 1 --nic net-id=877c6f61-3761-4f36-8d35-35c44715d51f TenantB_VM1

+-----------------------------+--------------------------------------+

| Property | Value |

+-----------------------------+--------------------------------------+

| status | BUILD |

| updated | 2013-07-18T13:24:23Z |

| OS-EXT-STS:task_state | scheduling |

| key_name | None |

| image | cirros-0.3.0-x86_64 |

| hostId | |

| OS-EXT-STS:vm_state | building |

| flavor | m1.tiny |

| id | 5ed1b956-a963-4e0a-890a-cba821c16aa8 |

| security_groups | [{u'name': u'default'}] |

| user_id | f13e1e829b3e4151ad2465f32218871d |

| name | TenantB_VM1 |

| adminPass | CavM6uWvjw7X |

| tenant_id | 0740fb46cd614359ab384ee9763df1f7 |

| created | 2013-07-18T13:24:23Z |

| OS-DCF:diskConfig | MANUAL |

| metadata | {} |

| accessIPv4 | |

| accessIPv6 | |

| progress | 0 |

| OS-EXT-STS:power_state | 0 |

| OS-EXT-AZ:availability_zone | nova |

| config_drive | |

+-----------------------------+--------------------------------------+

Associate the router with the two subnets

TenantB creates a router, TenantB-R1

neutron --os-tenant-name tenantb --os-username userb --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 router-create TenantB-R1

Created a new router:

+-----------------------+--------------------------------------+

| Field | Value |

+-----------------------+--------------------------------------+

| admin_state_up | True |

| external_gateway_info | |

| id | eaee5194-1363-4106-b961-ab97412cb9ea |

| name | TenantB-R1 |

| status | ACTIVE |

| tenant_id | 0740fb46cd614359ab384ee9763df1f7 |

+-----------------------+--------------------------------------+

TenantB attaches the router to the two subnets

First look up the subnet IDs

neutron --os-tenant-name tenantb --os-username userb --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 subnet-list

+--------------------------------------+-----------------+-------------+--------------------------------------------+

| id | name | cidr | allocation_pools |

+--------------------------------------+-----------------+-------------+--------------------------------------------+

| 3f66a482-42ef-475a-92ac-b7224d4d725b | TenantB-Subnet2 | 10.0.1.0/24 | {"start": "10.0.1.2", "end": "10.0.1.254"} |

| a7b580e5-e6da-49d8-894d-f96f69e7c3d1 | TenantB-Subnet1 | 10.0.0.0/24 | {"start": "10.0.0.2", "end": "10.0.0.254"} |

+--------------------------------------+-----------------+-------------+--------------------------------------------+

Attach subnet 10.0.0.0/24

neutron --os-tenant-name tenantb --os-username userb --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 router-interface-add TenantB-R1 a7b580e5-e6da-49d8-894d-f96f69e7c3d1

Added interface to router TenantB-R1

Attach subnet 10.0.1.0/24

neutron --os-tenant-name tenantb --os-username userb --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 router-interface-add TenantB-R1 3f66a482-42ef-475a-92ac-b7224d4d725b

Added interface to router TenantB-R1
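Copying each subnet ID by hand works for two subnets but gets tedious with more. As a rough sketch, the table printed by `subnet-list` can be parsed with awk to generate one `router-interface-add` command per subnet. The helper names `subnet_ids` and `print_interface_cmds` are hypothetical, and the script only prints the commands (a dry run) so they can be reviewed before being executed. The printed commands omit the `--os-*` flags; add them, or supply the credentials through the clients' `OS_*` environment variables.

```shell
# Hypothetical helper: read `neutron subnet-list` table output on stdin
# and print the id column of every data row.
subnet_ids() {
    awk -F'[|]' 'NF >= 3 {
        id = $2
        gsub(/^[ \t]+|[ \t]+$/, "", id)       # trim surrounding blanks
        if (id != "" && id != "id") print id  # skip the header row
    }'
}

# Hypothetical helper: dry run that prints one router-interface-add
# command per subnet id read from stdin. $1 is the router name.
print_interface_cmds() {
    router="$1"
    subnet_ids | while read -r id; do
        echo "neutron router-interface-add $router $id"
    done
}

# Intended usage (not executed here):
#   neutron subnet-list | print_interface_cmds TenantB-R1
```

Border lines such as `+----+----+` contain no `|` characters, so awk sees a single field and skips them.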

Tenant TenantB connects the router to the external network

neutron router-gateway-set TenantB-R1 Ext-Net

Output:

Set gateway for router TenantB-R1

Tenant TenantB allocates a floating IP

neutron --os-tenant-name tenantb --os-username userb --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 floatingip-create Ext-Net

Created a new floatingip:

+---------------------+--------------------------------------+

| Field | Value |

+---------------------+--------------------------------------+

| fixed_ip_address | |

| floating_ip_address | 192.168.100.7 |

| floating_network_id | 9648359c-6bae-4dad-a25d-9e6805f0d5f3 |

| id | 029c7691-f050-461f-921b-38954d570b6b |

| port_id | |

| router_id | |

| tenant_id | 0740fb46cd614359ab384ee9763df1f7 |

+---------------------+--------------------------------------+
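The `id` in this output is needed later, when the floating IP is associated with a port. A minimal sketch for pulling a single value out of a Field/Value table like the one above follows; `table_field` is a hypothetical helper name, and it assumes the two-column layout shown here.

```shell
# Hypothetical helper: read a Field/Value table on stdin and print the
# value whose field name equals $1.
table_field() {
    awk -F'[|]' -v field="$1" 'NF >= 3 {
        k = $2; v = $3
        gsub(/^[ \t]+|[ \t]+$/, "", k)   # trim the field name
        gsub(/^[ \t]+|[ \t]+$/, "", v)   # trim the value
        if (k == field) print v
    }'
}

# Intended usage (not executed here):
#   fip_id=$(neutron floatingip-create Ext-Net | table_field id)
```

Fields with an empty value, such as `port_id` before association, print an empty line.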

Tenant TenantB binds the floating IP to TenantB_VM1

Get the ID of the virtual machine's port:

neutron --os-tenant-name tenantb --os-username userb --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 port-list

+--------------------------------------+------+-------------------+---------------------------------------------------------------------------------+

| id | name | mac_address | fixed_ips |

+--------------------------------------+------+-------------------+---------------------------------------------------------------------------------+

| 7b1678d7-4cf3-476f-b85c-738ecf8420fc | | fa:16:3e:d2:cd:e6 | {"subnet_id": "3f66a482-42ef-475a-92ac-b7224d4d725b", "ip_address": "10.0.1.1"} |

| aec9ead5-83ae-4bb2-a1c9-501b008670c8 | | fa:16:3e:93:67:7b | {"subnet_id": "a7b580e5-e6da-49d8-894d-f96f69e7c3d1", "ip_address": "10.0.0.2"} |

| f9cf7890-7bc3-4950-9f4f-659ebf57ac8c | | fa:16:3e:8e:07:aa | {"subnet_id": "a7b580e5-e6da-49d8-894d-f96f69e7c3d1", "ip_address": "10.0.0.3"} |

+--------------------------------------+------+-------------------+---------------------------------------------------------------------------------+

Find the port ID that corresponds to 10.0.0.2, then associate the floating IP with it

neutron --os-tenant-name tenantb --os-username userb --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 floatingip-associate 029c7691-f050-461f-921b-38954d570b6b aec9ead5-83ae-4bb2-a1c9-501b008670c8
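Finding the right row in the port list by eye is error-prone once several ports exist. The sketch below looks up the port ID for a given fixed IP from `port-list` output. `port_id_for_ip` is a hypothetical helper name, and it assumes the table layout shown above, with the port ID in the first column and the address inside the `fixed_ips` column.

```shell
# Hypothetical helper: read `neutron port-list` table output on stdin and
# print the id of the port whose fixed_ips column contains the IP in $1.
port_id_for_ip() {
    awk -F'[|]' -v ip="$1" 'NF >= 3 {
        # Match the quoted address literally, so 10.0.0.2 does not
        # also match 10.0.0.25.
        needle = "\"ip_address\": \"" ip "\""
        if (index($0, needle) > 0) {
            id = $2
            gsub(/^[ \t]+|[ \t]+$/, "", id)
            print id
        }
    }'
}

# Intended usage (not executed here):
#   port_id=$(neutron port-list | port_id_for_ip 10.0.0.2)
#   neutron floatingip-associate <floating-ip-id> "$port_id"
```

If no port carries the address, the function prints nothing, which is worth checking for before calling `floatingip-associate`.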

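Tenant TenantB creates the virtual machine TenantB_VM2

TenantB boots a second virtual machine, again attached to TenantB-Net1, that is, the 10.0.0.0/24 subnet. Run the nova command on the compute node.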

nova --os-tenant-name tenantb --os-username userb --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 boot --image 138c0f68-b40a-4778-a076-3eb2d1e025c9 --flavor 1 --nic net-id=877c6f61-3761-4f36-8d35-35c44715d51f TenantB_VM2

+-----------------------------+--------------------------------------+

| Property | Value |

+-----------------------------+--------------------------------------+

| status | BUILD |

| updated | 2013-07-18T13:55:17Z |

| OS-EXT-STS:task_state | scheduling |

| key_name | None |

| image | cirros-0.3.0-x86_64 |

| hostId | |

| OS-EXT-STS:vm_state | building |

| flavor | m1.tiny |

| id | f34e3130-00eb-4cc7-89bf-66e93d54b522 |

| security_groups | [{u'name': u'default'}] |

| user_id | f13e1e829b3e4151ad2465f32218871d |

| name | TenantB_VM2 |

| adminPass | VvqVVaBw9i6s |

| tenant_id | 0740fb46cd614359ab384ee9763df1f7 |

| created | 2013-07-18T13:55:17Z |

| OS-DCF:diskConfig | MANUAL |

| metadata | {} |

| accessIPv4 | |

| accessIPv6 | |

| progress | 0 |

| OS-EXT-STS:power_state | 0 |

| OS-EXT-AZ:availability_zone | nova |

| config_drive | |

+-----------------------------+--------------------------------------+

Tenant TenantB allocates a second floating IP

neutron --os-tenant-name tenantb --os-username userb --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 floatingip-create Ext-Net

Created a new floatingip:

+---------------------+--------------------------------------+

| Field | Value |

+---------------------+--------------------------------------+

| fixed_ip_address | |

| floating_ip_address | 192.168.100.8 |

| floating_network_id | 9648359c-6bae-4dad-a25d-9e6805f0d5f3 |

| id | 0e6259f5-bb51-46bb-8839-f5e499b6619a |

| port_id | |

| router_id | |

| tenant_id | 0740fb46cd614359ab384ee9763df1f7 |

+---------------------+--------------------------------------+

Tenant TenantB binds the floating IP to TenantB_VM2

neutron --os-tenant-name tenantb --os-username userb --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 port-list

+--------------------------------------+------+-------------------+---------------------------------------------------------------------------------+

| id | name | mac_address | fixed_ips |

+--------------------------------------+------+-------------------+---------------------------------------------------------------------------------+

| 58b70cfb-45fa-4387-8633-39f03ad3690e | | fa:16:3e:12:ff:3a | {"subnet_id": "a7b580e5-e6da-49d8-894d-f96f69e7c3d1", "ip_address": "10.0.0.1"} |

| 7b1678d7-4cf3-476f-b85c-738ecf8420fc | | fa:16:3e:d2:cd:e6 | {"subnet_id": "3f66a482-42ef-475a-92ac-b7224d4d725b", "ip_address": "10.0.1.1"} |

| aec9ead5-83ae-4bb2-a1c9-501b008670c8 | | fa:16:3e:93:67:7b | {"subnet_id": "a7b580e5-e6da-49d8-894d-f96f69e7c3d1", "ip_address": "10.0.0.2"} |

| eb5f0941-ae2d-4d64-b573-023310c7fa2d | | fa:16:3e:6d:a9:56 | {"subnet_id": "a7b580e5-e6da-49d8-894d-f96f69e7c3d1", "ip_address": "10.0.0.4"} |

| f9cf7890-7bc3-4950-9f4f-659ebf57ac8c | | fa:16:3e:8e:07:aa | {"subnet_id": "a7b580e5-e6da-49d8-894d-f96f69e7c3d1", "ip_address": "10.0.0.3"} |

+--------------------------------------+------+-------------------+---------------------------------------------------------------------------------+

neutron --os-tenant-name tenantb --os-username userb --os-password openstack --os-auth-url=http://192.168.1.233:5000/v2.0 floatingip-associate 0e6259f5-bb51-46bb-8839-f5e499b6619a eb5f0941-ae2d-4d64-b573-023310c7fa2d

Output:

Associated floatingip 0e6259f5-bb51-46bb-8839-f5e499b6619a

Attachment: OpenStack_Icehouse_for_RHEL65.zip (291.45 KB)
