bob007 posted on 2015-5-1 10:00: Solved. The problem was in the /etc/hosts file. Thanks!

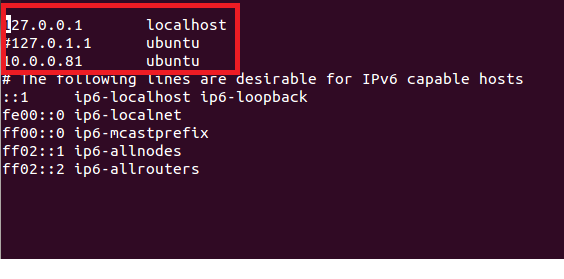

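hapjin posted on 2015-5-1 08:43: Make sure all the daemons are running and that there are no zombie processes. Fix: restart Hadoop, then check the hosts file and make sure the 127.0.1.1 entry is commented out. For more possible causes, see the thread "Running wordcount on hadoop2.7".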
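The hosts check suggested above can be sketched in Python. This is only an illustration: the function name `loopback_mappings` and the sample file contents are made up for the example, and the hostname `controller` is taken from the DataNode log in this thread. It reports any uncommented line that maps the given hostname to a 127.x.x.x address.

```python
def loopback_mappings(hosts_text, hostname):
    """Return (line_number, line) pairs where hostname maps to a 127.x.x.x address."""
    hits = []
    for number, raw in enumerate(hosts_text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments and blank lines
        if not line:
            continue
        fields = line.split()
        address, names = fields[0], fields[1:]
        if address.startswith("127.") and hostname in names:
            hits.append((number, raw))
    return hits


# Hypothetical hosts file contents, not copied from the poster's machine.
sample = """\
127.0.0.1   localhost
127.0.1.1   controller
192.168.1.186 controller
"""

for number, line in loopback_mappings(sample, "controller"):
    print(f"line {number}: {line}")
```

To check a real machine, pass the contents of /etc/hosts instead of `sample`. Any line reported is a candidate for commenting out, as suggested in the post.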


I found that the DataNode cannot connect to the NameNode. How can I fix this? The DataNode log shows:
DataNode: Problem connecting to server: controller/192.168.1.186:9000
2015-05-01 08:39:12,273 INFO org.apache.hadoop.ipc.Client: Retrying connect to server: controller/192.168.1.186:9000. Already tried 0 time(s); retry policy is RetryUpToMaximumCountWithFixedSleep(maxRetries=10, sleepTime=1000 MILLISECONDS)
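One way to narrow down an error like this is to test, from the DataNode host, whether a plain TCP connection to the NameNode address in the log can be opened at all. The following is a minimal sketch using only the Python standard library. The helper name `can_connect` is made up for this example, and it only tests reachability of the port, not whether the Hadoop RPC service behind it is healthy.

```python
import socket


def can_connect(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be opened within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False
```

Calling `can_connect("controller", 9000)` on the DataNode host would use the hostname and port from the log above. If it returns False while the NameNode process is running, one possible cause is that the NameNode is listening on a loopback address because of its hosts file, which is worth checking first.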
