I've recently been doing some preliminary performance tuning, and losing some data is acceptable in this case.

So I plan to configure HBase to skip writing the HLog (WAL) when data is written.

However, in version 0.98, `put.setWriteToWAL(false);` is deprecated and about to be phased out.

What is the new way to do this?

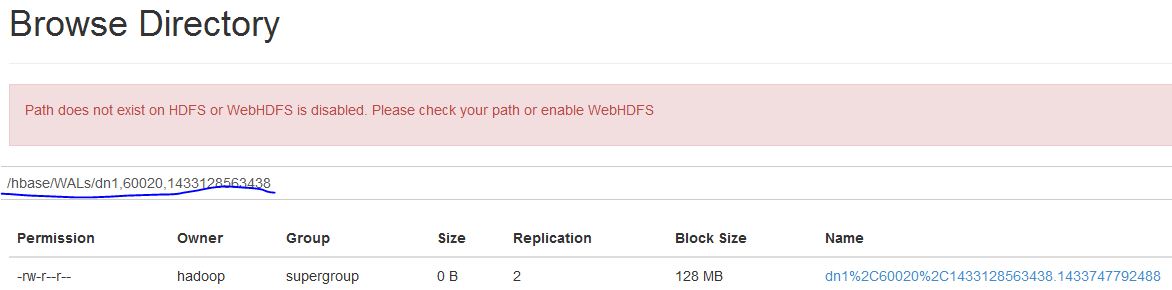

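Also, I went looking on HDFS for this and then checked the master's log. Under the hbase directory there are two directories, `oldWALs` and `WALs`. Which one does the log cleaning below clean up? Are both of them being cleaned?

The master log reports: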

```
2015-06-07 23:07:05,165 DEBUG [FifoRpcScheduler.handler1-thread-4] util.FSTableDescriptors: Exception during readTableDecriptor. Current table name = _LOCAL_IDX_INTEGRATE
org.apache.hadoop.hbase.TableInfoMissingException: No table descriptor file under hdfs://masters/hbase/data/default/_LOCAL_IDX_INTEGRATE
	at org.apache.hadoop.hbase.util.FSTableDescriptors.getTableDescriptorFromFs(FSTableDescriptors.java:510)
	at org.apache.hadoop.hbase.util.FSTableDescriptors.getTableDescriptorFromFs(FSTableDescriptors.java:487)
	at org.apache.hadoop.hbase.util.FSTableDescriptors.get(FSTableDescriptors.java:173)
	at org.apache.hadoop.hbase.master.HMaster.getTableDescriptors(HMaster.java:2821)
	at org.apache.hadoop.hbase.protobuf.generated.MasterProtos$MasterService$2.callBlockingMethod(MasterProtos.java:41447)
	at org.apache.hadoop.hbase.ipc.RpcServer.call(RpcServer.java:2093)
	at org.apache.hadoop.hbase.ipc.CallRunner.run(CallRunner.java:101)
	at org.apache.hadoop.hbase.ipc.FifoRpcScheduler$1.run(FifoRpcScheduler.java:74)
	at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:471)
	at java.util.concurrent.FutureTask.run(FutureTask.java:262)
	at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)
	at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)
	at java.lang.Thread.run(Thread.java:745)
2015-06-07 23:26:47,935 DEBUG [master:nn1:60000.oldLogCleaner] master.ReplicationLogCleaner: Didn't find this log in ZK, deleting: dn1%2C60020%2C1433128563438.1433726190963
2015-06-07 23:26:47,938 DEBUG [master:nn1:60000.oldLogCleaner] master.ReplicationLogCleaner: Didn't find this log in ZK, deleting: dn2%2C60020%2C1433128545540.1433729773837.meta
2015-06-07 23:26:47,938 DEBUG [master:nn1:60000.oldLogCleaner] master.ReplicationLogCleaner: Didn't find this log in ZK, deleting: dn3%2C60020%2C1433128563674.1433729790761
2015-06-08 00:26:47,933 DEBUG [master:nn1:60000.oldLogCleaner] master.ReplicationLogCleaner: Didn't find this log in ZK, deleting: dn1%2C60020%2C1433128563438.1433729791187
2015-06-08 00:26:47,934 DEBUG [master:nn1:60000.oldLogCleaner] master.ReplicationLogCleaner: Didn't find this log in ZK, deleting: dn1%2C60020%2C1433128563438.1433733391546
2015-06-08 00:26:47,934 DEBUG [master:nn1:60000.oldLogCleaner] master.ReplicationLogCleaner: Didn't find this log in ZK, deleting: dn2%2C60020%2C1433128545540.1433729773830
2015-06-08 00:26:47,934 DEBUG [master:nn1:60000.oldLogCleaner] master.ReplicationLogCleaner: Didn't find this log in ZK, deleting: dn2%2C60020%2C1433128545540.1433733374120.meta
```
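The file names in the `ReplicationLogCleaner` lines are URL-encoded: `%2C` is a comma, so `dn1%2C60020%2C1433128563438.1433726190963` reads as `dn1,60020,1433128563438` (host, port, region server start code) followed by a millisecond file number, and a trailing `.meta` marks a WAL of the `hbase:meta` region. The Java sketch below decodes such names so you can tell which region server and which roll each deleted file came from. `WalNameDecoder` and `WalName` are hypothetical helpers, not HBase classes, and the naming layout is my understanding of 0.98 rather than something verified against this cluster.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLDecoder;

public class WalNameDecoder {

    /** Parts of a region-server WAL file name (illustrative type, not an HBase class). */
    static final class WalName {
        final String host;
        final int port;
        final long startCode; // region server start time in ms
        final long fileNum;   // ms timestamp appended when the WAL file was created
        final boolean meta;   // ".meta" suffix: WAL of the hbase:meta region

        WalName(String host, int port, long startCode, long fileNum, boolean meta) {
            this.host = host;
            this.port = port;
            this.startCode = startCode;
            this.fileNum = fileNum;
            this.meta = meta;
        }

        @Override
        public String toString() {
            return host + ":" + port + " startcode=" + startCode
                    + " filenum=" + fileNum + (meta ? " (meta)" : "");
        }
    }

    /** Decodes a name such as "dn1%2C60020%2C1433128563438.1433726190963". */
    static WalName parse(String fileName) {
        String decoded;
        try {
            // "%2C" is a URL-encoded comma separating host, port and start code
            decoded = URLDecoder.decode(fileName, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            throw new IllegalStateException(e); // UTF-8 is always available
        }
        boolean meta = decoded.endsWith(".meta");
        if (meta) {
            decoded = decoded.substring(0, decoded.length() - ".meta".length());
        }
        // The file number follows the last '.'; host names may themselves contain dots.
        int dot = decoded.lastIndexOf('.');
        if (dot < 0) {
            throw new IllegalArgumentException("Unexpected WAL name: " + fileName);
        }
        long fileNum = Long.parseLong(decoded.substring(dot + 1));
        String[] server = decoded.substring(0, dot).split(",");
        if (server.length != 3) {
            throw new IllegalArgumentException("Unexpected WAL name: " + fileName);
        }
        return new WalName(server[0], Integer.parseInt(server[1]),
                Long.parseLong(server[2]), fileNum, meta);
    }

    public static void main(String[] args) {
        String[] names = {
            "dn1%2C60020%2C1433128563438.1433726190963",
            "dn2%2C60020%2C1433128545540.1433729773837.meta",
        };
        for (String n : names) {
            // prints e.g. "dn1:60020 startcode=1433128563438 filenum=1433726190963"
            System.out.println(parse(n));
        }
    }
}
```

Using `lastIndexOf('.')` after stripping `.meta` keeps the parse correct even for fully qualified host names such as `dn1.example.com`.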
