Last edited by mvs2008 on 2015-12-9 18:34

I wrote my own Hive UDF to implement Oracle's DECODE function.

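[mw_shl_code=java,true]package com.danny;

import org.apache.hadoop.hive.ql.exec.UDF;
import org.apache.hadoop.io.Text;

public class UDFDecode extends UDF {

    // Intended usage: decode(expr, search1, result1, ..., default)
    public String evaluate(Object... args) {
        String org_val, result = null;
        if (args.length == 0) {
            result = null;
        } else {
            if (args.length % 2 == 1) {
                // An odd count means no default value was supplied
                result = "No. of Parameters must be even";
            } else {
                for (int i = 0; i < args.length; i++) {
                    org_val = args[0].toString();
                    // Note: == compares String references, not String contents
                    if (i % 2 == 0 && i != 0 && org_val == args[i - 1].toString()) {
                        // Note: args.toString() is the array's identity string, not args[i]
                        result = args.toString();
                    }
                    // On the first pass result is still null, so it is set to the default here
                    if (result == null) {
                        result = args[args.length - 1].toString();
                    }
                }
            }
        }
        return result;
    }
}
[/mw_shl_code]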
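For comparison, here is a minimal plain-Java sketch of Oracle's DECODE semantics, independent of Hive: `DECODE(expr, search1, result1, ..., [default])` returns the result paired with the first search equal to `expr`, otherwise the default, or NULL when no default is given (Oracle also treats two NULLs as equal in DECODE, which `Objects.equals` mirrors). `DecodeSketch` and `decode` are hypothetical names, not a Hive API. The sketch compares with `equals` rather than `==`, returns the matching element rather than the array, and stops at the first match.

```java
import java.util.Objects;

/**
 * Hypothetical helper, not a Hive or Oracle API: Oracle-style DECODE on Strings.
 * decode(expr, search1, result1, ..., searchN, resultN[, default])
 */
final class DecodeSketch {

    static String decode(String... args) {
        // Oracle's DECODE needs at least expr, one search and one result.
        if (args == null || args.length < 3) {
            throw new IllegalArgumentException("decode needs at least 3 arguments");
        }
        String expr = args[0];
        // expr + N pairs is an odd count; an even count means a trailing default.
        boolean hasDefault = args.length % 2 == 0;
        int pairsEnd = hasDefault ? args.length - 1 : args.length;

        // Walk the (search, result) pairs and return on the first match.
        for (int i = 1; i < pairsEnd; i += 2) {
            // Objects.equals compares contents and treats null/null as a match.
            if (Objects.equals(expr, args[i])) {
                return args[i + 1];
            }
        }
        // No match: the default if present, otherwise null.
        return hasDefault ? args[args.length - 1] : null;
    }

    public static void main(String[] unused) {
        System.out.println(decode("a", "a", "b", "c")); // b
        System.out.println(decode("z", "a", "b", "c")); // c
        System.out.println(decode("z", "a", "b"));      // null
    }
}
```

Porting this into `UDFDecode.evaluate` would presumably mean converting each argument to a String (guarding against nulls) before running the same loop.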

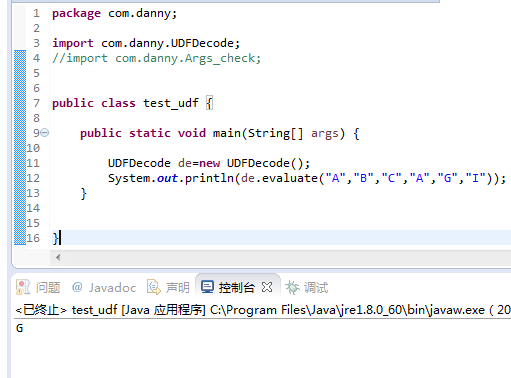

It passed my tests in Eclipse.

But after uploading it to Hive, running it gives a different result. Why is that?

hive> select decode('a','a','b','c') from dual;

Total MapReduce jobs = 1

Launching Job 1 out of 1

Number of reduce tasks is set to 0 since there's no reduce operator

Starting Job = job_1443597173524_0064, Tracking URL = http://master.hadoop:8088/proxy/application_1443597173524_0064/

Kill Command = /home/hadoop/hadoop-2.6.0/bin/hadoop job -kill job_1443597173524_0064

Hadoop job information for Stage-1: number of mappers: 1; number of reducers: 0

2015-12-09 02:19:47,385 Stage-1 map = 0%, reduce = 0%

2015-12-09 02:19:53,381 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.38 sec

2015-12-09 02:19:54,456 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.38 sec

2015-12-09 02:19:55,492 Stage-1 map = 100%, reduce = 0%, Cumulative CPU 1.38 sec

MapReduce Total cumulative CPU time: 1 seconds 380 msec

Ended Job = job_1443597173524_0064

MapReduce Jobs Launched:

Job 0: Map: 1 Cumulative CPU: 1.38 sec HDFS Read: 215 HDFS Write: 2 SUCCESS

Total MapReduce CPU Time Spent: 1 seconds 380 msec

OK

c

Time taken: 13.883 seconds, Fetched: 1 row(s)
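The returned `c` is the last argument, i.e. the default branch, which suggests the `'a'` comparison never succeeded inside Hive. A likely cause (an assumption, not confirmed in the post) is `org_val == args[i - 1].toString()`: `==` compares String references, not contents. If the Eclipse test passed string literals, `toString()` on a String returns the same interned object, so `==` happens to be true; in Hive the arguments most likely arrive as separate objects (for example Hadoop `Text`), whose `toString()` builds a new String each time. The hypothetical demo below uses `StringBuilder` to stand in for such objects without a Hadoop dependency.

```java
/**
 * Hypothetical demo: why `==` on Strings can pass locally but fail inside Hive.
 */
final class StringEqualityDemo {

    // Mirrors the UDF's comparison: reference equality of the two Strings.
    static boolean sameReference(Object a, Object b) {
        return a.toString() == b.toString();
    }

    // Content equality, which is what DECODE actually needs.
    static boolean sameContent(Object a, Object b) {
        return a.toString().equals(b.toString());
    }

    public static void main(String[] args) {
        // Literals are interned and String.toString() returns `this`,
        // so both sides are the same object and `==` is true.
        System.out.println(sameReference("a", "a")); // true

        // Distinct objects with equal content: each toString() call
        // allocates a new String, so `==` is false but equals() is true.
        Object x = new StringBuilder("a");
        Object y = new StringBuilder("a");
        System.out.println(sameReference(x, y)); // false
        System.out.println(sameContent(x, y));   // true
    }
}
```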
