I first noticed the problem when Hive statements just hung after I ran them. Later I found that even a simple MapReduce job hangs in exactly the same way.

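My cluster is 3 machines running CentOS 6.5 with CDH 5.4.8 (Hadoop 2.6.0), so there is no version incompatibility. MapReduce, Hive and Sqoop had all been working fine, and then they suddenly stopped. As far as I remember, I did not change any configuration before the problem appeared (after it appeared, I did change the YARN settings for task memory). I had only been writing HQL in Hive, and I had also set up crontab to run scripts on a schedule.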
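Since the YARN memory settings were changed after the problem appeared, it is worth ruling out a container request that can never be satisfied. A minimal sketch, assuming you copy the four values by hand from mapred-site.xml and yarn-site.xml; the class and function names are hypothetical, not a Hadoop API, and the check ignores the FairScheduler rounding requests up to yarn.scheduler.increment-allocation-mb. Roughly: a request above yarn.scheduler.maximum-allocation-mb is normally rejected at submission, while one under the maximum but larger than any node's yarn.nodemanager.resource.memory-mb may stay pending indefinitely.

```python
# Hypothetical sanity check for YARN/MapReduce memory settings (sketch, not a Hadoop API).
from dataclasses import dataclass
from typing import List


@dataclass
class YarnMemoryConfig:
    am_resource_mb: int      # yarn.app.mapreduce.am.resource.mb (Hadoop 2.6 default: 1536)
    map_memory_mb: int       # mapreduce.map.memory.mb (default: 1024)
    node_memory_mb: int      # yarn.nodemanager.resource.memory-mb (default: 8192)
    max_allocation_mb: int   # yarn.scheduler.maximum-allocation-mb (default: 8192)


def memory_problems(cfg: YarnMemoryConfig) -> List[str]:
    """Return reasons an AM or map container request could not be granted (rounding ignored)."""
    problems = []
    for name, request in (("AM", cfg.am_resource_mb), ("map task", cfg.map_memory_mb)):
        if request > cfg.max_allocation_mb:
            problems.append(
                f"{name} request {request} MB > maximum-allocation-mb {cfg.max_allocation_mb} MB"
            )
        if request > cfg.node_memory_mb:
            problems.append(
                f"{name} request {request} MB > per-node memory {cfg.node_memory_mb} MB"
            )
    return problems
```

With the Hadoop 2.6 defaults, `memory_problems(YarnMemoryConfig(1536, 1024, 8192, 8192))` returns an empty list; substitute the values actually configured on the cluster.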

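Every machine in the cluster has enough memory (8 GB, more than 6 GB free), but the disks are almost full (about 91% used on all three machines). Before the problem started, when about 95% was still free, jobs ran fine. (I have wondered whether the nearly full disks are the cause.)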
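On the disk suspicion: in Hadoop 2.6 the NodeManager's disk health checker marks a directory in yarn.nodemanager.local-dirs or yarn.nodemanager.log-dirs as bad once its utilization exceeds yarn.nodemanager.disk-health-checker.max-disk-utilization-per-disk-percentage (default 90.0), and when too few directories stay healthy (yarn.nodemanager.disk-health-checker.min-healthy-disks, default 0.25) the node reports itself unhealthy. The sketch below only approximates that per-disk check with Python's `shutil.disk_usage`; the used-percentage formula is an assumption modeled on the NodeManager's, and `df`'s Use% column can differ slightly because of reserved blocks.

```python
# Approximation of the NodeManager per-disk utilization check (sketch; Hadoop 2.6 defaults assumed).
import shutil

# yarn.nodemanager.disk-health-checker.max-disk-utilization-per-disk-percentage (default 90.0)
MAX_DISK_UTILIZATION_PCT = 90.0


def used_percent(total_bytes: int, usable_bytes: int) -> float:
    """Used space computed as 100 minus the usable-space percentage."""
    return 100.0 - 100.0 * usable_bytes / total_bytes


def would_be_marked_bad(total_bytes: int, usable_bytes: int,
                        cutoff: float = MAX_DISK_UTILIZATION_PCT) -> bool:
    """True if utilization is above the cutoff or the disk is completely full."""
    used = used_percent(total_bytes, usable_bytes)
    return used > cutoff or used >= 100.0


def check_dir(path: str, cutoff: float = MAX_DISK_UTILIZATION_PCT) -> bool:
    """Check the filesystem holding `path` against the cutoff."""
    usage = shutil.disk_usage(path)  # on POSIX, free is the space available to unprivileged users
    return would_be_marked_bad(usage.total, usage.free, cutoff)
```

Run `check_dir` on each path listed in yarn.nodemanager.local-dirs and yarn.nodemanager.log-dirs on every node; the ResourceManager web UI's node list also shows each node's health report for comparison.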

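Running the MR example bundled with Hadoop (I have also tried Hive, Sqoop, and my own WordCount built against exactly the cluster's version; they all behave as shown below):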

hadoop jar /root/hadoop-mapreduce-examples-2.2.0.jar pi 1 1

The output:

[mw_shl_code=java,true]Number of Maps = 1
Samples per Map = 1
Wrote input for Map #0
Starting Job
16/06/14 16:57:50 INFO client.RMProxy: Connecting to ResourceManager at DX3-1/172.31.7.78:8032
16/06/14 16:57:50 INFO input.FileInputFormat: Total input paths to process : 1
16/06/14 16:57:50 INFO mapreduce.JobSubmitter: number of splits:1
16/06/14 16:57:50 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1465868977833_0029
16/06/14 16:57:50 INFO impl.YarnClientImpl: Submitted application application_1465868977833_0029
16/06/14 16:57:50 INFO mapreduce.Job: The url to track the job: http://DX3-1:8088/proxy/application_1465868977833_0029/
16/06/14 16:57:50 INFO mapreduce.Job: Running job: job_1465868977833_0029[/mw_shl_code]

It just hangs here and never progresses. The corresponding ResourceManager log:

[mw_shl_code=java,true]2016-06-14 16:57:50,250 INFO org.apache.hadoop.yarn.server.resourcemanager.ClientRMService: Allocated new applicationId: 29
2016-06-14 16:57:50,878 INFO org.apache.hadoop.yarn.server.resourcemanager.ClientRMService: Application with id 29 submitted by user root
2016-06-14 16:57:50,878 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: Storing application with id application_1465868977833_0029
2016-06-14 16:57:50,878 INFO org.apache.hadoop.yarn.server.resourcemanager.RMAuditLogger: USER=root IP=172.31.7.78 OPERATION=Submit Application Request TARGET=ClientRMService RESULT=SUCCESS APPID=application_1465868977833_0029
2016-06-14 16:57:50,878 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: application_1465868977833_0029 State change from NEW to NEW_SAVING
2016-06-14 16:57:50,878 INFO org.apache.hadoop.yarn.server.resourcemanager.recovery.RMStateStore: Storing info for app: application_1465868977833_0029
2016-06-14 16:57:50,879 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: application_1465868977833_0029 State change from NEW_SAVING to SUBMITTED
2016-06-14 16:57:50,879 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler: Accepted application application_1465868977833_0029 from user: root, in queue: default, currently num of applications: 19
2016-06-14 16:57:50,879 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.RMAppImpl: application_1465868977833_0029 State change from SUBMITTED to ACCEPTED
2016-06-14 16:57:50,879 INFO org.apache.hadoop.yarn.server.resourcemanager.ApplicationMasterService: Registering app attempt : appattempt_1465868977833_0029_000001
2016-06-14 16:57:50,879 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1465868977833_0029_000001 State change from NEW to SUBMITTED
2016-06-14 16:57:50,879 INFO org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler: Added Application Attempt appattempt_1465868977833_0029_000001 to scheduler from user: root
2016-06-14 16:57:50,880 INFO org.apache.hadoop.yarn.server.resourcemanager.rmapp.attempt.RMAppAttemptImpl: appattempt_1465868977833_0029_000001 State change from SUBMITTED to SCHEDULED[/mw_shl_code]
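The ResourceManager excerpt can be reduced to the last state each application and attempt reached. A minimal sketch, assuming the `<id> State change from <OLD> to <NEW>` wording of the RMAppImpl and RMAppAttemptImpl lines quoted here: for this job the application stops at ACCEPTED and its attempt at SCHEDULED, so the attempt never moves on toward the allocated/launched states and no ApplicationMaster container is handed to a NodeManager. The FairScheduler line also reports 19 applications in queue default, which may be worth comparing with what the web UI shows.

```python
# Sketch: last state of each application / app attempt found in ResourceManager log lines.
import re
from typing import Dict, Iterable

# Matches e.g. "appattempt_..._000001 State change from SUBMITTED to SCHEDULED"
STATE_CHANGE = re.compile(r"(\S+) State change from (\S+) to (\S+)")


def last_states(lines: Iterable[str]) -> Dict[str, str]:
    """Map each application/attempt id to the most recent state it changed to."""
    states: Dict[str, str] = {}
    for line in lines:
        m = STATE_CHANGE.search(line)
        if m:
            entity, _old, new = m.groups()
            states[entity] = new  # later lines overwrite earlier ones
    return states
```

Usage: `with open(rm_log_path) as f: print(last_states(f))`, where `rm_log_path` is a placeholder for the ResourceManager log file on DX3-1 (its location depends on the installation).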

None of the NodeManagers have any corresponding log entries.

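After running export HADOOP_ROOT_LOGGER=DEBUG,console to print all DEBUG messages, I found no exceptions, but messages like the following keep scrolling by, one after another, as if the client could not reach the ResourceManager: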

[mw_shl_code=java,true]16/06/14 16:50:07 DEBUG security.UserGroupInformation: PrivilegedAction as:root (auth:SIMPLE) from:org.apache.hadoop.mapreduce.Job.updateStatus(Job.java:322)
16/06/14 16:50:07 DEBUG ipc.Client: IPC Client (453190819) connection to DX3-1/172.31.7.78:8032 from root sending #52
16/06/14 16:50:07 DEBUG ipc.Client: IPC Client (453190819) connection to DX3-1/172.31.7.78:8032 from root got value #52
16/06/14 16:50:07 DEBUG ipc.ProtobufRpcEngine: Call: getApplicationReport took 1ms
16/06/14 16:50:07 DEBUG security.UserGroupInformation: PrivilegedAction as:root (auth:SIMPLE) from:org.apache.hadoop.mapreduce.Job.updateStatus(Job.java:322)
16/06/14 16:50:07 DEBUG ipc.Client: IPC Client (453190819) connection to DX3-1/172.31.7.78:8032 from root sending #53
16/06/14 16:50:07 DEBUG ipc.Client: IPC Client (453190819) connection to DX3-1/172.31.7.78:8032 from root got value #53
16/06/14 16:50:07 DEBUG ipc.ProtobufRpcEngine: Call: getApplicationReport took 1ms
[/mw_shl_code]
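The DEBUG excerpt does not actually show a failed connection: each `sending #N` is answered by `got value #N`, and every getApplicationReport call completes in about 1 ms. That suggests the client reaches DX3-1:8032 fine and is simply polling the job status (Job.updateStatus) while the application waits to be scheduled. A small sketch to confirm this over a longer capture, assuming the line formats shown above:

```python
# Sketch: check that every IPC request in a Hadoop client DEBUG log got a reply.
import re
from collections import Counter
from typing import Any, Dict, Iterable

SENDING = re.compile(r"sending #(\d+)")
GOT_VALUE = re.compile(r"got value #(\d+)")
CALL = re.compile(r"Call: (\w+) took (\d+)ms")


def summarize_ipc(lines: Iterable[str]) -> Dict[str, Any]:
    """Return call ids that were sent but never answered, and a count per RPC method.

    Call ids are only unique within one client process, so feed it one run's output.
    """
    sent, answered = set(), set()
    calls: Counter = Counter()
    for line in lines:
        m = SENDING.search(line)
        if m:
            sent.add(int(m.group(1)))
        m = GOT_VALUE.search(line)
        if m:
            answered.add(int(m.group(1)))
        m = CALL.search(line)
        if m:
            calls[m.group(1)] += 1
    return {"unanswered": sorted(sent - answered), "calls": dict(calls)}
```

An empty `unanswered` list alongside a steadily growing getApplicationReport count points at a job waiting for resources rather than at a network problem.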

All nodes can ping each other, and the same thing happens even when I run the job on the machine hosting the ResourceManager.

I also noticed that since the problem began, newly submitted jobs no longer produce logs under /tmp/yarn-log/root/logs in HDFS, and running yarn logs -applicationId <application_Id> fails with:

[mw_shl_code=java,true]16/06/14 17:58:05 INFO client.RMProxy: Connecting to ResourceManager at DX3-1/172.31.7.78:8032
hdfs://DX3-1:8020/tmp/yarn-log/root/logs/application_1465868977833_0029does not exist.
Log aggregation has not completed or is not enabled.[/mw_shl_code]
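The path in that error follows YARN's aggregated-log layout, `<yarn.nodemanager.remote-app-log-dir>/<user>/<yarn.nodemanager.remote-app-log-dir-suffix>/<appId>`; here the first property appears to be set to /tmp/yarn-log, and `logs` is the default suffix. By default NodeManagers upload container logs only after the application finishes, so a job whose containers never started has nothing to aggregate, and the missing directory is consistent with the job never being scheduled rather than a separate fault. A small sketch that rebuilds the path from the job ID the client prints (function names are hypothetical helpers):

```python
# Sketch: where `yarn logs` looks for an application's aggregated logs.
from pathlib import PurePosixPath


def app_id_from_job_id(job_id: str) -> str:
    """job_<clusterTimestamp>_<seq> -> application_<clusterTimestamp>_<seq>."""
    prefix = "job_"
    if not job_id.startswith(prefix):
        raise ValueError(f"not a MapReduce job id: {job_id}")
    return "application_" + job_id[len(prefix):]


def aggregated_log_dir(remote_app_log_dir: str, user: str, suffix: str, app_id: str) -> str:
    """<remote-app-log-dir>/<user>/<remote-app-log-dir-suffix>/<appId>"""
    return str(PurePosixPath(remote_app_log_dir, user, suffix, app_id))
```

For this job, `aggregated_log_dir("/tmp/yarn-log", "root", "logs", app_id_from_job_id("job_1465868977833_0029"))` gives the same HDFS path the error message reports.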

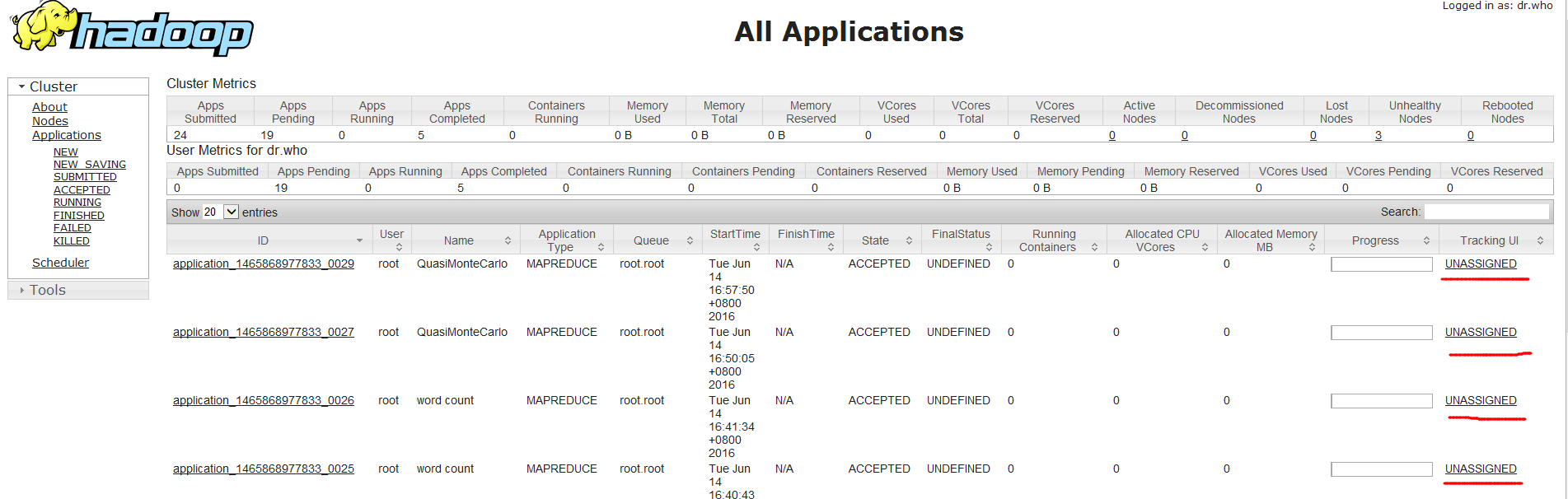

In addition, the YARN web UI shows every task as UNASSIGNED.
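Every task UNASSIGNED, together with a ResourceManager log that ends at SCHEDULED, suggests the scheduler has no node it can place containers on. A sketch that asks the ResourceManager REST API (`/ws/v1/cluster/metrics` and `/ws/v1/cluster/nodes`) how many NodeManagers are active or unhealthy and why; the RM address http://DX3-1:8088 comes from the job URL above, and the JSON field names should be checked against the REST API docs for your Hadoop version.

```python
# Sketch: ask the ResourceManager REST API which NodeManagers are usable.
import json
from typing import Any, Dict, List
from urllib.request import urlopen


def get_json(url: str) -> Dict[str, Any]:
    """GET a ResourceManager REST endpoint and decode the JSON body."""
    with urlopen(url, timeout=10) as resp:
        return json.load(resp)


def node_counts(metrics: Dict[str, Any]) -> Dict[str, int]:
    """Pick the node-state counters out of a /ws/v1/cluster/metrics response."""
    m = metrics["clusterMetrics"]
    keys = ("activeNodes", "unhealthyNodes", "lostNodes", "decommissionedNodes")
    return {k: int(m.get(k, 0)) for k in keys}


def problem_nodes(nodes: Dict[str, Any]) -> List[str]:
    """List nodes from a /ws/v1/cluster/nodes response that are not RUNNING, with health reports."""
    node_list = (nodes.get("nodes") or {}).get("node", [])
    return [
        f'{n.get("nodeHostName")}: {n.get("state")} {n.get("healthReport", "")}'.rstrip()
        for n in node_list
        if n.get("state") != "RUNNING"
    ]
```

Usage: `node_counts(get_json("http://DX3-1:8088/ws/v1/cluster/metrics"))` and `problem_nodes(get_json("http://DX3-1:8088/ws/v1/cluster/nodes"))`. If activeNodes is 0 and unhealthyNodes is 3, the health reports should say which check failed.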

That covers the problem; I think I have described it fairly completely. It is a bit long, so thanks to anyone who reads all of it.

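This problem is really strange, especially since everything used to run normally. A few days ago it suddenly stopped working and has not worked since.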

If anyone has run into something similar, I would really appreciate your help. Thanks!!

(Attached: screenshot of the YARN web UI.)