This post was last edited by yuwenge on 2018-6-20 20:25

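Analysis: since Hive 0.10, some queries can be executed without going through MapReduce, so the original poster first needs to confirm which Hive version is in use.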

1. If the query is configured to go through MapReduce

First, let's look at how Hive analyzes the following SQL statement:

[mw_shl_code=sql,true]hive> explain extended select sum(shopid) from shopt1 limit 10;

[/mw_shl_code]

The generated abstract syntax tree (AST) looks like this:

[mw_shl_code=sql,true]

ABSTRACT SYNTAX TREE:
  TOK_QUERY
     TOK_FROM
        TOK_TABREF
           TOK_TABNAME
              shopt1 --- table name
     TOK_INSERT
        TOK_DESTINATION
           TOK_DIR
              TOK_TMP_FILE --- all query results are written to a temporary file on HDFS
        TOK_SELECT
           TOK_SELEXPR
              TOK_FUNCTION
                 sum --- the function being used
                 TOK_TABLE_OR_COL
                    shopid ---- the selected column
        TOK_LIMIT
           10

[/mw_shl_code]

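The execution plan is shown below (this sample output was produced with the Tez engine, as the `Tez` line under Stage-1 shows):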

[mw_shl_code=bash,true]

STAGE DEPENDENCIES:
  Stage-1 is a root stage
  Stage-0 depends on stages: Stage-1 ---- Stage-0 depends on Stage-1

STAGE PLANS:
  Stage: Stage-1
    Tez
      Edges:
        Reducer 2 <- Map 1 (SIMPLE_EDGE)
      DagName: work_20160918215757_92e7e39d-b52e-49dc-b0dd-d8807b87c7d2:1
      Vertices:
        Map 1
            Map Operator Tree:
                TableScan ---- scan table shopt1
                  alias: shopt1
                  Statistics: Num rows: 5 Data size: 42 Basic stats: COMPLETE Column stats: NONE
                  Select Operator ----- project the selected columns
                    expressions: shopid (type: bigint)
                    outputColumnNames: shopid
                    Statistics: Num rows: 5 Data size: 42 Basic stats: COMPLETE Column stats: NONE
                    Group By Operator --- aggregation
                      aggregations: sum(shopid)
                      mode: hash --- hash-based partial aggregation
                      outputColumnNames: _col0
                      Statistics: Num rows: 1 Data size: 8 Basic stats: COMPLETE Column stats: NONE
                      Reduce Output Operator --- map-side output handed to the reduce phase
                        sort order:
                        Statistics: Num rows: 1 Data size: 8 Basic stats: COMPLETE Column stats: NONE
                        value expressions: _col0 (type: bigint)
        Reducer 2
            Reduce Operator Tree:
              Group By Operator ---- reduce-side merge of the partial aggregates
                aggregations: sum(VALUE._col0)
                mode: mergepartial
                outputColumnNames: _col0
                Statistics: Num rows: 1 Data size: 8 Basic stats: COMPLETE Column stats: NONE
                Select Operator
                  expressions: _col0 (type: bigint)
                  outputColumnNames: _col0
                  Statistics: Num rows: 1 Data size: 8 Basic stats: COMPLETE Column stats: NONE
                  Limit
                    Number of rows: 10
                    Statistics: Num rows: 1 Data size: 8 Basic stats: COMPLETE Column stats: NONE
                    File Output Operator --- final file output
                      compressed: false
                      Statistics: Num rows: 1 Data size: 8 Basic stats: COMPLETE Column stats: NONE
                      table:
                          input format: org.apache.hadoop.mapred.TextInputFormat --- input
                          output format: org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat --- output
                          serde: org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe -- serialization

  Stage: Stage-0
    Fetch Operator
      limit: 10 --- limit 10
      Processor Tree:
        ListSink

[/mw_shl_code]

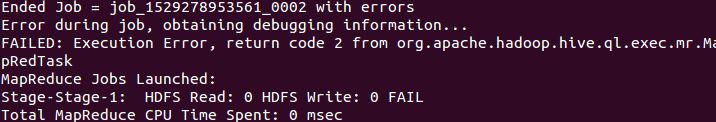

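The original poster's error already appears at Stage-1, which means the failure happens during the table scan.

Now let's look at the architecture diagram:

The problem is probably at step 6.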

2. If the query does not go through MapReduce

In this case no MapReduce job is generated, and Hive scans HDFS directly.
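As a rough sketch of how this behavior is controlled: the direct-read (fetch task) path is governed by the `hive.fetch.task.conversion` property. The statements below assume a Hive CLI session and reuse the `shopt1` table from the example above; the default value depends on the Hive version, so check it rather than assume it.

[mw_shl_code=sql,true]
-- Print the current value of the property
set hive.fetch.task.conversion;

-- Allow simple SELECT / filter / LIMIT queries to be served by a fetch task
set hive.fetch.task.conversion=more;

-- A plain projection like this can then be read straight from HDFS
select shopid from shopt1 limit 10;
[/mw_shl_code]

Note that an aggregate such as sum(shopid) is not eligible for this conversion and still goes through the execution engine.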

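In the original poster's output, Stage-1 reports HDFS Read: 0.

This also shows that the read did not succeed.

Since the read failed, the integration between Hive and Hadoop is the likely problem: check, for example, whether the versions are compatible and whether the configuration is correct. For the specific error message, look in the logs.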
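One possible first check, sketched here under the assumption of a Hive CLI session, is to confirm that Hive can actually see the table's files on HDFS. The warehouse path below is hypothetical; use the Location value that `describe formatted` prints for your table.

[mw_shl_code=sql,true]
-- Show the table metadata, including its Location on HDFS
describe formatted shopt1;

-- List that directory from inside the Hive CLI
-- (hypothetical path; replace with the Location reported above)
dfs -ls /user/hive/warehouse/shopt1;
[/mw_shl_code]

If the listing fails or the directory is empty, the problem lies in the HDFS connection or the data location rather than in the query itself.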

https://blog.csdn.net/u014307117/article/details/52558790
