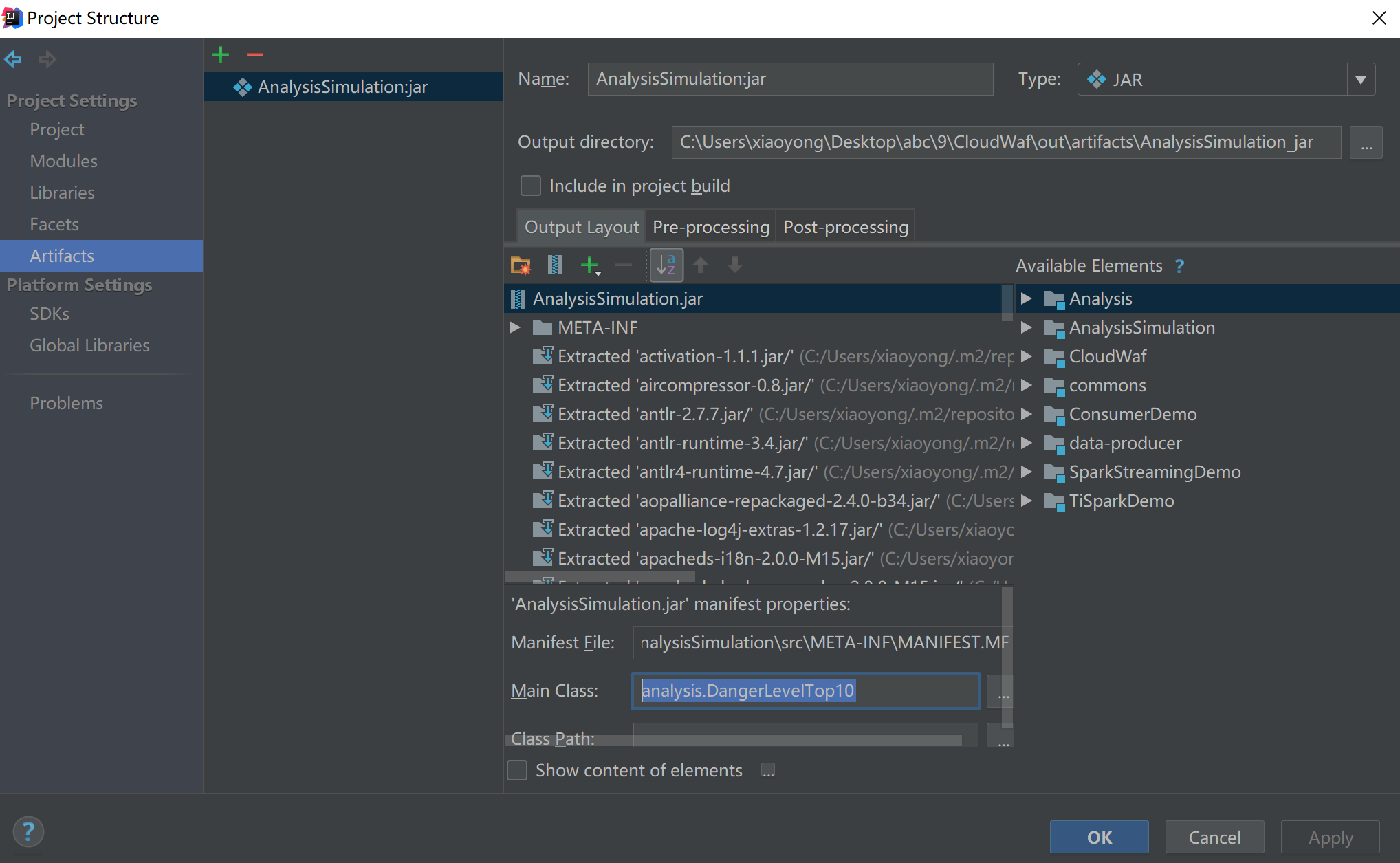

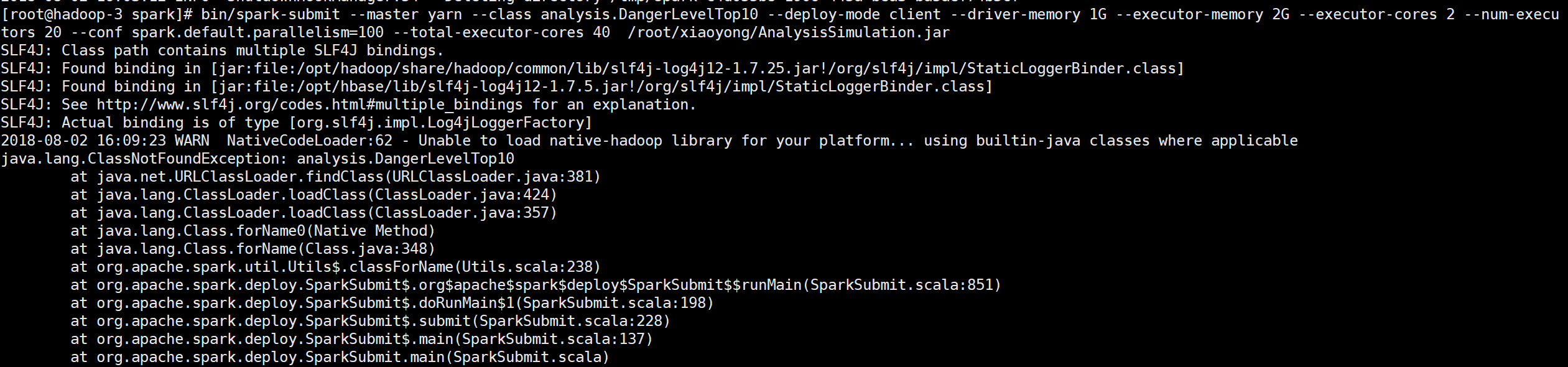

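This is a Maven project written in Scala, and the AnalysisSimulation module depends on the commons module. After packaging, running it throws `ClassNotFoundException: analysis.DangerLevelTop10`. The class name is definitely not misspelled, and when I unzip the jar I can find the `analysis.DangerLevelTop10` class in it. Can anyone help? This has been bugging me for days.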
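You can re-check the "it's in the jar" claim against the exact jar being launched with a small script like the sketch below. It assumes the path you pass is the jar actually handed to `java`/`spark-submit`. `JarClassCheck` and its methods are hypothetical helpers, not part of the project. Because `DangerLevelTop10` is a Scala `object`, scalac emits two class files for it: `analysis/DangerLevelTop10.class`, which carries the static `main` forwarder, and `analysis/DangerLevelTop10$.class`, the singleton itself. The sketch therefore looks for both, and it also prints the manifest's `Main-Class` if one is set. It only inspects jar entries. It does not prove the class will load with the runtime classpath.

```scala
import java.io.File
import java.util.jar.JarFile

// Hypothetical helper: inspects jar entries only, it never loads any class
object JarClassCheck {

  // A Scala `object Foo` compiles to Foo.class (static forwarders, incl. main) and Foo$.class (the singleton)
  def expectedEntries(fqcn: String): Seq[String] = {
    val path = fqcn.replace('.', '/')
    Seq(s"$path.class", s"$path$$.class")
  }

  // Returns the expected class-file entries that are NOT present in the jar
  def missingEntries(jarPath: String, fqcn: String): Seq[String] = {
    val jar = new JarFile(new File(jarPath))
    try expectedEntries(fqcn).filter(name => jar.getEntry(name) == null)
    finally jar.close()
  }

  // Reads the Main-Class attribute from META-INF/MANIFEST.MF, if any
  def mainClassAttribute(jarPath: String): Option[String] = {
    val jar = new JarFile(new File(jarPath))
    try Option(jar.getManifest).flatMap(m => Option(m.getMainAttributes.getValue("Main-Class")))
    finally jar.close()
  }

  def main(args: Array[String]): Unit = args match {
    case Array(jarPath, fqcn) =>
      val missing = missingEntries(jarPath, fqcn)
      if (missing.isEmpty) println(s"OK: all class files for $fqcn are in $jarPath")
      else println(s"Missing from $jarPath: ${missing.mkString(", ")}")
      println(s"Main-Class in manifest: ${mainClassAttribute(jarPath).getOrElse("<none>")}")
    case _ =>
      println("usage: JarClassCheck <path-to-jar> <fully.qualified.ClassName>")
  }
}
```

For example, pass the packaged AnalysisSimulation jar and `analysis.DangerLevelTop10` as the two arguments. If both entries are reported present, the problem is more likely in how the jar is launched (which jar, which `--class`) than in the packaging itself.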

The code itself is fine and runs normally, but I'm posting it for reference anyway:

[mw_shl_code=scala,true]package analysis

import analysis.AnalysisSimulation.getSourceIp2CountRDD
import commons.conf.ConfigurationManager
import model.{BizWafBean, MaxSourceIp}
import org.apache.spark.{SparkConf, SparkContext}
import org.apache.spark.rdd.RDD
import org.apache.spark.sql.{SaveMode, SparkSession}

object DangerLevelTop10 {

  def main(args: Array[String]): Unit = {
    val startTime = System.currentTimeMillis()

    // Note: a master set in code takes precedence over spark-submit's --master flag
    val sparkConf = new SparkConf().setMaster("local").setAppName("analyse_optimize")
    sparkConf.set("spark.serializer", "org.apache.spark.serializer.KryoSerializer")
    val sparkContext = new SparkContext(sparkConf)
    // getOrCreate() reuses the SparkContext created above
    val sparkSession = SparkSession.builder().config(sparkConf).getOrCreate()

    // Read the input data (from Hadoop or MySQL)
    val cloudWafAction = getCloudWafAction(sparkSession)
    // cloudWafAction.persist(StorageLevel.MEMORY_AND_DISK_SER)

    // Requirement 2: find the source_ip with the most attacks
    val sourceip2CountRDD: RDD[(String, Long)] = getSourceIp2CountRDD(cloudWafAction)
    val maxSourceIp = sourceip2CountRDD.sortBy(_._2, false).take(1)
    val maxSourceIp2Count = maxSourceIp.map(item => MaxSourceIp(item._1, item._2))
    val maxSourceIp2CountRDD = sparkContext.makeRDD(maxSourceIp2Count)

    import sparkSession.implicits._
    maxSourceIp2CountRDD.toDF().write
      .format("jdbc")
      .option("url", ConfigurationManager.config.getString("jdbc.url"))
      .option("dbtable", ConfigurationManager.config.getString("mysql.cloud_defense_test_maxsourceip"))
      .option("user", ConfigurationManager.config.getString("jdbc.user"))
      .option("password", ConfigurationManager.config.getString("jdbc.password"))
      .mode(SaveMode.Overwrite)
      .save()

    val endTime = System.currentTimeMillis()
    println(" spend time " + (endTime - startTime) / 1000)
  }

  def getCloudWafAction(sparkSession: SparkSession): RDD[BizWafBean] = {
    import sparkSession.implicits._
    val cloudWafActionDF = sparkSession.read.parquet("hdfs://192.168.1.112:9000/user/spark/data/parquet/bizWafhistory/part-00000-01911ebb-b750-4021-a2eb-6fc734e0a34c-c000.snappy.parquet")
    cloudWafActionDF.as[BizWafBean].rdd
  }
}[/mw_shl_code]

Whoever helps me solve this gets a 5-yuan red packet as thanks.
