
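Guiding questions:

1. How is the Siamese RNN similarity model architecture structured?

2. How do we create the Siamese RNN similarity model?

3. How do we get a one-hot-encoded embedding by using an identity matrix as the lookup matrix?

4. How do we declare the model's accuracy and loss functions?

Previous post: TensorFlow ML cookbook, Chapter 9, Sections 3 and 4: stacking multiple LSTM layers and creating sequence-to-sequence models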

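Training a Siamese Similarity Measure

A great property of RNN models, compared with many other models, is that they can handle sequences of varying lengths. Taking advantage of this, and of the fact that they can generalize to sequences not seen before, we can build a way to measure how similar two input sequences are. In this recipe, we will train a Siamese similarity RNN to measure the similarity between addresses for record matching.

Getting ready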

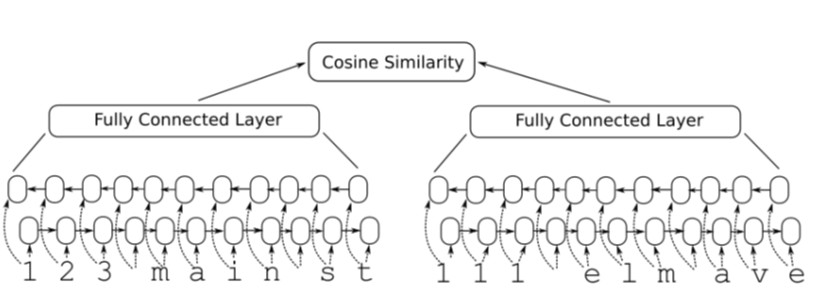

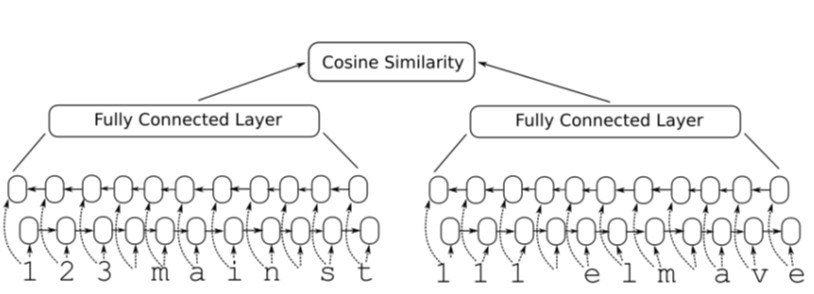

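In this recipe, we will build a bidirectional RNN model that feeds into a fully connected layer, which outputs a fixed-length numerical vector (length 100). Each of the two input addresses passes through the same bidirectional RNN layer and the same fully connected layer. We then compare the two output vectors with the cosine similarity (called the cosine distance in this recipe), which is bounded between -1 and 1. We label similar input pairs with a target of 1 and dissimilar pairs with a target of -1. The prediction is simply the sign of the cosine output (negative means dissimilar, positive means similar). We can use this network for record matching by taking the reference address that scores highest against the query address. See the following network architecture diagram:

Figure 8: Siamese RNN similarity model architecture.

Another advantage of this model is that it accepts inputs it has not seen before and can still compare them, producing an output between -1 and 1. We will show this in the code by picking a test address that the model has not seen before and checking whether it can match it to a similar address.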
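To make the comparison step concrete, here is a minimal NumPy sketch (not the recipe's TensorFlow code) of how two batches of fixed-length vectors can be unit-normalized, compared with a dot product, and turned into similar/dissimilar predictions by taking the sign. The function name `cosine_scores` and the sample vectors are assumptions made purely for illustration.

```python
import numpy as np

def cosine_scores(a, b):
    """Row-wise cosine similarity between two batches of vectors."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    # After unit normalization, the dot product equals the cosine similarity
    return np.sum(a * b, axis=1)

# Hypothetical network outputs for three address pairs
out1 = np.array([[1.0, 2.0], [1.0, 0.0], [1.0, 1.0]])
out2 = np.array([[2.0, 4.0], [-1.0, 0.0], [0.0, 1.0]])

scores = cosine_scores(out1, out2)
predictions = np.sign(scores)  # +1 means similar, -1 means dissimilar
print(scores)
print(predictions)
```

Parallel vectors score close to 1, opposite vectors close to -1, and everything else falls in between, which is why the sign alone is enough for a binary prediction.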

How to do it…

1. We start by loading the necessary libraries and starting a graph session:

[mw_shl_code=python,true]
import os
import random
import string
import numpy as np
import matplotlib.pyplot as plt
import tensorflow as tf

sess = tf.Session()
[/mw_shl_code]

2. We now set the model parameters as follows:

[mw_shl_code=python,true]
batch_size = 200
n_batches = 300
max_address_len = 20
margin = 0.25
num_features = 50
dropout_keep_prob = 0.8
[/mw_shl_code]

3. Next, we create the Siamese RNN similarity model as the function `snn`:

[mw_shl_code=python,true]
# Note: this code uses the pre-1.0 TensorFlow API
# (tf.split argument order, tf.mul, tf.nn.bidirectional_rnn).
def snn(address1, address2, dropout_keep_prob,
        vocab_size, num_features, input_length):

    # Define the siamese double RNN with a fully connected layer at the end
    def siamese_nn(input_vector, num_hidden):
        cell_unit = tf.nn.rnn_cell.BasicLSTMCell

        # Forward direction cell
        lstm_forward_cell = cell_unit(num_hidden, forget_bias=1.0)
        lstm_forward_cell = tf.nn.rnn_cell.DropoutWrapper(lstm_forward_cell, output_keep_prob=dropout_keep_prob)

        # Backward direction cell
        lstm_backward_cell = cell_unit(num_hidden, forget_bias=1.0)
        lstm_backward_cell = tf.nn.rnn_cell.DropoutWrapper(lstm_backward_cell, output_keep_prob=dropout_keep_prob)

        # Split the input into a character sequence
        input_embed_split = tf.split(1, input_length, input_vector)
        input_embed_split = [tf.squeeze(x, squeeze_dims=[1]) for x in input_embed_split]

        # Create bidirectional layer
        outputs, _, _ = tf.nn.bidirectional_rnn(lstm_forward_cell,
                                                lstm_backward_cell,
                                                input_embed_split,
                                                dtype=tf.float32)

        # Average the output over the sequence
        temporal_mean = tf.add_n(outputs) / input_length

        # Fully connected layer
        output_size = 10
        A = tf.get_variable(name="A", shape=[2*num_hidden, output_size],
                            dtype=tf.float32,
                            initializer=tf.random_normal_initializer(stddev=0.1))
        b = tf.get_variable(name="b", shape=[output_size], dtype=tf.float32,
                            initializer=tf.random_normal_initializer(stddev=0.1))

        final_output = tf.matmul(temporal_mean, A) + b
        final_output = tf.nn.dropout(final_output, dropout_keep_prob)

        return(final_output)

    with tf.variable_scope("siamese") as scope:
        output1 = siamese_nn(address1, num_features)
        # Declare that we will use the same variables on the second string
        scope.reuse_variables()
        output2 = siamese_nn(address2, num_features)

    # Unit normalize the outputs
    output1 = tf.nn.l2_normalize(output1, 1)
    output2 = tf.nn.l2_normalize(output2, 1)

    # Return cosine distance
    # (for unit-normalized vectors, the dot product gives the same value)
    dot_prod = tf.reduce_sum(tf.mul(output1, output2), 1)

    return(dot_prod)
[/mw_shl_code]

4. Now we declare our prediction function, which is just the sign of the cosine output:

[mw_shl_code=python,true]
def get_predictions(scores):
    predictions = tf.sign(scores, name="predictions")
    return(predictions)
[/mw_shl_code]

5. Now we declare our loss function. Remember that we want to leave a margin for error (similar to an SVM model). The loss has one term for similar pairs and one term for dissimilar pairs. Use the following code for the loss:

[mw_shl_code=python,true]
def loss(scores, y_target, margin):
    # Calculate the positive losses
    pos_loss_term = 0.25 * tf.square(tf.sub(1., scores))
    pos_mult = tf.cast(y_target, tf.float32)

    # Make sure positive losses are on similar strings
    positive_loss = tf.mul(pos_mult, pos_loss_term)

    # Calculate negative losses, then make sure on dissimilar strings
    neg_mult = tf.sub(1., tf.cast(y_target, tf.float32))
    negative_loss = neg_mult*tf.square(scores)

    # Combine similar and dissimilar losses
    loss = tf.add(positive_loss, negative_loss)

    # Create the margin term. This is when the targets are 0., and the scores are less than m, return 0.

    # Check if target is zero (dissimilar strings)
    target_zero = tf.equal(tf.cast(y_target, tf.float32), 0.)
    # Check if cosine outputs is smaller than margin
    less_than_margin = tf.less(scores, margin)
    # Check if both are true
    both_logical = tf.logical_and(target_zero, less_than_margin)
    both_logical = tf.cast(both_logical, tf.float32)
    # If both are true, then multiply by (1-1)=0.
    multiplicative_factor = tf.cast(1. - both_logical, tf.float32)
    total_loss = tf.mul(loss, multiplicative_factor)

    # Average loss over batch
    avg_loss = tf.reduce_mean(total_loss)
    return(avg_loss)
[/mw_shl_code]
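The loss above is easier to follow when evaluated on a few numbers. The following is a minimal NumPy sketch that mirrors the same terms one by one; the function name `margin_loss` and the sample scores are assumptions for illustration only. Note that the margin mask tests for a target of 0, while the batch function in this recipe labels dissimilar pairs with -1, so with those labels the mask would not trigger. The sketch therefore uses 0/1 targets to show the behavior that the code comments describe.

```python
import numpy as np

def margin_loss(scores, y_target, margin):
    """NumPy mirror of the recipe's loss, term by term."""
    scores = np.asarray(scores, dtype=np.float32)
    y = np.asarray(y_target, dtype=np.float32)
    positive_loss = y * 0.25 * np.square(1.0 - scores)
    negative_loss = (1.0 - y) * np.square(scores)
    loss = positive_loss + negative_loss
    # Zero the loss where target == 0 and the score is already below the margin
    masked = np.logical_and(y == 0.0, scores < margin)
    return float(np.mean(loss * (1.0 - masked.astype(np.float32))))

# Hypothetical scores with 0/1 targets (1 = similar, 0 = dissimilar)
scores = [1.0, 0.0, 0.1, 0.5]
targets = [1, 1, 0, 0]
example_loss = margin_loss(scores, targets, margin=0.25)
print(example_loss)
```

In this example the dissimilar pair scoring 0.1 contributes nothing because it is already below the margin, while the dissimilar pair scoring 0.5 is penalized.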

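6. We declare an accuracy function as follows:

[mw_shl_code=python,true]
def accuracy(scores, y_target):
    predictions = get_predictions(scores)
    # Cast both sides to int32 so tf.equal compares tensors of the same dtype
    # (the scores are float32, while the target placeholder is int32)
    predictions_int = tf.cast(predictions, tf.int32)
    y_target_int = tf.cast(y_target, tf.int32)
    correct_predictions = tf.equal(predictions_int, y_target_int)
    accuracy = tf.reduce_mean(tf.cast(correct_predictions, tf.float32))
    return(accuracy)
[/mw_shl_code]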

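7. We create similar addresses by introducing a typo into an address. We label each such pair (reference address and typo address) as similar:

[mw_shl_code=python,true]
def create_typo(s):
    # Replace one randomly chosen character with a random letter or digit
    rand_ind = random.choice(range(len(s)))
    s_list = list(s)
    s_list[rand_ind] = random.choice(string.ascii_lowercase + '0123456789')
    s = ''.join(s_list)
    return(s)
[/mw_shl_code]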
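As a quick check of what the typo function does, the sketch below repeats the function so that it runs on its own and applies it to one address. The seed value and the sample address are arbitrary choices for illustration. Because the replacement character is drawn at random, it can occasionally equal the character it replaces, so the number of changed characters is either 0 or 1.

```python
import random
import string

def create_typo(s):
    # Replace one randomly chosen character with a random letter or digit
    rand_ind = random.choice(range(len(s)))
    s_list = list(s)
    s_list[rand_ind] = random.choice(string.ascii_lowercase + '0123456789')
    return ''.join(s_list)

random.seed(0)  # arbitrary seed, used only to make the run repeatable
original = '123 abbey ln'
typo = create_typo(original)
num_changed = sum(1 for a, b in zip(original, typo) if a != b)
print(original, '->', typo, '| characters changed:', num_changed)
```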

8. The data that we generate will be random combinations of street numbers, street names, and street suffixes. The names and suffixes come from the following lists:

[mw_shl_code=python,true]
street_names = ['abbey', 'baker', 'canal', 'donner', 'elm', 'fifth', 'grandvia', 'hollywood', 'interstate', 'jay', 'kings']
street_types = ['rd', 'st', 'ln', 'pass', 'ave', 'hwy', 'cir', 'dr', 'jct']
[/mw_shl_code]

9. We generate test queries and references as follows:

[mw_shl_code=python,true]
test_queries = ['111 abbey ln', '271 doner cicle',
                '314 king avenue', 'tensorflow is fun']
test_references = ['123 abbey ln', '217 donner cir', '314 kings ave', '404 hollywood st', 'tensorflow is so fun']
[/mw_shl_code]

Note that the last query and the last reference are not addresses the model will have seen before, but we hope the model will still see them as the most similar pair.

10. We now define how to generate a batch of data. Half of each batch will be similar addresses (a reference address and its typo version) and half will be dissimilar addresses. We generate the dissimilar pairs by taking half of the address list and shifting the typo addresses by one position (with the function numpy.roll()):

[mw_shl_code=python,true]
def get_batch(n):
    # Generate a list of reference addresses with similar addresses that have
    # a typo.
    numbers = [random.randint(1, 9999) for i in range(n)]
    streets = [random.choice(street_names) for i in range(n)]
    street_suffs = [random.choice(street_types) for i in range(n)]
    full_streets = [str(w) + ' ' + x + ' ' + y for w,x,y in zip(numbers, streets, street_suffs)]
    typo_streets = [create_typo(x) for x in full_streets]
    reference = [list(x) for x in zip(full_streets, typo_streets)]

    # Shuffle last half of them for training on dissimilar addresses
    half_ix = int(n/2)
    bottom_half = reference[half_ix:]
    true_address = [x[0] for x in bottom_half]
    typo_address = [x[1] for x in bottom_half]
    typo_address = list(np.roll(typo_address, 1))
    bottom_half = [[x,y] for x,y in zip(true_address, typo_address)]
    reference[half_ix:] = bottom_half

    # Get target similarities (1's for similar, -1's for non-similar).
    # The first half_ix pairs are similar; the remaining n-half_ix were rolled.
    target = [1]*half_ix + [-1]*(n-half_ix)
    reference = [[x,y] for x,y in zip(reference, target)]
    return(reference)
[/mw_shl_code]
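The shifting trick is the least obvious part of the batch function, so here is a small sketch of it in isolation. The three sample addresses and their typo versions are made up for illustration. Rolling the typo list by one position pairs every true address with the typo of a different address.

```python
import numpy as np

# Hypothetical true addresses and their typo versions
true_address = ['1 elm st', '2 jay rd', '3 kings ave']
typo_address = ['1 elm zt', '2 jay rx', '3 kingz ave']

# Shift the typo list by one position so that pairs no longer line up
shifted = [str(s) for s in np.roll(typo_address, 1)]
pairs = [[x, y] for x, y in zip(true_address, shifted)]
for pair in pairs:
    print(pair)
```

Each true address is now paired with a typo generated from a different address, which is what the recipe treats as a dissimilar pair. If two randomly generated addresses happen to be identical and adjacent, a rolled pair could still match, which is a limitation of this simple scheme.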

11. Next, we define our address vocabulary and how to encode an address as a sequence of indices (the one-hot encoding itself happens in the embedding lookup of the next step):

[mw_shl_code=python,true]
vocab_chars = string.ascii_lowercase + '0123456789 '
vocab2ix_dict = {char:(ix+1) for ix, char in enumerate(vocab_chars)}
vocab_length = len(vocab_chars) + 1

# Define vocab one-hot encoding
def address2onehot(address,
                   vocab2ix_dict = vocab2ix_dict,
                   max_address_len = max_address_len):
    # translate address string into indices
    address_ix = [vocab2ix_dict[x] for x in list(address)]
    # Pad or crop to max_address_len
    address_ix = (address_ix + [0]*max_address_len)[0:max_address_len]
    return(address_ix)
[/mw_shl_code]
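To see the encoding on a concrete input, the sketch below repeats the vocabulary definition so that it runs on its own and encodes one short address. The sample address is an arbitrary choice. Index 0 is reserved for padding, which is why every character index is shifted up by one.

```python
import string

vocab_chars = string.ascii_lowercase + '0123456789 '
vocab2ix_dict = {char: (ix + 1) for ix, char in enumerate(vocab_chars)}
max_address_len = 20

def address2onehot(address):
    # Translate the address string into indices
    address_ix = [vocab2ix_dict[x] for x in list(address)]
    # Pad with zeros or crop to max_address_len
    return (address_ix + [0] * max_address_len)[0:max_address_len]

encoded = address2onehot('12 elm st')
cropped = address2onehot('a' * 30)
print(encoded)
print(len(cropped))
```

Characters outside the vocabulary (uppercase letters or punctuation, for example) would raise a `KeyError` here, so inputs need to be lowercased and cleaned first.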

12. After dealing with the vocabulary, we start declaring our model placeholders and the embedding lookup. For the embedding lookup, we get a one-hot-encoded embedding by using an identity matrix as the lookup matrix. Use the following code:

[mw_shl_code=python,true]
address1_ph = tf.placeholder(tf.int32, [None, max_address_len], name="address1_ph")
address2_ph = tf.placeholder(tf.int32, [None, max_address_len], name="address2_ph")
y_target_ph = tf.placeholder(tf.int32, [None], name="y_target_ph")
dropout_keep_prob_ph = tf.placeholder(tf.float32, name="dropout_keep_prob")

# Create embedding lookup
identity_mat = tf.diag(tf.ones(shape=[vocab_length]))
address1_embed = tf.nn.embedding_lookup(identity_mat, address1_ph)
address2_embed = tf.nn.embedding_lookup(identity_mat, address2_ph)
[/mw_shl_code]
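The reason an identity matrix yields a one-hot encoding is that looking up index i simply selects row i, which is all zeros except for a 1 in position i. Here is a minimal NumPy sketch of the same idea, using array indexing as a stand-in for the embedding lookup; the short index sequence is made up for illustration.

```python
import numpy as np

vocab_length = 38  # 37 characters plus the padding index 0, as in the recipe
identity_mat = np.eye(vocab_length, dtype=np.float32)

# Hypothetical batch containing one short index sequence
address_ix = np.array([[28, 29, 37, 5, 0]])

# Selecting rows of the identity matrix gives one one-hot vector per index
address_embed = identity_mat[address_ix]
print(address_embed.shape)
```

The result has shape (batch, sequence length, vocabulary size). Note that the padding index 0 also maps to a one-hot vector (a 1 in position 0) rather than to an all-zero vector.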

13. We now declare the model, accuracy, loss, and prediction operations as follows:

[mw_shl_code=python,true]
# Define Model
# (snn, accuracy, loss and get_predictions are the functions defined in the steps above)
text_snn = snn(address1_embed, address2_embed, dropout_keep_prob_ph,
               vocab_length, num_features, max_address_len)

# Define Accuracy
batch_accuracy = accuracy(text_snn, y_target_ph)
# Define Loss
batch_loss = loss(text_snn, y_target_ph, margin)
# Define Predictions
predictions = get_predictions(text_snn)
[/mw_shl_code]

14. Finally, before we can start training, we add the optimization and initialization operations to the graph as follows:

[mw_shl_code=python,true]
# Declare optimizer
optimizer = tf.train.AdamOptimizer(0.01)
# Apply gradients
train_op = optimizer.minimize(batch_loss)

# Initialize Variables
init = tf.global_variables_initializer()
sess.run(init)
[/mw_shl_code]

15. We now iterate through the training generations and keep track of the loss and accuracy:

[mw_shl_code=python,true]
train_loss_vec = []
train_acc_vec = []
for b in range(n_batches):
    # Get a batch of data
    batch_data = get_batch(batch_size)
    # Shuffle data
    np.random.shuffle(batch_data)
    # Parse addresses and targets
    input_addresses = [x[0] for x in batch_data]
    target_similarity = np.array([x[1] for x in batch_data])
    address1 = np.array([address2onehot(x[0]) for x in input_addresses])
    address2 = np.array([address2onehot(x[1]) for x in input_addresses])

    train_feed_dict = {address1_ph: address1,
                       address2_ph: address2,
                       y_target_ph: target_similarity,
                       dropout_keep_prob_ph: dropout_keep_prob}

    _, train_loss, train_acc = sess.run([train_op, batch_loss, batch_accuracy],
                                        feed_dict=train_feed_dict)
    # Save train loss and accuracy
    train_loss_vec.append(train_loss)
    train_acc_vec.append(train_acc)
[/mw_shl_code]

16. After training, we process the test queries and references to see how the model performs:

[mw_shl_code=python,true]
test_queries_ix = np.array([address2onehot(x) for x in test_queries])
test_references_ix = np.array([address2onehot(x) for x in test_references])
num_refs = test_references_ix.shape[0]
best_fit_refs = []
for query in test_queries_ix:
    # Repeat the query once per reference so each row is one (query, reference) pair
    test_query = np.repeat(np.array([query]), num_refs, axis=0)
    test_feed_dict = {address1_ph: test_query,
                      address2_ph: test_references_ix,
                      y_target_ph: target_similarity,
                      dropout_keep_prob_ph: 1.0}
    test_out = sess.run(text_snn, feed_dict=test_feed_dict)
    # The best match is the reference with the highest score
    best_fit = test_references[np.argmax(test_out)]
    best_fit_refs.append(best_fit)

print('Query Addresses: {}'.format(test_queries))
print('Model Found Matches: {}'.format(best_fit_refs))
[/mw_shl_code]

17. This results in the following output:

[mw_shl_code=python,true]
Query Addresses: ['111 abbey ln', '271 doner cicle', '314 king avenue', 'tensorflow is fun']
Model Found Matches: ['123 abbey ln', '217 donner cir', '314 kings ave', 'tensorflow is so fun']
[/mw_shl_code]
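The matching step itself needs no TensorFlow: the query is repeated once per reference, every (query, reference) row is scored, and the reference with the highest score wins. The sketch below shows only that mechanism. The encoded query and the score values are hypothetical placeholders standing in for the output of the trained model, not results from it.

```python
import numpy as np

test_references = ['123 abbey ln', '217 donner cir', '314 kings ave']
num_refs = len(test_references)

# Hypothetical encoded query (index sequence), tiled once per reference
query_ix = np.array([28, 28, 28, 37, 1])
tiled_query = np.repeat(np.array([query_ix]), num_refs, axis=0)

# Hypothetical similarity scores, one per (query, reference) row
test_out = np.array([0.91, -0.20, 0.15])

best_fit = test_references[int(np.argmax(test_out))]
print(tiled_query.shape)
print(best_fit)
```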

There's more…

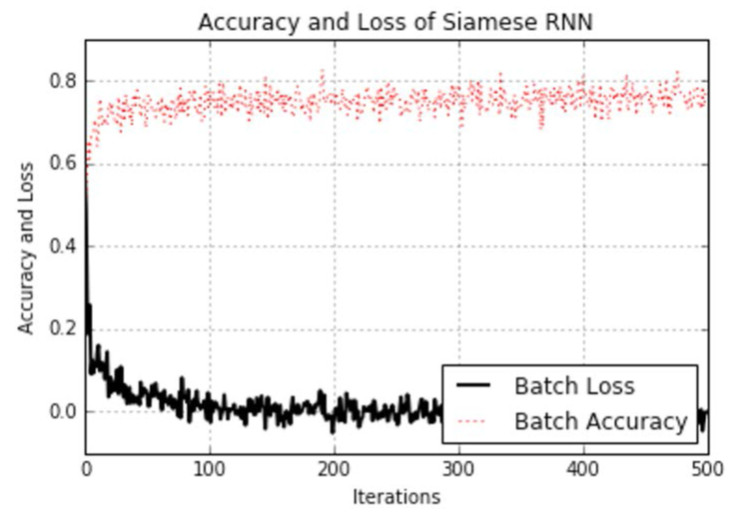

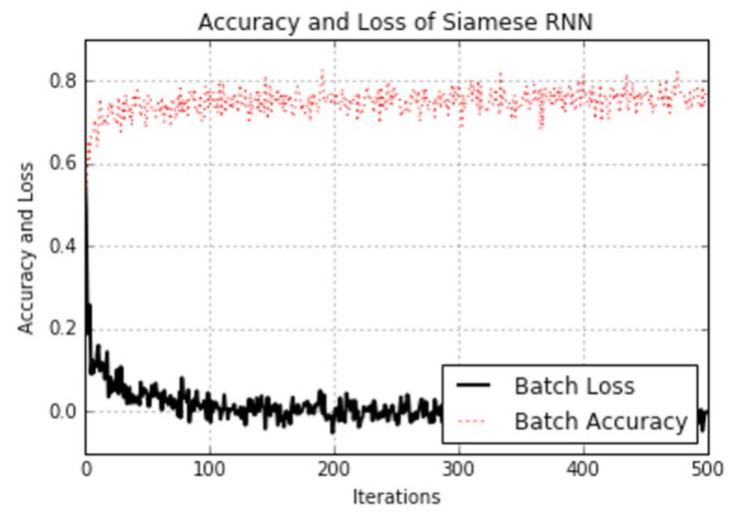

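We can see from the test queries and references that the model not only identified the correct reference addresses, it also generalized to a non-address phrase. We can also see how the model performed by looking at the loss and accuracy during training:

Figure 9: The accuracy and loss for the Siamese RNN similarity model during training.

Notice that we did not have a designated test set for this exercise. The reason lies in how we generated the data. Our batch function creates new batch data every time it is called, so the model is always seeing new data. Because of this, we can use the batch loss and accuracy as proxies for the test loss and accuracy. This does not hold for a finite set of real data, where we always need separate train and test sets to judge the performance of the model.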

