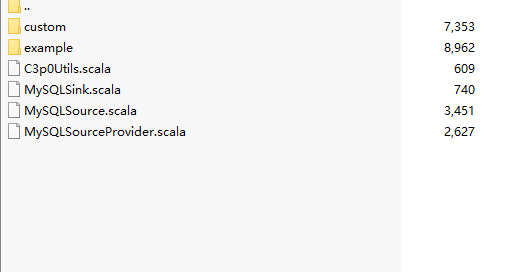

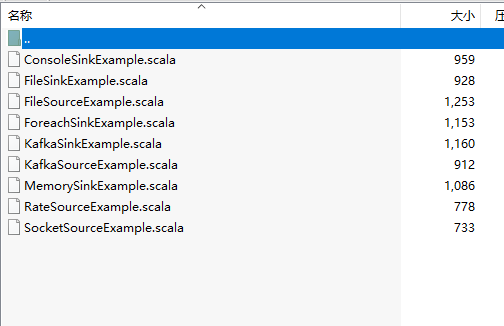

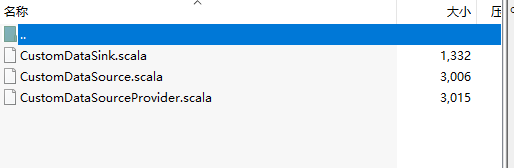

Structured Streaming: Built-in Data Sources and Implementing a Custom Data Source [Code and Detailed Explanation]

Copyright © 2001-2025 About云-梭伦科技 Powered by Discuz! X3.4 Licensed Discuz Team.
