上一篇:ClickHouse源码阅读计划(三)物化视图的概念、场景、用法和源码实现

问题导读:

1、如何理解Bloom Filter 的原理?

2、Bloom Filter 数据结构如何实现?

3、Skipping Index如何应用?

4、两种Bloom Filter MergeTree Index都是什么?

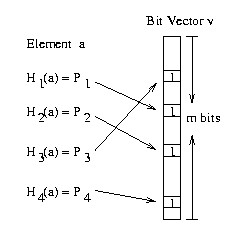

Bloom Filter 的原理

假设有集合 A = {a1,a2....an}

分配一个m个bits大小的vector,初始所有bit都设为0,然后选择k个独立的hash函数h1,h2,h3....hk,值域为{1....m}。

对于每个 [公式] , h1(a).....hk(a)分别对应的bit设为1。

然后在查询b是否存在于bloom filter的时候,通过检查h1(b)....hk(b)的bit,如果任意一个bit为0,则b肯定不存在于集合A中。否则我们可以判断b可能存在于集合A中,存在一定的判断错误率,即False Positive。

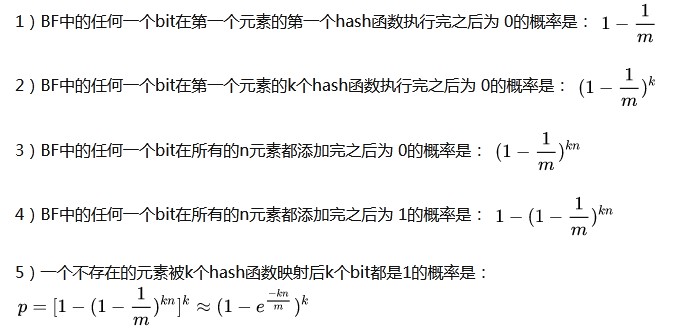

当往一个m bits大小的bf插入n个key后,误判率怎么计算?

False Positive概率为:

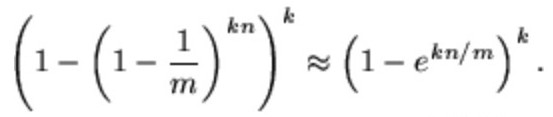

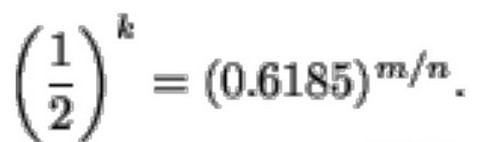

对右边求导,最小值成立条件为:

在m,n固定的情况下,误判率的取值取决于k,肯定有一个k的取值使得p的值最小,这就是k的最优值。

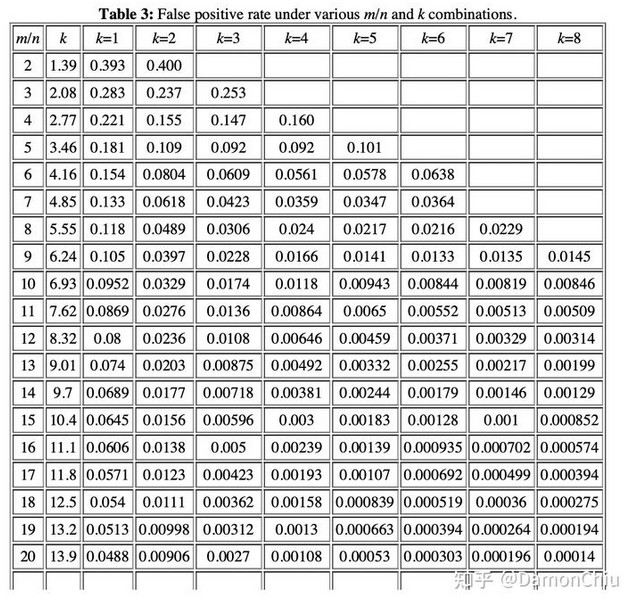

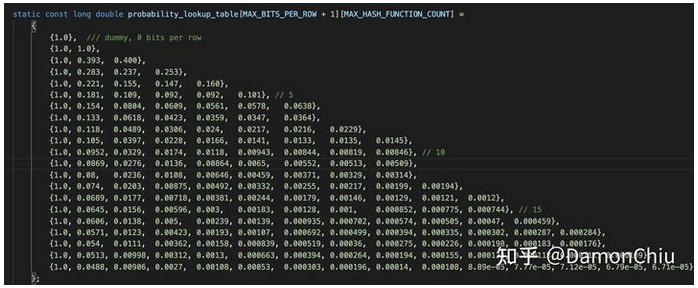

如下表格就展示了不同m/n和k的误判率

这也是src/Interpreters/BloomFilterHash.h的 calculationBestPractices函数中的lookup table的数学原理。calculationBestPractices的作用就是根据创建bloom_filter的时候根据用户传入的误判率的大小反推出该bf的最佳k和m/n取值。其中m/n即bits _ per_ row, k即hash function count。

CK中的应用

Bloom Filter 数据结构的实现

核心是一个Uint64类型的Vector + 若干个 Hash Function

- using UnderType = UInt64;

- using Container = std::vector<UnderType>;

-

- size_t size;

- size_t hashes;

- size_t seed;

- size_t words;

- Container filter;

构建bloom filter的三个关键参数:

bf的大小(in bytes),映射的哈希函数个数和生成哈希函数的random seed。

- struct BloomFilterParameters

- {

- BloomFilterParameters(size_t filter_size_, size_t filter_hashes_, size_t seed_);

-

- /// size of filter in bytes.

- size_t filter_size;

- /// number of used hash functions.

- size_t filter_hashes;

- /// random seed for hash functions generation.

- size_t seed;

- };

words 表示bf存储的元素个数

- BloomFilter::BloomFilter(size_t size_, size_t hashes_, size_t seed_)

- : size(size_), hashes(hashes_), seed(seed_), words((size + sizeof(UnderType) - 1) / sizeof(UnderType)), filter(words, 0)

- {

- assert(size != 0);

- assert(hashes != 0);

- }

- bool BloomFilter::find(const char * data, size_t len)

- {

- size_t hash1 = CityHash_v1_0_2::CityHash64WithSeed(data, len, seed);

- size_t hash2 = CityHash_v1_0_2::CityHash64WithSeed(data, len, SEED_GEN_A * seed + SEED_GEN_B);

-

- for (size_t i = 0; i < hashes; ++i)

- {

- size_t pos = (hash1 + i * hash2 + i * i) % (8 * size);

- if (!(filter[pos / (8 * sizeof(UnderType))] & (1ULL << (pos % (8 * sizeof(UnderType))))))

- return false;

- }

- return true;

- }

-

- void BloomFilter::add(const char * data, size_t len)

- {

- size_t hash1 = CityHash_v1_0_2::CityHash64WithSeed(data, len, seed);

- size_t hash2 = CityHash_v1_0_2::CityHash64WithSeed(data, len, SEED_GEN_A * seed + SEED_GEN_B);

-

- for (size_t i = 0; i < hashes; ++i)

- {

- size_t pos = (hash1 + i * hash2 + i * i) % (8 * size);

- filter[pos / (8 * sizeof(UnderType))] |= (1ULL << (pos % (8 * sizeof(UnderType))));

- }

- }

从上述函数可以看出Hash函数映射的规则为:

先计算出元素经过hash映射后bit的偏移量,计算规则为:

先由cityhash64生成两个hash值:hash1,hash2;

bit 偏移量 = (hash1 + i * hash2 + i * i) ,然后对bitmap vector的bit大小取模

然后再计算出在Vector中word的偏移量:pos /(8*sizeof(UnderType))

然后该偏移量对应的值与 `(1ULL << (pos % (8 * sizeof(UnderType))` 做异或操作

总结起来就是:每个word包含64bit,先定位到word在Vector中的偏移量,再通过|=操作来修改word内bit的01分布情况。

而addHashWithSeed与findHashWithSeed 方法类似,只是计算bit偏移量的方式略有不同。

对CityHash感兴趣的可以参考:

https://link.zhihu.com/?target=h ... s/121017-slides.pdf

Skipping Index中的应用

- skipping index 中的ngrambf_v1, tokenbf_v1, bloom_filter类型分别都是创建一个bloom filter的索引,核心思想是对通过在内存中维护的Bitmap来对equals/like/has/in等函数进行data block的查询过滤。

-

- registerCreator("ngrambf_v1", bloomFilterIndexCreator);

- registerValidator("ngrambf_v1", bloomFilterIndexValidator);

-

- registerCreator("tokenbf_v1", bloomFilterIndexCreator);

- registerValidator("tokenbf_v1", bloomFilterIndexValidator);

-

- registerCreator("bloom_filter", bloomFilterIndexCreatorNew);

- registerValidator("bloom_filter", bloomFilterIndexValidatorNew);

通常用于字符串/number相关函数的时候会涉及到这部分skipping index的优化:

例如用于ngram的模糊匹配优化:

-

- CREATE TABLE bloom_filter_idx

- (

- k UInt64,

- s String,

- INDEX bf (s, lower(s)) TYPE ngrambf_v1(3, 512, 2, 0) GRANULARITY 1

- ) ENGINE = MergeTree()

- ORDER BY k

- SETTINGS index_granularity = 2;

-

- INSERT INTO bloom_filter_idx VALUES

- (0, 'ClickHouse - столбцовая система управления базами данных (СУБД)'),

- (1, 'ClickHouse is a column-oriented database management system (DBMS)'),

- (2, 'column-oriented database management system'),

- (3, 'columns'),

- (4, 'какая-то строка'),

- (5, 'еще строка'),

- (6, 'some string'),

- (7, 'another string'),

- (8, 'computer science'),

- (9, 'abra'),

- (10, 'cadabra'),

- (11, 'crabacadabra'),

- (12, 'crab'),

- (13, 'basement'),

- (14, 'abracadabra'),

- (15, 'cadabraabra')

-

- SELECT * FROM bloom_filter_idx WHERE multiSearchAny(s, ['base', 'seme', 'gement'])

例如创建了一个bloom filter的大小为512bytes,三个hash function的tokenbf_v1 skipping index表,专门用于hasToken函数的优化。

- CREATE TABLE bloom_filter

- (

- id UInt64,

- s String,

- INDEX tok_bf (s, lower(s)) TYPE tokenbf_v1(512, 3, 0) GRANULARITY 1

- ) ENGINE = MergeTree() ORDER BY id SETTINGS index_granularity = 8;

-

- insert into bloom_filter select number, 'yyy,uuu' from numbers(1024);

- insert into bloom_filter select number+2000, 'abc,def,zzz' from numbers(8);

- insert into bloom_filter select number+3000, 'yyy,uuu' from numbers(1024);

- insert into bloom_filter select number+3000, 'abcdefzzz' from numbers(1024);

-

-

- SELECT max(id) FROM bloom_filter WHERE hasToken(s, 'abc');

- SELECT max(id) FROM bloom_filter WHERE hasToken(s, 'def');

- SELECT max(id) FROM bloom_filter WHERE hasToken(s, 'zzz');

例如创建一个false positive为0.0.1的bloom filter专门用于UUID的查询优化。

- CREATE TABLE 01154_test (x UUID, INDEX ix_x x TYPE bloom_filter(0.01) GRANULARITY 1) ENGINE = MergeTree() ORDER BY x SETTINGS index_granularity=8192;

- INSERT INTO 01154_test VALUES (toUUID('00000000-0000-0000-0000-000000000001')), (toUUID('00000000-0000-0000-0000-000000000002')), (toUUID('00000000-0000-0000-0000-000000000003'));

- SELECT x FROM 01154_test WHERE x = toUUID('00000000-0000-0000-0000-000000000001');

- SELECT x FROM 01154_test WHERE x IN (toUUID('00000000-0000-0000-0000-000000000001'), toUUID('00000000-0000-0000-0000-000000000002'));

- DROP TABLE 01154_test;

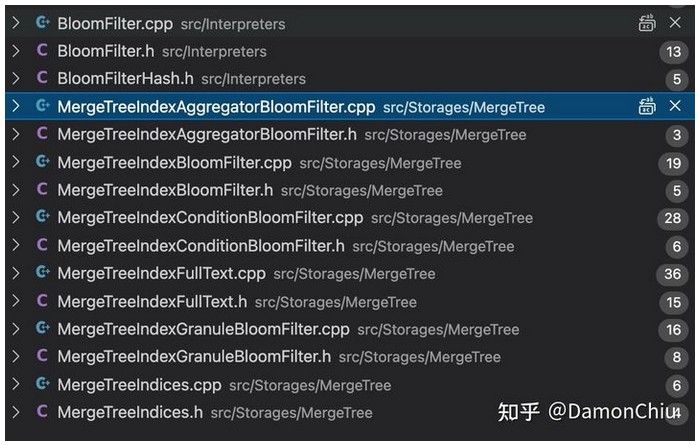

两种Bloom Filter MergeTree Index

- ngrambf 与 tokenbf TYPE skipping index

实现:ClickHouse/src/Storages/MergeTree/MergeTreeIndexFullText.cpp

index类型为MergeTreeIndexFullText,不同点在于tokenizer的实现不同,其中tokenbf的tokenizer的nextInColumn方法应用了SSE4.2的SIMD指令来加速字符串的cmp等操作,加速长字符串的分词。有兴趣可以参考

https://link.zhihu.com/?target=h ... trlen_using_sse_4.2

- // ngrambf

- auto tokenizer = std::make_unique<NgramTokenExtractor>(n);

- // tokenbf

- auto tokenizer = std::make_unique<SplitTokenExtractor>();

-

-

- std::make_shared<MergeTreeIndexFullText>(index, params, std::move(tokenizer)

- bloom_filter TYPE skipping index

- 实现:/ClickHouse/src/Storages/MergeTree/MergeTreeIndexBloomFilter.cpp

-

- index 类型为 MergeTreeIndexBloomFilter

-

- MergeTreeIndexPtr bloomFilterIndexCreatorNew(

- const IndexDescription & index)

- {

- double max_conflict_probability = 0.025;

-

- if (!index.arguments.empty())

- {

- const auto & argument = index.arguments[0];

- max_conflict_probability = std::min(Float64(1), std::max(argument.safeGet<Float64>(), Float64(0)));

- }

-

- const auto & bits_per_row_and_size_of_hash_functions = BloomFilterHash::calculationBestPractices(max_conflict_probability);

-

- return std::make_shared<MergeTreeIndexBloomFilter>(

- index, bits_per_row_and_size_of_hash_functions.first, bits_per_row_and_size_of_hash_functions.second);

- }

根据false positive的概率来决定产生每一行的bit大小和hash function的大小。具体生成逻辑参考: calculationBestPractices方法,该方法背后的数学原理参考:

https://link.zhihu.com/?target=h ... ry-cache/node8.html

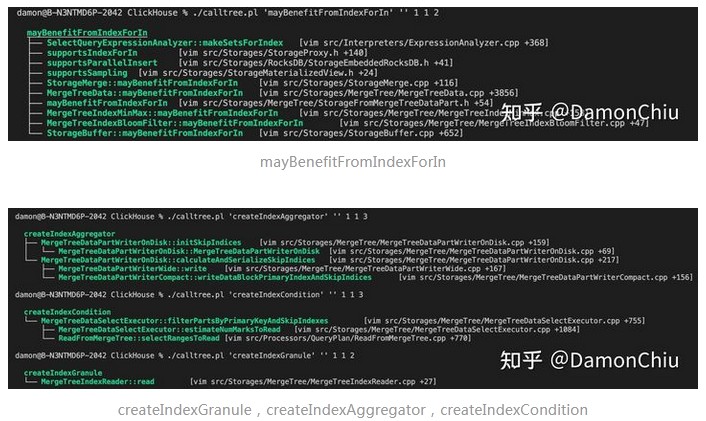

两种bloom filter index都继承自 IMergeTreeIndex,都需要重写父类的以下纯虚方法:

- /// Checks whether the column is in data skipping index.

- virtual bool mayBenefitFromIndexForIn(const ASTPtr & node) const = 0;

-

- virtual MergeTreeIndexGranulePtr createIndexGranule() const = 0;

-

- virtual MergeTreeIndexAggregatorPtr createIndexAggregator() const = 0;

-

- virtual MergeTreeIndexConditionPtr createIndexCondition(

- const SelectQueryInfo & query_info, ContextPtr context) const = 0;

这些函数又在什么地方会使用到呢?

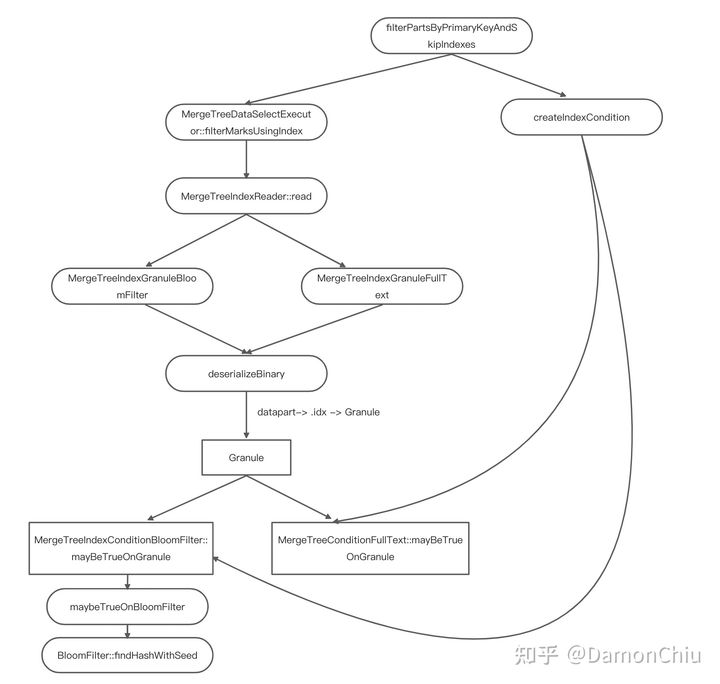

bf的读取

Bloom Filter 用于Skipping Index进一步过滤PK过滤后的Mark Range,每个datapart的skipping idx的bf信息都是存储在内存数据扫描的基本单元——Granule中,每个Granule都存储若干个bf。每次查询Query转化为AST后,再转化成一个逆波兰表达式,获取谓词选择的列,再去判断哪些mark对应的granule中的BF可能包含所要查询的列数据,从而来进一步过滤mark ranges, 进而得知要从datapart的哪些mark ranges去捞block数据。

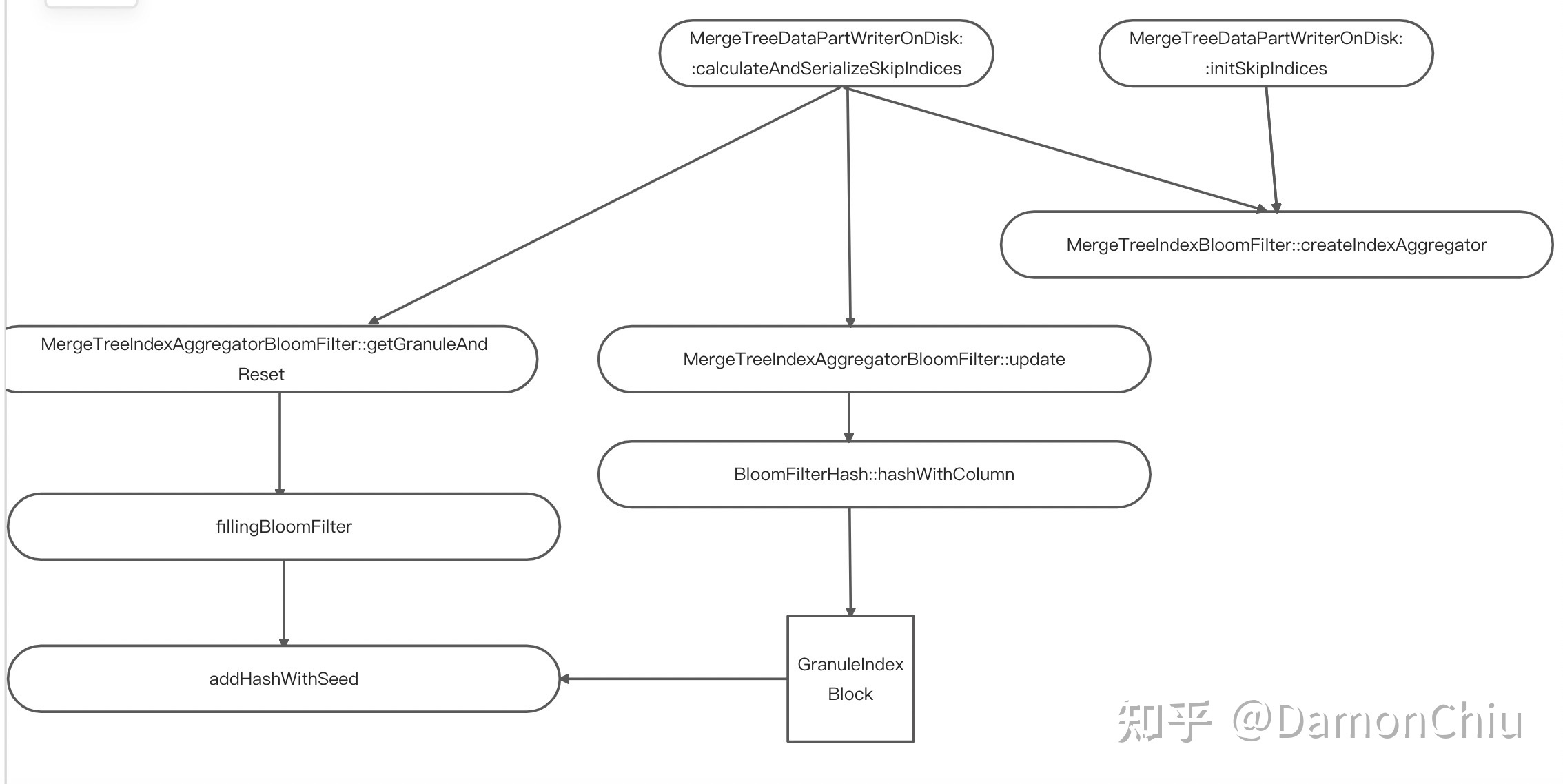

bf的写入

写block的时候,把bf指定的列对应的数据hash到bf中

作者:DamonChiu

来源:https://zhuanlan.zhihu.com/p/395152159

最新经典文章,欢迎关注公众号

|

/2

/2