Continuing from the previous post:

Great content, not to be missed.

Listing 17. Sqoop import from the DB2 sample database into HDFS

```
# very much the same as above, just a different jdbc connection
# and different table name

sqoop import --driver com.ibm.db2.jcc.DB2Driver \
    --connect "jdbc:db2://192.168.1.131:50001/sample" \
    --table staff --username db2inst1 \
    --password db2inst1 -m 1

# Here is another example
# in this case set the sqoop default schema to be different from
# the user login schema

sqoop import --driver com.ibm.db2.jcc.DB2Driver \
    --connect "jdbc:db2://192.168.1.3:50001/sample:currentSchema=DB2INST1;" \
    --table helloworld \
    --target-dir "/user/cloudera/sqoopin2" \
    --username marty \
    -P -m 1

# the schema name is CASE SENSITIVE
# the -P option prompts for a password that will not be visible in
# a "ps" listing
```

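Using Hive: joining Informix and DB2 data

Joining data from Informix to DB2 is an interesting use case. With just two small tables it isn't very exciting, but for multiple terabytes or petabytes of data it is a huge win.

There are two basic approaches to joining disparate data sources: leave the data at rest and use federation technology, or move the data to a single store and perform the join there. The economics and performance of Hadoop make moving the data into HDFS and doing the heavy lifting with MapReduce an easy choice. If you try to join data at rest using federation, network bandwidth limits become a fundamental obstacle. For more information on federation, see the Resources section.

Hive provides a subset of SQL that runs on the cluster. Hive does not provide transaction semantics, and it is not a replacement for Informix or DB2. If you have some heavy lifting to do, such as table joins, Hadoop is the tool of choice, even when some of your tables are small but you need to compute a nasty Cartesian product.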

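To use the Hive query language, HiveQL (a subset of SQL), you need table metadata. You can define the metadata against existing files in HDFS. Sqoop provides a convenient shortcut with the create-hive-table option.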
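A sketch of what "defining metadata against existing files" means in practice: Hive stores only the table definition and applies it to the delimited text when a query reads the files (often called schema on read). The Python below models that for a single line, using a column list modeled on the `staff` table in Listing 18. The names `STAFF_SCHEMA`, `CASTS`, and `read_row` are hypothetical, and the type handling is a simplification, not Hive's actual SerDe code. Numeric fields that fail to parse come back as None, which mirrors how the Sqoop text `null` in the `comm` column shows up as `NULL` in Hive's output.

```python
# Hypothetical column list mirroring the staff DDL in Listing 18: (name, Hive type)
STAFF_SCHEMA = [
    ("id", "int"),
    ("name", "string"),
    ("dept", "string"),
    ("job", "string"),
    ("years", "string"),
    ("salary", "float"),
    ("comm", "float"),
]

# Converters for the handful of types used here (a simplification of Hive's types)
CASTS = {"int": int, "float": float, "string": str}


def read_row(line, schema, delimiter=","):
    """Apply table metadata to one delimited text line at read time."""
    fields = line.rstrip("\n").split(delimiter)
    row = {}
    for i, (name, hive_type) in enumerate(schema):
        if i >= len(fields):
            row[name] = None  # missing trailing fields read as NULL
            continue
        try:
            row[name] = CASTS[hive_type](fields[i])
        except ValueError:
            # e.g. the literal text "null" written by Sqoop into a float column
            row[name] = None
    return row  # extra fields beyond the schema are simply ignored
```

The data files themselves are never rewritten; dropping an external table and redefining it with different columns only changes how the same bytes are interpreted.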

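MySQL users should feel free to adapt the examples in Listing 18. Joining MySQL, or any other relational database tables, to large spreadsheets would be an interesting exercise.

Listing 18. Joining the informix.customer table to the db2.staff table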

```
# import the customer table into Hive
$ sqoop import --driver com.informix.jdbc.IfxDriver \
    --connect \
    "jdbc:informix-sqli://myhost:54321/stores_demo:informixserver=ifx;user=me;password=you" \
    --table customer

# now tell hive where to find the informix data

# to get to the hive command prompt just type in hive

$ hive
Hive history file=/tmp/cloudera/yada_yada_log123.txt
hive>

# here is the hiveql you need to create the tables
# using a file is easier than typing

create external table customer (
    cn int,
    fname string,
    lname string,
    company string,
    addr1 string,
    addr2 string,
    city string,
    state string,
    zip string,
    phone string)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
LOCATION '/user/cloudera/customer'
;

# we already imported the db2 staff table above

# now tell hive where to find the db2 data
create external table staff (
    id int,
    name string,
    dept string,
    job string,
    years string,
    salary float,
    comm float)
ROW FORMAT DELIMITED FIELDS TERMINATED BY ','
LOCATION '/user/cloudera/staff'
;

# you can put the commands in a file
# and execute them as follows:

$ hive -f hivestaff
Hive history file=/tmp/cloudera/hive_job_log_cloudera_201208101502_2140728119.txt
OK
Time taken: 3.247 seconds
OK
10 Sanders 20 Mgr 7 98357.5 NULL
20 Pernal 20 Sales 8 78171.25 612.45
30 Marenghi 38 Mgr 5 77506.75 NULL
40 O'Brien 38 Sales 6 78006.0 846.55
50 Hanes 15 Mgr 10 80
... lines deleted

# now for the join we've all been waiting for :-)

# this is a simple case, Hadoop can scale well into the petabyte range!

$ hive
Hive history file=/tmp/cloudera/hive_job_log_cloudera_201208101548_497937669.txt
hive> select customer.cn, staff.name,
    > customer.addr1, customer.city, customer.phone
    > from staff join customer
    > on ( staff.id = customer.cn );
Total MapReduce jobs = 1
Launching Job 1 out of 1
Number of reduce tasks not specified. Estimated from input data size: 1
In order to change the average load for a reducer (in bytes):
  set hive.exec.reducers.bytes.per.reducer=number
In order to limit the maximum number of reducers:
  set hive.exec.reducers.max=number
In order to set a constant number of reducers:
  set mapred.reduce.tasks=number
Starting Job = job_201208101425_0005, Tracking URL = http://0.0.0.0:50030/jobdetails.jsp?jobid=job_201208101425_0005
Kill Command = /usr/lib/hadoop/bin/hadoop job -Dmapred.job.tracker=0.0.0.0:8021 -kill job_201208101425_0005
2012-08-10 15:49:07,538 Stage-1 map = 0%, reduce = 0%
2012-08-10 15:49:11,569 Stage-1 map = 50%, reduce = 0%
2012-08-10 15:49:12,574 Stage-1 map = 100%, reduce = 0%
2012-08-10 15:49:19,686 Stage-1 map = 100%, reduce = 33%
2012-08-10 15:49:20,692 Stage-1 map = 100%, reduce = 100%
Ended Job = job_201208101425_0005
OK
110 Ngan 520 Topaz Way Redwood City 415-743-3611
120 Naughton 6627 N. 17th Way Phoenix 602-265-8754
Time taken: 22.764 seconds
```

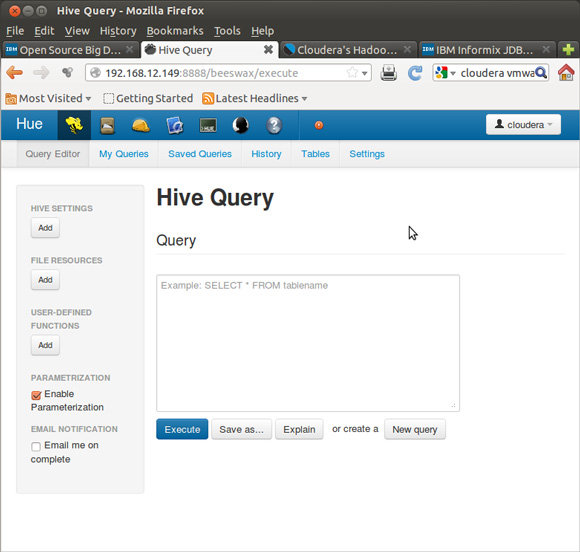

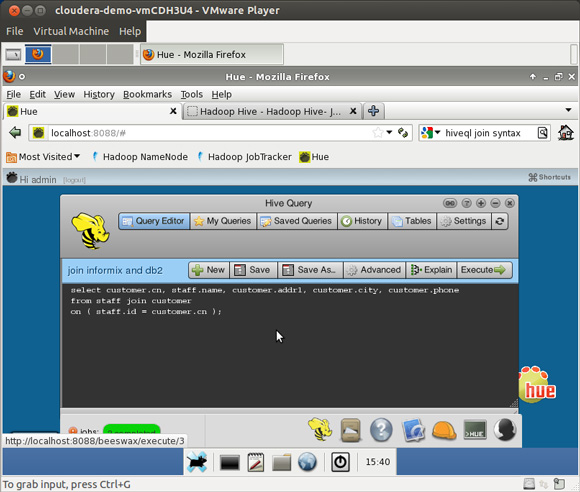

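If you use Hue for a graphical browser interface, it looks even nicer, as shown in Figures 9, 10, and 11.

Figure 9. Hue Beeswax GUI for Hive in CDH4, viewing a Hiveql query

Figure 10. Hue Beeswax GUI for Hive, viewing a Hiveql query

Figure 11. Hue Beeswax graphical browser, viewing the Informix-DB2 join results

Using Pig: joining Informix and DB2 data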

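Pig is a procedural language. Just like Hive, it generates MapReduce code under the covers. Hadoop's ease of use will continue to improve as more projects become available. As much as some of us really like the command line, there are several graphical user interfaces that work well with Hadoop.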
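For intuition about what that generated MapReduce code does for a join, here is a toy, single-process Python model of a reduce-side equi-join: the map step tags every record with its source, the shuffle groups the tagged records by join key, and the reduce step pairs every left record with every right record for the same key. It is a simplified illustration of the general technique, not the code Hive or Pig actually emit, and the function names are hypothetical.

```python
from collections import defaultdict
from itertools import product


def map_phase(records, key_index, tag):
    """Map: emit (join key, (source tag, record)) for every input record."""
    for rec in records:
        yield rec[key_index], (tag, rec)


def reduce_side_join(left, left_key, right, right_key):
    """Toy, single-process model of a MapReduce reduce-side equi-join."""
    # Shuffle: group all tagged map output by join key
    groups = defaultdict(list)
    for key, value in map_phase(left, left_key, "L"):
        groups[key].append(value)
    for key, value in map_phase(right, right_key, "R"):
        groups[key].append(value)

    # Reduce: per key, pair every left record with every right record
    joined = []
    for key in sorted(groups):
        lefts = [rec for tag, rec in groups[key] if tag == "L"]
        rights = [rec for tag, rec in groups[key] if tag == "R"]
        joined.extend(a + b for a, b in product(lefts, rights))
    return joined
```

Keys that appear on only one side produce no output, which is inner-join behavior; on a real cluster each key group is handled by whichever reducer the key hashes to.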

The Pig code shown in Listing 19 joins the customer table and the staff table from the previous example.

Listing 19. Pig example joining an Informix table to a DB2 table

```
$ pig
grunt> staffdb2 = load 'staff' using PigStorage(',')
>> as ( id, name, dept, job, years, salary, comm );
grunt> custifx2 = load 'customer' using PigStorage(',') as
>> (cn, fname, lname, company, addr1, addr2, city, state, zip, phone)
>> ;
grunt> joined = join custifx2 by cn, staffdb2 by id;

# to make pig generate a result set use the dump command
# no work has happened up till now

grunt> dump joined;
2012-08-11 21:24:51,848 [main] INFO org.apache.pig.tools.pigstats.ScriptState - Pig features used in the script: HASH_JOIN
2012-08-11 21:24:51,848 [main] INFO org.apache.pig.backend.hadoop.executionengine.HExecutionEngine - pig.usenewlogicalplan is set to true. New logical plan will be used.

HadoopVersion PigVersion UserId StartedAt FinishedAt Features
0.20.2-cdh3u4 0.8.1-cdh3u4 cloudera 2012-08-11 21:24:51 2012-08-11 21:25:19 HASH_JOIN

Success!

Job Stats (time in seconds):
JobId Maps Reduces MaxMapTime MinMapTIme AvgMapTime MaxReduceTime MinReduceTime AvgReduceTime Alias Feature Outputs
job_201208111415_0006 2 1 8 8 8 10 10 10 custifx,joined,staffdb2 HASH_JOIN hdfs://0.0.0.0/tmp/temp1785920264/tmp-388629360,

Input(s):
Successfully read 35 records from: "hdfs://0.0.0.0/user/cloudera/staff"
Successfully read 28 records from: "hdfs://0.0.0.0/user/cloudera/customer"

Output(s):
Successfully stored 2 records (377 bytes) in: "hdfs://0.0.0.0/tmp/temp1785920264/tmp-388629360"

Counters:
Total records written : 2
Total bytes written : 377
Spillable Memory Manager spill count : 0
Total bags proactively spilled: 0
Total records proactively spilled: 0

Job DAG:
job_201208111415_0006

2012-08-11 21:25:19,145 [main] INFO org.apache.pig.backend.hadoop.executionengine.mapReduceLayer.MapReduceLauncher - Success!
2012-08-11 21:25:19,149 [main] INFO org.apache.hadoop.mapreduce.lib.input.FileInputFormat - Total input paths to process : 1
2012-08-11 21:25:19,149 [main] INFO org.apache.pig.backend.hadoop.executionengine.util.MapRedUtil - Total input paths to process : 1
(110,Roy ,Jaeger ,AA Athletics ,520 Topaz Way ,null,Redwood City ,CA,94062,415-743-3611 ,110,Ngan,15,Clerk,5,42508.20,206.60)
(120,Fred ,Jewell ,Century Pro Shop ,6627 N. 17th Way ,null,Phoenix ,AZ,85016,602-265-8754 ,120,Naughton,38,Clerk,null,42954.75,180.00)
```

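How do I choose: Java, Hive, or Pig?

You have multiple options for programming Hadoop, and it's best to look at the use case and choose the right tool for the job. You are not limited to working on relational data, but this article focuses on how well Informix, DB2, and Hadoop work together. Writing several hundred lines of Java to implement a relational-style hash join is a complete waste of time, because this Hadoop MapReduce algorithm is already available. How do you choose? It's a matter of personal preference. Some people like coding set operations in SQL. Some people prefer procedural code. You should choose the language that makes you the most productive. If you have multiple relational database systems and want to consolidate all the data with great performance at a low price point, Hadoop, MapReduce, Hive, and Pig are ready to help.

Don't delete your data: rolling partitions from Informix into HDFS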

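Most modern relational databases can partition data. A common use case is to partition by time period. A fixed window of data is stored, for example a rolling 18-month interval, after which the data is archived. The ability to detach partitions is very powerful. But once a partition is detached, what do you do with the data?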
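The rolling-window rule is simple date arithmetic. A minimal Python sketch, assuming one partition per calendar month identified by its first day and an 18-month window that includes the current month: it lists the partitions that have aged out and would be candidates to detach and copy into HDFS instead of deleting them. The function names are hypothetical, and the detach and copy steps themselves are not shown.

```python
from datetime import date


def month_index(d: date) -> int:
    """Months since year 0, so month arithmetic becomes integer arithmetic."""
    return d.year * 12 + (d.month - 1)


def partitions_to_archive(partitions, today: date, window_months: int = 18):
    """Return the monthly partitions that fall outside the rolling window.

    The window covers the current month plus the previous
    (window_months - 1) months; anything older is returned, oldest first.
    """
    cutoff = month_index(today) - window_months + 1
    return sorted(p for p in partitions if month_index(p) < cutoff)
```

For example, on 2012-08-15 the 18-month window runs from March 2011 through August 2012, so the January and February 2011 partitions are the ones to roll out.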

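Archiving old data to tape is a very expensive way to throw it away. Once it is moved to a less accessible medium, the data is very rarely accessed unless there is a legal audit requirement. Hadoop provides a far better alternative.

Moving the archival data from the old partitions into Hadoop provides high-performance access at a much lower cost than keeping the data in the original transactional or data mart/data warehouse system. Data that is too old to have transactional value can still be very valuable to the organization for long-term analytics. The earlier Sqoop examples provide the basics for moving this data from a relational partition into HDFS.

Fuse - accessing HDFS files through a file system mount (NFS-style)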

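Informix, DB2, and flat-file data in HDFS can be accessed through a FUSE mount, much as you would use files on an NFS share, as shown in Listing 20. This gives you ordinary command-line file operations without going through the "hadoop fs -yadayada" interface. From a technical use-case standpoint, NFS-style access is severely limited in a big data environment, but these examples are aimed at developers, and the data is not very large.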
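Because the mount makes HDFS look like a local directory, any program that reads files works unchanged. A minimal Python sketch, assuming the `fusemnt` mount point and the `orders` part file from Listing 20: it reads the file with the ordinary `open()` call and no Hadoop client library. The helper names and the default path are hypothetical; the `csv` module is used only to split Sqoop's comma-delimited, unquoted text output.

```python
import csv
import os

# Assumed path: the FUSE mount point and part file used in Listing 20
DEFAULT_PART_FILE = os.path.join("fusemnt", "user", "cloudera", "orders", "part-m-00001")


def parse_orders(lines):
    """Split comma-delimited Sqoop output into fields, trimming CHAR padding."""
    return [[field.strip() for field in row] for row in csv.reader(lines)]


def read_orders(path=DEFAULT_PART_FILE):
    """Read an HDFS file through the FUSE mount with ordinary file I/O."""
    with open(path, newline="") as f:
        return parse_orders(f)
```

After mounting, `read_orders()` returns one list of fields per order row.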

Listing 20. Setting up Fuse - accessing your HDFS data through a mount point

```
# this is for CDH4, the CDH3 image doesn't have fuse installed...
$ mkdir fusemnt
$ sudo hadoop-fuse-dfs dfs://localhost:8020 fusemnt/
INFO fuse_options.c:162 Adding FUSE arg fusemnt/
$ ls fusemnt
tmp user var
$ ls fusemnt/user
cloudera hive
$ ls fusemnt/user/cloudera
customer DS.txt.gz HF.out HF.txt orders staff
$ cat fusemnt/user/cloudera/orders/part-m-00001
1007,2008-05-31,117,null,n,278693 ,2008-06-05,125.90,25.20,null
1008,2008-06-07,110,closed Monday ,y,LZ230 ,2008-07-06,45.60,13.80,2008-07-21
1009,2008-06-14,111,next door to grocery ,n,4745 ,2008-06-21,20.40,10.00,2008-08-21
1010,2008-06-17,115,deliver 776 King St. if no answer ,n,429Q ,2008-06-29,40.60,12.30,2008-08-22
1011,2008-06-18,104,express ,n,B77897 ,2008-07-03,10.40,5.00,2008-08-29
1012,2008-06-18,117,null,n,278701 ,2008-06-29,70.80,14.20,null
```

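Flume - creating a load-ready file

Flume next generation (also known as flume-ng) is a high-speed parallel loader. Databases have high-speed loaders too, so how do the two work well together? The relational use case for Flume-ng is to create a load-ready file, locally or remotely, so that the relational server can use its own high-speed loader. Yes, this functionality overlaps with Sqoop, but the script shown in Listing 21 was created at a customer's request specifically for this style of database load.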
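To make the source, channel, and sink wiring in the configuration in Listing 21 easier to follow, here is a toy, single-threaded Python model of it: an exec-style source turns each output line into an event, a memory channel (a `queue.Queue`) buffers the events, and a file_roll-style sink drains them into a flat file. This is not Flume's API; the function names are hypothetical, and a real agent runs the source and sink concurrently and rolls to a new file periodically.

```python
import io
import queue


def exec_source(lines, channel):
    """Toy exec source: turn each line of command output into an event."""
    for line in lines:
        channel.put(line.rstrip("\n"))
    channel.put(None)  # sentinel: the command's output is exhausted


def file_roll_sink(channel, out):
    """Toy file_roll sink: drain events from the channel into a flat file."""
    written = 0
    while True:
        event = channel.get()
        if event is None:
            return written
        out.write(event + "\n")
        written += 1


def run_agent(lines):
    """Wire source -> memory channel -> sink the way agent1 is configured."""
    channel = queue.Queue()  # stands in for the memory channel ch1
    exec_source(lines, channel)  # runs to completion first; real Flume is concurrent
    out = io.StringIO()  # stands in for a rolled file under /tmp
    file_roll_sink(channel, out)
    return out.getvalue()
```

The output is one event per line, which is exactly the shape a database bulk loader expects from a delimited file.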

Listing 21. Exporting HDFS data to a flat file for loading by a database

```
$ sudo yum install flume-ng

$ cat flumeconf/hdfs2dbloadfile.conf
#
# started with example from flume-ng documentation
# modified to do hdfs source to file sink
#

# Define a memory channel called ch1 on agent1
agent1.channels.ch1.type = memory

# Define an exec source called exec-source1 on agent1 and connect it to
# channel ch1. The exec source runs the command below and turns each
# line of its output into an event.
agent1.sources.exec-source1.channels = ch1
agent1.sources.exec-source1.type = exec
agent1.sources.exec-source1.command = hadoop fs -cat /user/cloudera/orders/part-m-00001
# this also works for all the files in the hdfs directory
# agent1.sources.exec-source1.command = hadoop fs -cat /user/cloudera/tsortin/*
# bind/port appear to be carried over from a network-source example;
# an exec source does not listen on a port, so these two settings
# are most likely ignored
agent1.sources.exec-source1.bind = 0.0.0.0
agent1.sources.exec-source1.port = 31313

# Define a file_roll sink that writes the events to files in /tmp
# and connect it to the other end of the same channel.
agent1.sinks.fileroll-sink1.channel = ch1
agent1.sinks.fileroll-sink1.type = FILE_ROLL
agent1.sinks.fileroll-sink1.sink.directory = /tmp

# Finally, now that we've defined all of our components, tell
# agent1 which ones we want to activate.
agent1.channels = ch1
agent1.sources = exec-source1
agent1.sinks = fileroll-sink1

# now time to run the script

$ flume-ng agent --conf ./flumeconf/ -f ./flumeconf/hdfs2dbloadfile.conf -n agent1

# here is the output file
# don't forget to stop flume - it will keep polling by default and generate
# more files

$ cat /tmp/1344780561160-1
1007,2008-05-31,117,null,n,278693 ,2008-06-05,125.90,25.20,null
1008,2008-06-07,110,closed Monday ,y,LZ230 ,2008-07-06,45.60,13.80,2008-07-21
1009,2008-06-14,111,next door to ,n,4745 ,2008-06-21,20.40,10.00,2008-08-21
1010,2008-06-17,115,deliver 776 King St. if no answer ,n,429Q ,2008-06-29,40.60,12.30,2008-08-22
1011,2008-06-18,104,express ,n,B77897 ,2008-07-03,10.40,5.00,2008-08-29
1012,2008-06-18,117,null,n,278701 ,2008-06-29,70.80,14.20,null

# jump over to dbaccess and use the greatest
# data loader in informix: the external table
# external tables were actually developed for
# informix XPS back in the 1996 timeframe
# and are now available in many servers

# point datafiles at the Flume output file
# (here it is assumed to have been copied to /tmp/myfoo)
drop table eorders;
create external table eorders
(on char(10),
mydate char(18),
foo char(18),
bar char(18),
f4 char(18),
f5 char(18),
f6 char(18),
f7 char(18),
f8 char(18),
f9 char(18)
)
using (datafiles ("disk:/tmp/myfoo" ) , delimiter ",");
select * from eorders;
```

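Oozie - adding workflow for multiple jobs

Oozie chains multiple Hadoop jobs together. There is a nice set of examples included with Oozie, and they are used in the code set shown in Listing 22.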

Listing 22. Job control with Oozie

```
# This sample is for CDH3

# untar the examples

# CDH4
$ tar -zxvf /usr/share/doc/oozie-3.1.3+154/oozie-examples.tar.gz

# CDH3
$ tar -zxvf /usr/share/doc/oozie-2.3.2+27.19/oozie-examples.tar.gz

# cd to the directory where the examples live
# you MUST put these jobs into the hdfs store to run them

$ hadoop fs -put examples examples

# start up the oozie server - you need to be the oozie user
# since the oozie user is a non-login id use the following su trick

# CDH4
$ sudo su - oozie -s /usr/lib/oozie/bin/oozie-sys.sh start

# CDH3
$ sudo su - oozie -s /usr/lib/oozie/bin/oozie-start.sh

# check the status
$ oozie admin -oozie http://localhost:11000/oozie -status
System mode: NORMAL

# some jar housekeeping so oozie can find what it needs

$ cp /usr/lib/sqoop/sqoop-1.3.0-cdh3u4.jar examples/apps/sqoop/lib/
$ cp /home/cloudera/Informix_JDBC_Driver/lib/ifxjdbc.jar examples/apps/sqoop/lib/
$ cp /home/cloudera/Informix_JDBC_Driver/lib/ifxjdbcx.jar examples/apps/sqoop/lib/

# edit the workflow.xml file to use your relational database:

#################################
<command> import
--driver com.informix.jdbc.IfxDriver
--connect jdbc:informix-sqli://192.168.1.143:54321/stores_demo:informixserver=ifx117
--table orders --username informix --password useyours
--target-dir /user/${wf:user()}/${examplesRoot}/output-data/sqoop --verbose</command>
#################################

# from the directory where you un-tarred the examples file do the following:
# (hrmr and hput appear to be shell aliases for
#  "hadoop fs -rmr" and "hadoop fs -put")

$ hrmr examples;hput examples examples

# now you can run your sqoop job by submitting it to oozie

$ oozie job -oozie http://localhost:11000/oozie -config \
    examples/apps/sqoop/job.properties -run

job: 0000000-120812115858174-oozie-oozi-W

# get the job status from the oozie server

$ oozie job -oozie http://localhost:11000/oozie -info 0000000-120812115858174-oozie-oozi-W
Job ID : 0000000-120812115858174-oozie-oozi-W
-----------------------------------------------------------------------
Workflow Name : sqoop-wf
App Path : hdfs://localhost:8020/user/cloudera/examples/apps/sqoop/workflow.xml
Status : SUCCEEDED
Run : 0
User : cloudera
Group : users
Created : 2012-08-12 16:05
Started : 2012-08-12 16:05
Last Modified : 2012-08-12 16:05
Ended : 2012-08-12 16:05

Actions
----------------------------------------------------------------------
ID Status Ext ID Ext Status Err Code
---------------------------------------------------------------------
0000000-120812115858174-oozie-oozi-W@sqoop-node OK job_201208120930_0005 SUCCEEDED -
--------------------------------------------------------------------

# how to kill a job may come in useful at some point

$ oozie job -oozie http://localhost:11000/oozie -kill 0000013-120812115858174-oozie-oozi-W

# job output will be in the file tree
# (hcat here appears to be a shell alias for "hadoop fs -cat")
$ hcat /user/cloudera/examples/output-data/sqoop/part-m-00003
1018,2008-07-10,121,SW corner of Biltmore Mall ,n,S22942 ,2008-07-13,70.50,20.00,2008-08-06
1019,2008-07-11,122,closed till noon Mondays ,n,Z55709 ,2008-07-16,90.00,23.00,2008-08-06
1020,2008-07-11,123,express ,n,W2286 ,2008-07-16,14.00,8.50,2008-09-20
1021,2008-07-23,124,ask for Elaine ,n,C3288 ,2008-07-25,40.00,12.00,2008-08-22
1022,2008-07-24,126,express ,n,W9925 ,2008-07-30,15.00,13.00,2008-09-02
1023,2008-07-24,127,no deliveries after 3 p.m. ,n,KF2961 ,2008-07-30,60.00,18.00,2008-08-22


# if you run into this error there is a good chance that your
# database lock file is owned by root
$ oozie job -oozie http://localhost:11000/oozie -config \
    examples/apps/sqoop/job.properties -run

Error: E0607 : E0607: Other error in operation [<openjpa-1.2.1-r752877:753278
fatal store error> org.apache.openjpa.persistence.RollbackException:
The transaction has been rolled back. See the nested exceptions for
details on the errors that occurred.], {1}

# fix this as follows
$ sudo chown oozie:oozie /var/lib/oozie/oozie-db/db.lck

# and restart the oozie server
$ sudo su - oozie -s /usr/lib/oozie/bin/oozie-stop.sh
$ sudo su - oozie -s /usr/lib/oozie/bin/oozie-start.sh
```

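HBase: a high-performance key-value store

HBase is a high-performance key-value store. If your use case requires scalability and only needs the database equivalent of auto-commit transactions, HBase may well be the right technology. HBase is not a database. The name is unfortunate, since to some people the term base implies database. It does an excellent job as a high-performance key-value store. There is some overlap between the functionality of HBase, Informix, DB2, and other relational databases. For ACID transactions, full SQL compliance, and multiple indexes, a traditional relational database is the obvious choice.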
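To make the key-value data model concrete, here is a toy, in-memory Python model of what Listing 23 shows: a row key maps to cells named family:qualifier, each cell keeps timestamped versions (the describe output reports VERSIONS => '3'), and only the column family has to exist up front, so new qualifiers can be added on any put. This is not the HBase client API; the class name is hypothetical and timestamps are a simple counter rather than milliseconds.

```python
import itertools


class ToyTable:
    """In-memory model of the HBase data model (NOT the HBase client API).

    row key -> "family:qualifier" -> list of (timestamp, value), newest first.
    At most `max_versions` versions are kept per cell, like VERSIONS => '3'.
    """

    def __init__(self, families, max_versions=3):
        self.families = set(families)
        self.max_versions = max_versions
        self.rows = {}
        self._clock = itertools.count(1)  # stand-in for HBase's millisecond timestamps

    def put(self, row, column, value):
        family = column.split(":", 1)[0]
        if family not in self.families:
            raise KeyError(f"unknown column family: {family}")
        versions = self.rows.setdefault(row, {}).setdefault(column, [])
        versions.insert(0, (next(self._clock), value))  # newest version first
        del versions[self.max_versions:]  # drop the oldest versions beyond the limit

    def get(self, row, column):
        """Return the newest value of one cell, or None if it does not exist."""
        versions = self.rows.get(row, {}).get(column)
        return versions[0][1] if versions else None

    def scan(self):
        """Yield (row, column, timestamp, value) for the newest version of each cell.

        Rows come back sorted by key, as an HBase scan returns them.
        """
        for row in sorted(self.rows):
            for column in sorted(self.rows[row]):
                timestamp, value = self.rows[row][column][0]
                yield row, column, timestamp, value
```

The only "schema" is the set of column families, which is why the number and duration cells in Listing 23 can be added without any ALTER TABLE.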

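This last code exercise is meant to give you basic familiarity with HBase. It is simple by design and in no way represents the full scope of HBase's functionality. Use the example to understand some of the basic capabilities of HBase. "HBase, The Definitive Guide" by Lars George is mandatory reading if you plan to implement or reject HBase for your particular use case.

This final example, shown in Listings 23 and 24, uses the REST interface provided with HBase to insert key-values into an HBase table. The test harness is curl-based.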
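The REST interface expects the row key, column name, and value to be base64-encoded inside a CellSet XML document. A minimal Python sketch that builds that body for one cell, encoding the exact bytes with no trailing newline (the `echo` versus `printf` issue that Listing 24 runs into); the function names are hypothetical, and only the standard library is used.

```python
import base64
from xml.sax.saxutils import quoteattr


def b64(text: str) -> str:
    """Base64-encode the exact UTF-8 bytes, with no trailing newline (like printf | base64)."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


def cellset_xml(row_key: str, column: str, value: str) -> str:
    """Build the XML body for inserting one cell through the HBase REST server."""
    return (
        f"<CellSet><Row key={quoteattr(b64(row_key))}>"
        f"<Cell column={quoteattr(b64(column))}>{b64(value)}</Cell>"
        "</Row></CellSet>"
    )
```

The returned string is what goes in the curl `-d` argument, posted with `Content-Type: text/xml` to the table URL on the REST server. Encoding `"RESTinsertedKey\n"` instead reproduces the stray `\x0A` that shows up in the scan output.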

Listing 23. Creating an HBase table and inserting a row

```
# enter the command line shell for hbase

$ hbase shell
HBase Shell; enter 'help<RETURN> for list of supported commands.
Type "exit<RETURN> to leave the HBase Shell
Version 0.90.6-cdh3u4, r, Mon May 7 13:14:00 PDT 2012

# create a table with a single column family

hbase(main):001:0> create 'mytable', 'mycolfamily'

# if you get errors from hbase you need to fix the
# network config

# here is a sample of the error:

ERROR: org.apache.hadoop.hbase.ZooKeeperConnectionException: HBase
is able to connect to ZooKeeper but the connection closes immediately.
This could be a sign that the server has too many connections
(30 is the default). Consider inspecting your ZK server logs for
that error and then make sure you are reusing HBaseConfiguration
as often as you can. See HTable's javadoc for more information.

# fix networking:

# add the eth0 interface to /etc/hosts with a hostname

$ sudo su -
# ifconfig | grep addr
eth0 Link encap:Ethernet HWaddr 00:0C:29:8C:C7:70
inet addr:192.168.1.134 Bcast:192.168.1.255 Mask:255.255.255.0
Interrupt:177 Base address:0x1400
inet addr:127.0.0.1 Mask:255.0.0.0
[root@myhost ~]# hostname myhost
[root@myhost ~]# echo "192.168.1.134 myhost" >> /etc/hosts
[root@myhost ~]# cd /etc/init.d

# now that the host and address are defined restart Hadoop

[root@myhost init.d]# for i in hadoop*
> do
> ./$i restart
> done

# now try table create again:

$ hbase shell
HBase Shell; enter 'help<RETURN> for list of supported commands.
Type "exit<RETURN> to leave the HBase Shell
Version 0.90.6-cdh3u4, r, Mon May 7 13:14:00 PDT 2012

hbase(main):001:0> create 'mytable' , 'mycolfamily'
0 row(s) in 1.0920 seconds

hbase(main):002:0>

# insert a row into the table you created
# use some simple telephone call log data
# Notice that mycolfamily can have multiple cells
# this is very troubling for DBAs at first, but
# you do get used to it

hbase(main):001:0> put 'mytable', 'key123', 'mycolfamily:number','6175551212'
0 row(s) in 0.5180 seconds
hbase(main):002:0> put 'mytable', 'key123', 'mycolfamily:duration','25'

# now describe and then scan the table

hbase(main):005:0> describe 'mytable'
DESCRIPTION ENABLED
{NAME => 'mytable', FAMILIES => [{NAME => 'mycolfam true
ily', BLOOMFILTER => 'NONE', REPLICATION_SCOPE => '
0', COMPRESSION => 'NONE', VERSIONS => '3', TTL =>
'2147483647', BLOCKSIZE => '65536', IN_MEMORY => 'f
alse', BLOCKCACHE => 'true'}]}
1 row(s) in 0.2250 seconds

# notice that timestamps are included

hbase(main):007:0> scan 'mytable'
ROW COLUMN+CELL
key123 column=mycolfamily:duration,
timestamp=1346868499125, value=25
key123 column=mycolfamily:number,
timestamp=1346868540850, value=6175551212
1 row(s) in 0.0250 seconds
```

Listing 24. Using the HBase REST interface

```
# HBase includes a REST server

$ hbase rest start -p 9393 &

# you get a bunch of messages....

# get the status of the HBase server

$ curl http://localhost:9393/status/cluster

# lots of output...
# many lines deleted...

mytable,,1346866763530.a00f443084f21c0eea4a075bbfdfc292.
stores=1
storefiless=0
storefileSizeMB=0
memstoreSizeMB=0
storefileIndexSizeMB=0

# now scan the contents of mytable
# (quote the URL so the shell does not try to expand the *)

$ curl 'http://localhost:9393/mytable/*'

# lines deleted
12/09/05 15:08:49 DEBUG client.HTable$ClientScanner:
Finished with scanning at REGION =>
# lines deleted
<?xml version="1.0" encoding="UTF-8" standalone="yes"?>
<CellSet><Row key="a2V5MTIz">
<Cell timestamp="1346868499125" column="bXljb2xmYW1pbHk6ZHVyYXRpb24=">MjU=</Cell>
<Cell timestamp="1346868540850" column="bXljb2xmYW1pbHk6bnVtYmVy">NjE3NTU1MTIxMg==</Cell>
<Cell timestamp="1346868425844" column="bXljb2xmYW1pbHk6bnVtYmVy">NjE3NTU1MTIxMg==</Cell>
</Row></CellSet>

# the values from the REST interface are base64 encoded
$ echo a2V5MTIz | base64 -d
key123
$ echo bXljb2xmYW1pbHk6bnVtYmVy | base64 -d
mycolfamily:number

# The table scan above gives the schema needed to insert into the HBase table

$ echo RESTinsertedKey | base64
UkVTVGluc2VydGVkS2V5Cg==

$ echo 7815551212 | base64
NzgxNTU1MTIxMgo=

# add a table entry with a key value of "RESTinsertedKey" and
# a phone number of "7815551212"

# note - curl is all on one line
$ curl -H "Content-Type: text/xml" -d '<CellSet><Row key="UkVTVGluc2VydGVkS2V5Cg=="><Cell column="bXljb2xmYW1pbHk6bnVtYmVy">NzgxNTU1MTIxMgo=</Cell></Row></CellSet>' http://192.168.1.134:9393/mytable/dummykey

12/09/05 15:52:34 DEBUG rest.RowResource: POST http://192.168.1.134:9393/mytable/dummykey
12/09/05 15:52:34 DEBUG rest.RowResource: PUT row=RESTinsertedKey\x0A,
families={(family=mycolfamily,
keyvalues=(RESTinsertedKey\x0A/mycolfamily:number/9223372036854775807/Put/vlen=11)}

# trust, but verify

hbase(main):002:0> scan 'mytable'
ROW COLUMN+CELL
RESTinsertedKey\x0A column=mycolfamily:number,timestamp=1346874754883,value=7815551212\x0A
key123 column=mycolfamily:duration, timestamp=1346868499125, value=25
key123 column=mycolfamily:number, timestamp=1346868540850, value=6175551212
2 row(s) in 0.5610 seconds

# notice the \x0A at the end of the key and value
# this is the newline generated by the "echo" command
# lets fix that

$ printf 8885551212 | base64
ODg4NTU1MTIxMg==

$ printf mykey | base64
bXlrZXk=

# note - curl statement is all on one line!
$ curl -H "Content-Type: text/xml" -d '<CellSet><Row key="bXlrZXk="><Cell column="bXljb2xmYW1pbHk6bnVtYmVy">ODg4NTU1MTIxMg==</Cell></Row></CellSet>' http://192.168.1.134:9393/mytable/dummykey

# trust but verify
hbase(main):001:0> scan 'mytable'
ROW COLUMN+CELL
RESTinsertedKey\x0A column=mycolfamily:number,timestamp=1346875811168,value=7815551212\x0A
key123 column=mycolfamily:duration, timestamp=1346868499125, value=25
key123 column=mycolfamily:number, timestamp=1346868540850, value=6175551212
mykey column=mycolfamily:number, timestamp=1346877875638, value=8885551212
3 row(s) in 0.6100 seconds
```

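Conclusion

Wow, you made it to the end, well done! That is, if you patiently read all the way through posts 1, 2, and 3 (even if you didn't actually try the steps yourself, you've clearly put in the effort). Here we share what we've seen, what we know, and content we think is excellent, for everyone to learn from and discuss. Good content is meant to be studied together.

A sweltering summer, a restless heart. If your abilities can't yet carry your ambitions, calm down and study!!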
